AI Doesn't Really Learn: Understanding The Implications For Responsible Use

AI: Sophisticated Pattern Recognition, Not True Learning

At its core, AI is a highly sophisticated system for recognizing patterns in vast datasets. It uses statistical analysis and complex algorithms to identify correlations and make predictions; in machine learning, "training" means adjusting a model's numerical parameters until its outputs fit the examples it has been shown. This process is fundamentally different from human learning. We learn by understanding context, drawing inferences, and applying knowledge to novel situations, whereas AI identifies patterns in data without genuine comprehension.

- AI excels at tasks that require pattern recognition: image recognition, language translation, and even complex games such as chess and Go. AI can outperform humans in these areas because it can process and analyze data at a scale far beyond what any human could manage.

- AI lacks genuine comprehension and reasoning abilities: An AI might translate a sentence accurately without understanding its nuances of meaning or the context in which it is spoken. It is matching patterns learned from its training data.

- AI operates on algorithms and statistical patterns derived from data, not conscious understanding: AI does not possess consciousness, self-awareness, or the capacity for genuine understanding. It is a powerful tool, not a sentient being.

The Implications of Misunderstanding AI's Capabilities

Anthropomorphizing AI, that is, attributing human-like intelligence and consciousness to it, is a dangerous misconception. It leads to inflated expectations and unrealistic applications of the technology, and overestimating AI's capabilities can have severe consequences.

- Over-reliance on AI without human oversight can lead to errors and biases: AI systems are only as good as the data they are trained on. Biased training data produces biased results, which can perpetuate and even amplify existing societal inequalities.

- Misunderstanding AI's limitations can lead to flawed decision-making: Relying solely on AI predictions without critical human evaluation can produce poor decisions with significant real-world consequences, for example in lending, hiring, or medical diagnosis.

- The perception of AI as a self-learning entity can hinder critical evaluation of its output: If we assume AI is infallible, we are less likely to question its results and identify potential errors or biases.
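To make the point about biased data concrete, here is a deliberately tiny sketch in Python. It assumes a hypothetical set of historical approval records that are skewed by group (the data, the group labels "A" and "B", and the function names are all invented for illustration). The "model" does nothing more than count which outcome was most frequent for each group in its training data. It has no notion of why the outcomes differ, so it simply reproduces the skew it was shown.

```python
from collections import Counter, defaultdict

# Hypothetical, deliberately skewed historical records: (group, outcome).
# In this invented history, group "A" was approved far more often than group "B".
training_data = (
    [("A", "approve")] * 80 + [("A", "deny")] * 20
    + [("B", "approve")] * 30 + [("B", "deny")] * 70
)

def train_majority_model(records):
    """Count outcomes per group and keep the most frequent one for each group."""
    counts = defaultdict(Counter)
    for group, outcome in records:
        counts[group][outcome] += 1
    # The resulting "model" is just a lookup table of majority outcomes per group.
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}

model = train_majority_model(training_data)

def predict(group):
    """Return the outcome the model associates with a group, with no reasoning about why."""
    return model[group]

print(predict("A"))  # approve
print(predict("B"))  # deny
```

Real systems are vastly more complex than this lookup table, but they can show the same basic behavior: a model fit to skewed data will tend to reproduce that skew unless humans examine the data and the outputs critically.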

Ethical Considerations and Responsible AI Development

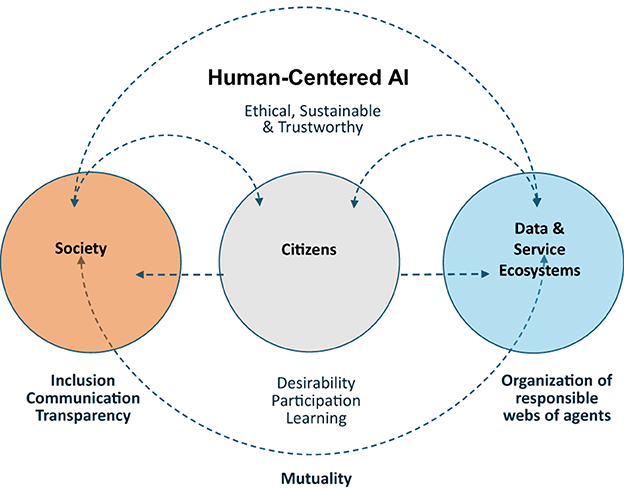

Transparency and explainability are paramount in AI systems. We need to understand how an AI arrives at a particular decision to ensure accountability and identify potential problems. Robust testing and validation are also critical to mitigating risks and biases. Human oversight and control remain essential in all AI applications.

- Prioritize fairness and avoid bias in data collection and algorithm design: Careful attention must be paid to the data used to train AI systems to ensure they are fair and unbiased.

- Implement mechanisms for accountability and redress in case of AI-related errors: Clear guidelines and processes are needed to address instances where AI systems make mistakes or cause harm.

- Promote ethical guidelines and regulations for responsible AI development and deployment: Collaboration between researchers, policymakers, and ethicists is crucial to establishing ethical frameworks for AI.

The Future of AI: A Focus on Collaboration, Not Replacement

The future of AI is not about replacing humans; it is about collaboration. By combining AI's strengths in pattern recognition and data processing with human judgment, critical thinking, and creativity, we can achieve better outcomes than either could reach alone.

- AI can augment human capabilities, but not replace human judgment entirely: AI can be a powerful tool to assist humans in decision-making, but it should not replace human oversight and responsibility.

- Focus on developing AI systems that support and enhance human decision-making: AI should be designed to complement human abilities, not replace them.

- Promote interdisciplinary collaboration between AI researchers, ethicists, and policymakers: A collaborative approach is essential to ensure the ethical and responsible development and deployment of AI.

Conclusion: Understanding "AI Doesn't Really Learn" for a Better Future

This article has highlighted the critical distinction between AI's pattern recognition capabilities and true human learning. Recognizing that AI doesn't really learn is not about diminishing its potential; it is about harnessing its power responsibly. Ethical considerations, transparency, and human oversight are paramount to ensuring that AI benefits humanity. Let's engage in critical discussions about AI's limitations and potential, support further research and responsible development, and work together to shape a future where AI serves people ethically and responsibly.
