AI's Learning Limitations: Promoting Responsible AI Use

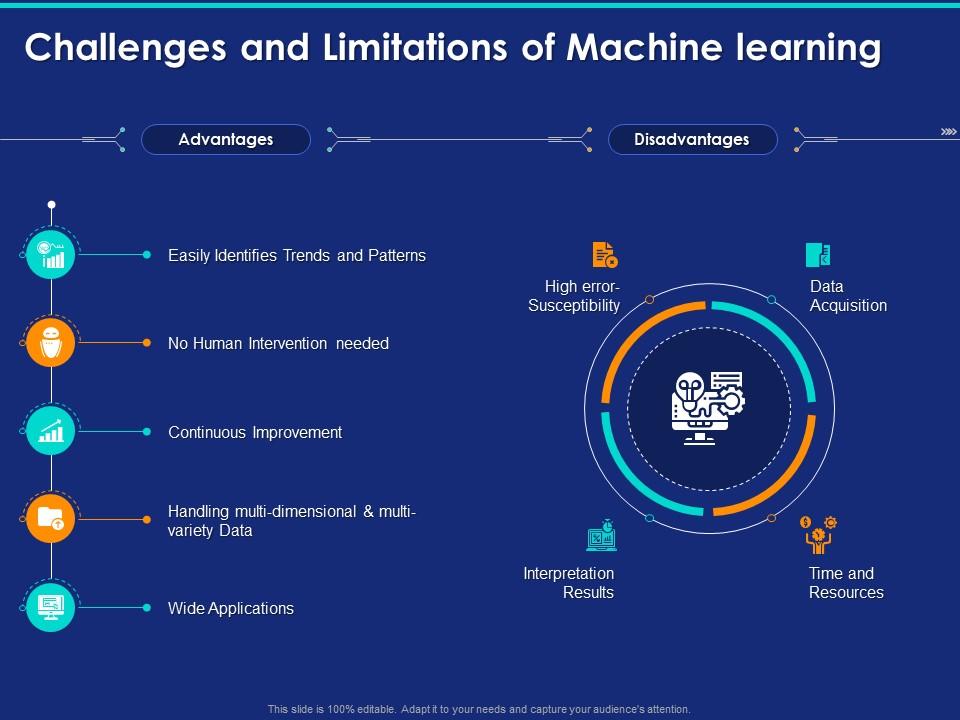

Artificial intelligence (AI) is transforming our world at a rapid pace, yet its inherent learning limitations pose significant challenges that demand responsible development and deployment. This article examines three areas where current AI systems fall short (data bias, limited generalization, and lack of transparency) and advocates for ethical and responsible AI practices. Our goal is to understand these limitations and promote the development and use of AI that benefits all of humanity.

<h2>Data Bias and its Impact on AI Learning</h2>

Data bias, a pervasive issue in AI, refers to the systematic errors or inaccuracies in datasets used to train AI models. These biases often reflect existing societal prejudices and inequalities, leading to AI systems that perpetuate and even amplify these problems. The data used to train an AI model is crucial; if the data is biased, the resulting model will likely be biased as well. This is a fundamental aspect of AI's learning limitations.

For example, facial recognition systems trained primarily on images of light-skinned individuals often perform poorly on darker-skinned individuals, leading to misidentification and potentially harmful consequences. Similarly, loan application algorithms trained on historical data that reflects discriminatory lending practices can perpetuate financial inequality by unfairly denying loans to certain demographic groups.

The effects of data bias are far-reaching:

- Inaccurate predictions and unfair outcomes: Biased AI systems are less accurate for under-represented groups, which can translate into discriminatory decisions in sectors such as hiring, lending, and policing.

- Reinforcement of societal inequalities: AI systems, trained on biased data, can reinforce existing societal inequalities and create new ones.

- Erosion of trust in AI systems: Biased AI systems erode public trust and confidence in the technology.

Mitigating bias requires careful attention to data collection, preprocessing, and algorithmic design. Techniques such as collecting or resampling data to balance under-represented groups, data augmentation (generating transformed or synthetic variants of existing examples to increase diversity), and fairness-aware algorithms (methods that explicitly measure and constrain disparities between groups) are important steps toward addressing this core limitation of AI learning.
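Measuring a disparity is usually the first step before mitigating it. The following is a minimal sketch, not a complete fairness audit: it computes the approval rate per group and the gap between the highest and lowest rate, a quantity often called the demographic parity difference. The group labels, the decisions, and the function names are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Return the fraction of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest per-group selection rate."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan decisions (1 = approved) for two made-up groups.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
approved = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, approved)
gap = demographic_parity_difference(groups, approved)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of zero would mean both groups are approved at the same rate. A large gap does not by itself prove discrimination, but it flags a model or dataset that deserves closer inspection.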

<h2>The Limits of Generalization in AI Models</h2>

Generalization, a critical aspect of AI's performance, refers to an AI model's ability to perform well on unseen data – data it hasn't encountered during its training. Achieving true generalization remains a significant challenge in AI. Many AI models excel at tasks they've been specifically trained for but struggle when faced with novel situations or slightly altered inputs. This limitation significantly restricts the real-world applicability of many AI systems.

For instance, an AI model trained to identify cats in a specific setting (e.g., brightly lit images of domestic cats) might fail to recognize cats in different settings (e.g., dimly lit images of wild cats). This failure to generalize highlights a crucial limitation in current AI systems.

Several factors affect generalization:

- Size and quality of the training data: Larger, more diverse, and higher-quality datasets generally lead to better generalization.

- Complexity of the AI model: Overly complex models can overfit the training data, leading to poor generalization.

- The nature of the problem being solved: Some problems are inherently more challenging to generalize than others.

Approaches like transfer learning (applying knowledge gained from one task to another) and domain adaptation (adapting a model trained on one dataset to perform well on another) are being actively explored to enhance generalization capabilities and address this significant aspect of AI's learning limitations.
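One simple way to see a generalization failure is to compare accuracy on the training data with accuracy on held-out data. The sketch below is illustrative only and assumes a synthetic one-dimensional dataset with 20% label noise. It uses a 1-nearest-neighbor classifier, which memorizes every training point (including the noisy ones), so its training accuracy overstates how well it will do on new samples. All names and parameters here are assumptions made for the example.

```python
import random

def make_data(n, rng, noise=0.2):
    """Points x in [0, 1); the true label is 1 when x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:
            label = 1 - label  # inject label noise
        data.append((x, label))
    return data

def nearest_neighbor_predict(train, x):
    """1-nearest-neighbor: copy the label of the closest training point."""
    return min(train, key=lambda pair: abs(pair[0] - x))[1]

def accuracy(train, data):
    """Fraction of points in `data` that the memorizing model labels correctly."""
    correct = sum(nearest_neighbor_predict(train, x) == y for x, y in data)
    return correct / len(data)

rng = random.Random(0)        # fixed seed so the run is repeatable
train = make_data(50, rng)
test = make_data(200, rng)    # unseen data drawn from the same distribution

train_acc = accuracy(train, train)
test_acc = accuracy(train, test)
gap = train_acc - test_acc
print(f"train={train_acc:.2f} test={test_acc:.2f} gap={gap:.2f}")
```

The training accuracy is perfect because each training point is its own nearest neighbor. The difference between the two numbers is the generalization gap, and a large gap is a common symptom of overfitting.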

<h2>Explainability and Transparency in AI Systems</h2>

Many AI models, particularly deep learning models, operate as "black boxes," making it difficult to understand their decision-making processes. This lack of transparency poses a significant challenge for trust and accountability. Understanding why an AI system arrived at a particular decision is crucial, especially in high-stakes applications like healthcare and finance, which is why explainable AI (XAI) has become an active area of research.

The consequences of lacking transparency are severe:

- Difficulty in debugging and improving AI models: Understanding the reasoning behind errors is essential for improving AI systems.

- Reduced user trust and adoption: Lack of transparency hinders user trust and limits the widespread adoption of AI.

- Increased risk of misuse and malicious applications: When a system's reasoning cannot be inspected, misuse and manipulation are harder to detect and correct.

Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) aim to improve the explainability of AI systems. Both are post-hoc methods: rather than exposing a model's internal workings, they estimate how much each input feature contributed to an individual prediction. However, developing truly transparent AI systems remains an ongoing challenge.
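To make the idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force for a tiny, hypothetical three-feature model. It does not use the SHAP library or its API. It also assumes one particular way of "removing" a feature, namely replacing it with a baseline value of zero, which is a common but not the only choice. Brute force is only feasible for a handful of features, because the number of subsets doubles with each feature added.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function over feature indices 0..n_features-1."""
    players = list(range(n_features))
    values = []
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(len(others) + 1):
            # Weight of a coalition of this size: |S|! (n - |S| - 1)! / n!
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                # Marginal contribution of feature i when added to the coalition.
                total += weight * (value_fn(coalition | {i}) - value_fn(coalition))
        values.append(total)
    return values

def model(x):
    """Hypothetical toy model with an interaction between features 0 and 2."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]

instance = [1.0, 2.0, 1.0]   # the prediction being explained
baseline = [0.0, 0.0, 0.0]   # assumed reference input for "absent" features

def value_fn(present):
    """Model output when only the features in `present` take the instance's values."""
    mixed = [instance[j] if j in present else baseline[j]
             for j in range(len(instance))]
    return model(mixed)

phi = shapley_values(value_fn, 3)
print(phi)  # approximately [3.5, 2.0, 1.5]
```

The three attributions sum to 7.0, the difference between the model's output on the instance and on the baseline. The interaction term is split evenly between features 0 and 2, which is how Shapley values treat features that only contribute jointly.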

<h2>Addressing AI's Learning Limitations: Promoting Responsible AI Development</h2>

Addressing AI's learning limitations requires a multi-faceted approach centered on responsible AI development and deployment. This necessitates the adoption of ethical guidelines and best practices throughout the entire AI lifecycle. Interdisciplinary collaboration among AI researchers, ethicists, policymakers, and other stakeholders is crucial to ensure that AI systems are developed and used responsibly.

Promoting responsible AI use involves:

- Prioritizing fairness and inclusivity in AI design: Addressing bias and ensuring equitable outcomes for all.

- Ensuring data privacy and security: Protecting sensitive data used to train and operate AI systems.

- Establishing clear accountability mechanisms: Determining responsibility for the actions and outcomes of AI systems.

- Promoting education and public awareness about AI: Educating the public about AI's capabilities and limitations.

Rigorous testing and validation of AI systems are essential to identify and mitigate potential biases, errors, and unforeseen consequences.

<h2>Conclusion: Moving Forward with Responsible AI</h2>

The key learning limitations of AI – data bias, generalization limitations, and lack of transparency – pose significant challenges to the responsible development and deployment of AI. Addressing these limitations is not merely a technical problem but a societal imperative. By understanding AI's learning limitations and actively participating in the responsible development and deployment of AI, we can harness its transformative power while mitigating its potential harms. Learn more about responsible AI practices and join the conversation today!

Featured Posts

-

Munguia Dominates Sucher Avenges Previous Knockout Loss

May 31, 2025

Munguia Dominates Sucher Avenges Previous Knockout Loss

May 31, 2025 -

Unsolved Mystery Banksy Paintings Origin And Tagger

May 31, 2025

Unsolved Mystery Banksy Paintings Origin And Tagger

May 31, 2025 -

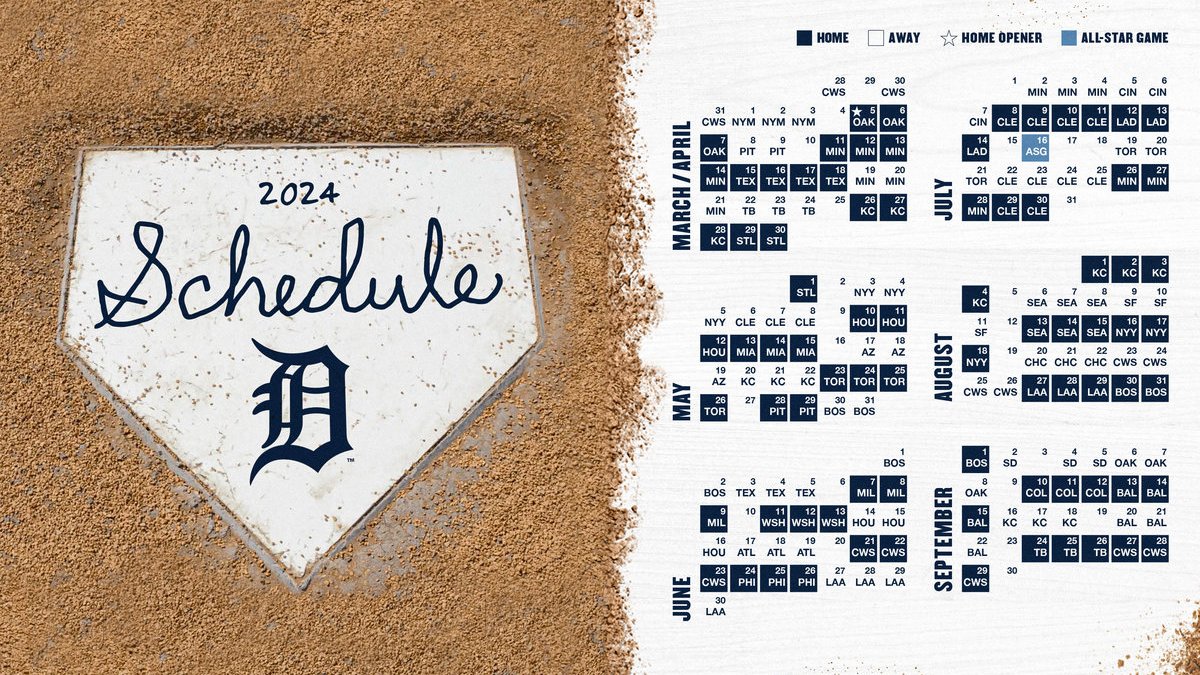

Detroit Tigers 2025 Opening Day Clash With Chicago White Sox At Comerica Park

May 31, 2025

Detroit Tigers 2025 Opening Day Clash With Chicago White Sox At Comerica Park

May 31, 2025 -

Covid 19 Cases Surge World Health Organization Points To New Variant

May 31, 2025

Covid 19 Cases Surge World Health Organization Points To New Variant

May 31, 2025 -

East London Blaze 125 Firefighters At Peak Of Operation

May 31, 2025

East London Blaze 125 Firefighters At Peak Of Operation

May 31, 2025