Algorithm-Driven Radicalization: Holding Tech Companies Accountable For Mass Shootings

The Role of Algorithms in Amplifying Extremist Content

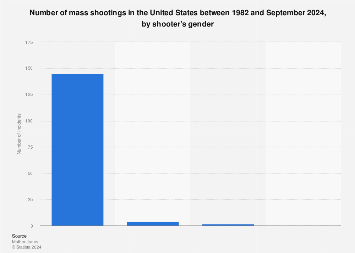

Algorithms, the invisible engines driving our online experiences, play a significant role in amplifying extremist content and fostering environments ripe for radicalization. This happens through two primary mechanisms: echo chambers and targeted advertising.

Echo Chambers and Filter Bubbles

Algorithms designed to maximize user engagement often prioritize content similar to what a user has previously interacted with. Over time, this can create echo chambers and filter bubbles that reinforce existing beliefs and limit exposure to diverse perspectives, including counter-narratives to extremist ideologies.

- Engagement over Truth: Many algorithms rank content by clicks and views, regardless of whether the information is accurate. This can accelerate the spread of misinformation and hate speech, which is especially damaging when it promotes extremist viewpoints.

- Algorithmic Bias: Studies have shown that algorithms can exhibit biases, inadvertently favoring certain types of content over others. When the favored content is sensational or divisive, this bias can disproportionately amplify extremist narratives.

- Examples: YouTube's recommendation system has been criticized for pushing users down rabbit holes of extremist content. Similarly, Facebook's algorithms have been implicated in the spread of conspiracy theories and hate speech, creating fertile ground for radicalization.

Targeted Advertising and Radicalization

Targeted advertising, fueled by vast amounts of user data collected by tech companies, allows extremist groups to precisely target vulnerable individuals with tailored messages designed to recruit and radicalize them.

- Data-Driven Targeting: Platforms collect user data, including search history, online activity, and social media interactions, and their algorithms use it to build detailed user profiles. Those profiles can be exploited to identify potential recruits for extremist ideologies.

- Personalized Propaganda: This data is then used to deliver highly personalized extremist content, reinforcing existing biases and pushing individuals further down the path to radicalization.

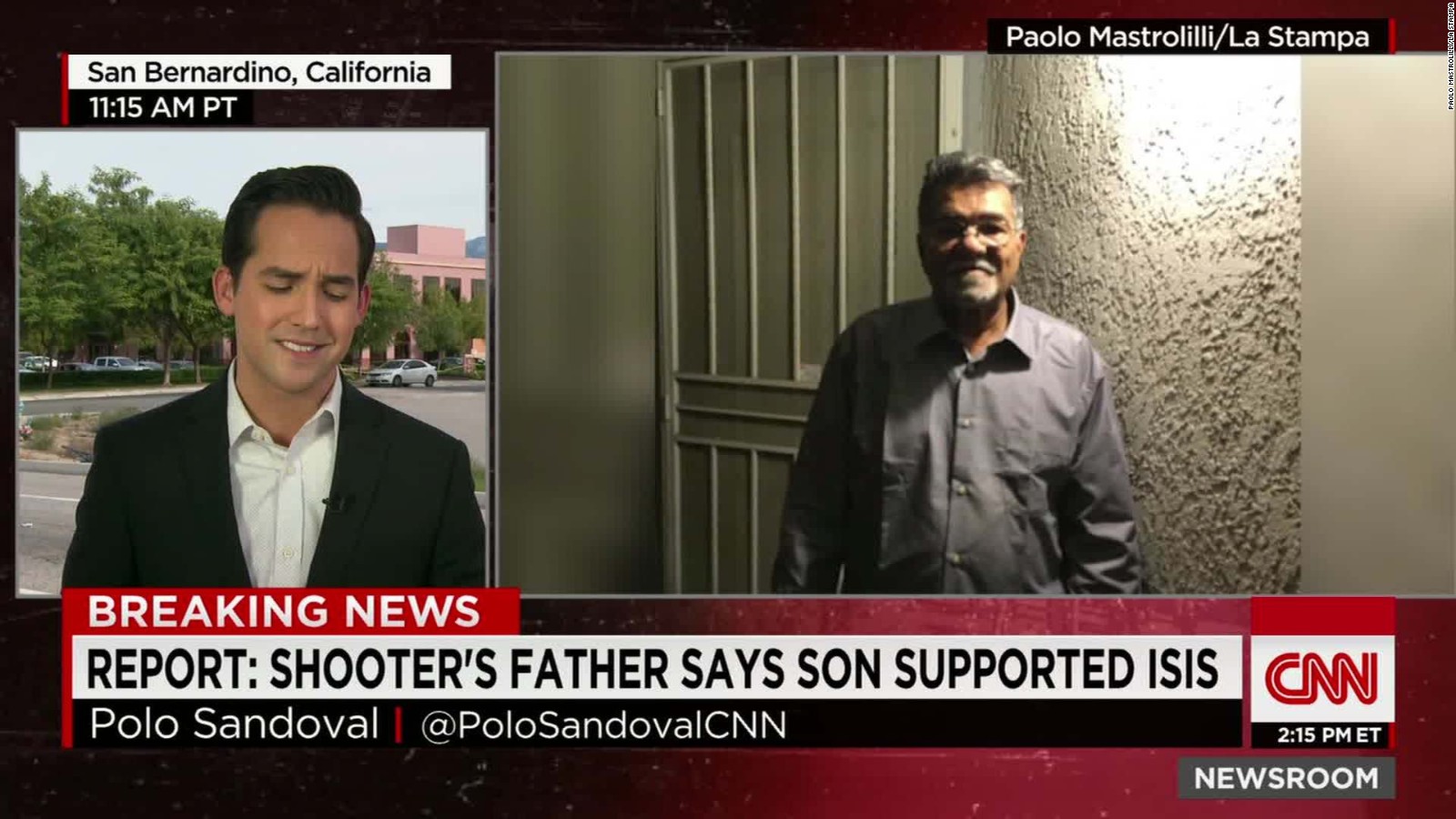

- Research Links: Numerous studies link online radicalization, facilitated by targeted advertising and algorithmic amplification, to real-world violence and acts of terrorism.

The Legal and Ethical Responsibilities of Tech Companies

The legal and ethical responsibilities of tech companies in addressing algorithm-driven radicalization are complex and multifaceted. The debate often centers on Section 230 and on the broader ethical obligations that extend beyond legal frameworks.

Section 230 and its Limitations

Section 230 of the Communications Decency Act shields online platforms from liability for user-generated content. While intended to protect free speech and innovation, its limitations have become increasingly apparent in the context of algorithm-driven radicalization.

- Arguments for Reform: Critics argue that Section 230 protects tech companies from the consequences of their algorithms' role in spreading harmful content. They advocate for reforms that would hold platforms accountable for the content they actively promote through their algorithms.

- Arguments Against Reform: Others warn that altering Section 230 could stifle free speech and innovation, hindering the development of online platforms.

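- Legal Challenges: The law on how Section 230 applies to algorithm-driven radicalization is still unsettled, leading to ongoing legal challenges and uncertainty. In Gonzalez v. Google (2023), for example, the U.S. Supreme Court was asked whether Section 230 protects YouTube's recommendations of ISIS videos but declined to decide the question, leaving it open.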

Ethical Obligations Beyond Legal Frameworks

Even where Section 230 shields them from liability, tech companies have a strong ethical obligation to proactively combat the spread of extremist ideologies on their platforms. This goes beyond simply removing content after it has already caused harm.

- Proactive Measures: Tech companies should invest in advanced content moderation techniques, including AI-powered detection systems, to identify and remove extremist content before it can reach a wide audience.

- Transparency and Accountability: Companies must be more transparent about how their algorithms work and be held accountable for the role those algorithms play in spreading extremism.

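- Industry Best Practices: Cross-industry collaboration and shared best practices, such as the hash-sharing database of known terrorist content maintained by the Global Internet Forum to Counter Terrorism (GIFCT), are crucial for addressing this complex issue.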

Practical Steps Towards Accountability

Holding tech companies accountable for algorithm-driven radicalization requires a multi-pronged approach involving improved content moderation strategies, increased transparency, and robust government regulation.

Improved Content Moderation Strategies

More sophisticated and effective content moderation strategies are crucial for identifying and removing extremist content.

- AI-Powered Detection: Investing in advanced AI systems that can effectively identify and flag extremist content in multiple languages and formats is paramount.

- Human Oversight: Human review remains essential to ensure accuracy and prevent censorship of legitimate speech.

- Community Reporting: Encouraging users to report harmful content is a vital component of a comprehensive content moderation strategy.

Increased Transparency and Public Reporting

Greater transparency in algorithms and public reporting of efforts to combat extremism are vital for accountability.

- Algorithmic Transparency: Tech companies should provide more information about how their algorithms work and the criteria used to prioritize content.

- Mandatory Reporting: Requiring companies to publicly report the volume of extremist content they identify and remove would increase accountability.

- Independent Audits: Independent audits of algorithms and content moderation practices could help ensure that companies are fulfilling their responsibilities.

Government Regulation and Oversight

Government regulation plays a crucial role in holding tech companies accountable.

- Targeted Legislation: New legislation is needed to address the unique challenges posed by algorithm-driven radicalization, balancing free speech protections with the need to prevent violence.

- International Collaboration: International collaboration is crucial, as extremist ideologies often transcend national borders.

- Public-Private Partnerships: Effective solutions will require collaboration between government agencies, tech companies, and civil society organizations.

Conclusion

Algorithm-driven radicalization plays a significant role in fueling online extremism and contributing to real-world violence, including mass shootings. Through their recommendation algorithms and targeted advertising practices, tech companies bear substantial responsibility for mitigating this threat. Holding them accountable requires a combination of legal reform, ethical commitment, improved content moderation, greater transparency, and robust government oversight. How can we effectively combat this threat and prevent future tragedies? It will take collective action: let's demand change from our tech giants and policymakers alike.
