Are Tech Companies Responsible When Algorithms Radicalize Mass Shooters?

The Role of Algorithms in Online Radicalization

Algorithms, the largely invisible engines of online content delivery, decide much of what users see. Their possible role in radicalization is a growing concern.

Echo Chambers and Filter Bubbles

Algorithms can create echo chambers and filter bubbles by prioritizing content that aligns with a user's existing beliefs. When those beliefs are extremist, this reinforcement limits exposure to other perspectives and can foster radicalization.

- Examples: Commonly cited cases include YouTube's recommendation system guiding users down rabbit holes of extremist content and Facebook's newsfeed prioritizing posts from like-minded sources.

- Studies: Research has linked echo chamber effects to increased polarization, which may in turn contribute to violent extremism, although a correlation alone does not establish that one causes the other.

- Mechanics: Recommendation systems often use collaborative filtering, analyzing user behavior to predict preferences, potentially amplifying extreme viewpoints.
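
To make the mechanics above concrete, here is a minimal sketch of user-based collaborative filtering. It is an illustration only, not the method of any real platform: the user names, video names, engagement scores, and the `recommend` helper are all hypothetical, and production systems are far more complex. It shows how a recommender that only looks at "users like you" suggests more of what similar users engaged with and never surfaces content from dissimilar users.

```python
from math import sqrt

# Hypothetical interaction data: user -> {item: engagement score}.
# All names and numbers are invented for illustration.
ratings = {
    "alice": {"video_a": 5, "video_b": 4},
    "bob":   {"video_a": 5, "video_b": 5, "video_c": 4},
    "carol": {"video_d": 5, "video_e": 4},
}

def cosine_similarity(u, v):
    """Cosine similarity between two sparse engagement vectors."""
    shared = set(u) & set(v)
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def recommend(user, ratings, top_n=3):
    """Rank unseen items by similarity-weighted engagement of other users."""
    seen = ratings[user]
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(seen, other_ratings)
        if sim <= 0:
            continue  # users with no overlapping history contribute nothing
        for item, score in other_ratings.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim * score
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("alice", ratings))  # → ['video_c']
```

In this toy data, alice is only ever shown what bob watched, because carol shares no history with her. That narrowing is the echo chamber effect in miniature: the more a user's history resembles one cluster, the less content from outside that cluster is ever scored.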

Targeted Advertising and Propaganda

Algorithms facilitate the spread of targeted propaganda and extremist recruitment. Personalized advertising and content recommendations deliver extremist messages directly to susceptible individuals.

- Examples: Extremist groups using Facebook and Instagram ads to reach specific demographics; tailored online recruitment strategies that exploit personal vulnerabilities.

- Effectiveness: Targeted advertising can be highly effective at disseminating extremist narratives and mobilizing individuals, because it reaches the people most likely to respond.

- Difficulty: Identifying and removing such content is a significant challenge due to the constant evolution of tactics and the sheer volume of online information.

The Spread of Misinformation and Conspiracy Theories

Algorithms amplify the spread of misinformation and conspiracy theories, often creating fertile ground for radicalization. False narratives can fuel anger, distrust, and a sense of injustice, contributing to violent extremism.

- Examples: The rapid dissemination of false narratives about election fraud and government conspiracies; algorithms boosting unfounded claims about marginalized groups.

- Impact: Misinformation significantly impacts the radicalization process by distorting reality and creating an environment conducive to violent ideologies.

- Challenges: Combating misinformation online requires a multifaceted approach, including media literacy initiatives, fact-checking, and improved algorithmic transparency.

Arguments for Tech Company Responsibility

Proponents of accountability argue that tech companies should answer for the content their platforms amplify, given the role those platforms can play in enabling radicalization.

Legal and Ethical Obligations

Legal and ethical arguments support holding tech companies accountable for the harm caused by their algorithms. On this view, facilitating the spread of extremist content amounts to negligence or even complicity.

- Legal Precedents: Proponents argue that existing laws on incitement to violence and on aiding and abetting could be applied to tech companies.

- Ethical Frameworks: Ethical considerations demand responsibility for the societal impact of powerful technologies.

- Negligence/Complicity: Arguments can be made that tech companies are negligent in their failure to adequately moderate harmful content and complicit in facilitating radicalization.

The Profit Motive and Deregulation

The pursuit of profit often clashes with the responsibility to prevent the spread of harmful content. Insufficient regulation exacerbates the issue.

- Profits over Safety: Critics point to cases in which companies have prioritized user engagement and advertising revenue over safety and content moderation.

- Lobbying and Regulation: Tech companies' lobbying efforts often influence regulatory decisions, impacting content moderation efforts.

- Consequences: Insufficient content moderation leads to the proliferation of extremist content and increases the risk of real-world violence.

Arguments Against Tech Company Responsibility

Counterarguments exist, focusing on freedom of speech and the practical challenges of content moderation.

Freedom of Speech and Censorship Concerns

Concerns about censorship and the limitations of content moderation often arise. Defining and removing harmful content while respecting freedom of speech presents a significant challenge.

- First Amendment Rights: In the US, First Amendment protections for freedom of speech are a major consideration.

- Defining Harmful Content: Defining and identifying harmful content is difficult and inherently subjective, which can lead to biased moderation.

- Bias in Moderation: Content moderation systems can inadvertently exhibit biases, disproportionately affecting certain groups or viewpoints.

The Difficulty of Moderation and Enforcement

Effectively moderating content at scale is incredibly challenging. The sheer volume of online information and the constant evolution of extremist tactics pose significant hurdles.

- Limitations of AI: AI-powered moderation tools are prone to errors, both missing harmful content and flagging harmless content, and can be evaded or manipulated.

- Human Cost: Human content moderators face immense psychological strain and ethical dilemmas.

- Cat-and-Mouse Game: Extremists constantly adapt their tactics, making it a continuous struggle for platforms to stay ahead.

Conclusion

The question of whether tech companies bear responsibility when algorithms radicalize mass shooters is multifaceted and lacks easy answers. The arguments for accountability highlight the companies' role in enabling the spread of harmful content and the potential for negligence, while the counterarguments emphasize freedom of speech concerns and the immense challenges of content moderation. Understanding whether and how algorithms contribute to mass shootings requires ongoing investigation and a multi-stakeholder approach involving tech companies, policymakers, researchers, and civil society. We must continue the conversation about the impact of algorithms on radicalization and explore ways to hold tech companies accountable while safeguarding fundamental rights. Share your thoughts: How can we better address the complex relationship between technology, online radicalization, and corporate responsibility?

Featured Posts

-

Is Miley Cyrus Estranged From Billy Ray A Look At The Family Feud

May 31, 2025

Is Miley Cyrus Estranged From Billy Ray A Look At The Family Feud

May 31, 2025 -

The Nintendo Switch And Indie Games A Complex Relationship

May 31, 2025

The Nintendo Switch And Indie Games A Complex Relationship

May 31, 2025 -

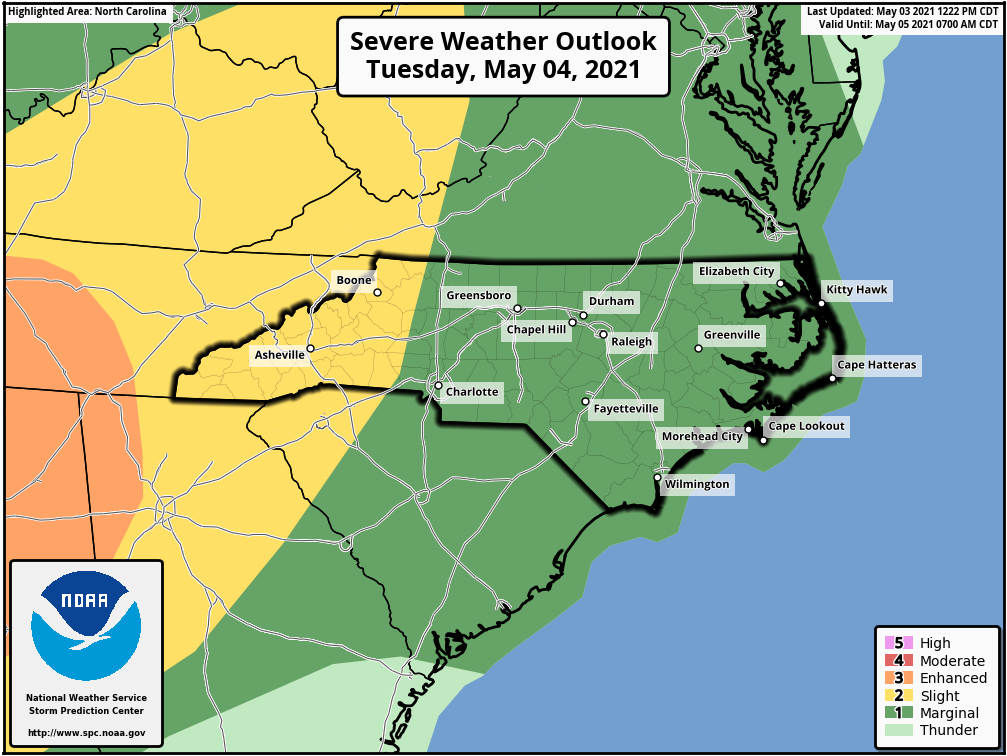

Severe Storms Possible Across Carolinas Tracking Active Vs Expired Weather Alerts

May 31, 2025

Severe Storms Possible Across Carolinas Tracking Active Vs Expired Weather Alerts

May 31, 2025 -

Top 10 Android Apps For Seamless Travel

May 31, 2025

Top 10 Android Apps For Seamless Travel

May 31, 2025 -

Who Addresses Growing Covid 19 Cases A New Variant Under Scrutiny

May 31, 2025

Who Addresses Growing Covid 19 Cases A New Variant Under Scrutiny

May 31, 2025