Building Voice Assistants Made Easy: OpenAI's 2024 Developer Announcements


Simplified Natural Language Understanding (NLU) with OpenAI's New APIs

OpenAI's 2024 updates target the core of any voice assistant: Natural Language Understanding (NLU), the process of turning what a user says into text and extracting its meaning. The improvements fall into three key areas:

Improved Speech-to-Text Capabilities

- Higher accuracy: OpenAI's new speech-to-text API achieves a lower Word Error Rate (WER) than earlier models, so spoken words are transcribed into text more precisely.

- Support for multiple languages: Developers can now build voice assistants that cater to a global audience, with support for a wider range of languages and dialects.

- Reduced latency: The time it takes to process speech and generate text is dramatically reduced, leading to a more responsive and natural user experience.

- Improved handling of accents and background noise: The API is more robust in handling variations in accents and noisy environments, ensuring accurate transcription even in challenging conditions.

The new API is straightforward to integrate, and OpenAI's documentation includes code examples to get started. In many scenarios, developers can achieve a WER below 5%, a significant improvement over previous generations of speech-to-text technology. This helps your AI voice assistant understand user commands accurately, even in less-than-ideal conditions.
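To check a figure like "WER below 5%" against your own recordings, you can transcribe a test clip and score it against a human-written reference transcript. The sketch below assumes the official `openai` Python package (v1 or later), an `OPENAI_API_KEY` environment variable, and the `whisper-1` transcription model; the article does not name a specific model. WER is computed as a word-level edit distance divided by the number of reference words, with only lowercasing and whitespace splitting as normalization.

```python
def transcribe(path: str, model: str = "whisper-1") -> str:
    """Send an audio file to OpenAI's transcription endpoint and return the text.

    Assumes the `openai` package (v1+) and OPENAI_API_KEY in the environment.
    The import is deferred so word_error_rate() works without the SDK installed.
    """
    from openai import OpenAI  # deferred import: only needed for live calls

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as audio_file:
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Computed as a word-level Levenshtein distance. Normalization here is only
    lowercasing and whitespace splitting; real evaluations often strip
    punctuation as well.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] = edit distance between the reference words seen so far
    # and the first j hypothesis words (rolling one-row DP table).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substituted word out of five reference words.
    print(word_error_rate("turn on the kitchen lights", "turn on the kitchen light"))  # prints 0.2
```

In practice you would compare `transcribe("clip.wav")` against the reference for a set of clips recorded in the conditions your users actually face (accents, background noise) and average the results.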

Enhanced Intent Recognition and Entity Extraction

Understanding what a user says is only half the battle; understanding why they're saying it is crucial for building effective voice assistants. OpenAI's improvements in intent recognition and entity extraction make this easier than ever:

- More robust handling of complex queries: The API can now understand nuanced and multi-part questions, providing more accurate responses even when users phrase their requests in complex ways.

- Better context understanding: The system maintains context across multiple turns of conversation, allowing for more natural and flowing interactions.

- Improved accuracy in identifying key information within user utterances: The API excels at extracting relevant entities (like dates, times, locations, and names) from user input, enabling your voice assistant to perform specific actions based on the information provided.

This means your voice assistant can understand requests like "Remind me to pick up dry cleaning at 3 PM next Tuesday at the Main Street Cleaners" with ease, accurately extracting all the relevant information to set the correct reminder.
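One way to pull the task, time, and place out of a request like this is function calling with the Chat Completions API: you describe the fields you want as a JSON schema, and the model returns them as a JSON string of arguments. The tool name `create_reminder`, its fields, the `gpt-4o` model choice, and the helper functions are illustrative assumptions, not an OpenAI-provided schema. Because the Chat Completions API is stateless, multi-turn context comes from resending earlier messages, which the hypothetical `build_messages` helper trims to the most recent turns.

```python
import json
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical tool schema: name and fields are illustrative choices.
REMINDER_TOOL = {
    "type": "function",
    "function": {
        "name": "create_reminder",
        "description": "Create a reminder from the user's request.",
        "parameters": {
            "type": "object",
            "properties": {
                "task": {"type": "string", "description": "What to do"},
                "datetime_text": {"type": "string", "description": "When, as the user said it"},
                "location": {"type": "string", "description": "Where, if mentioned"},
            },
            "required": ["task", "datetime_text"],
        },
    },
}


@dataclass
class Reminder:
    task: str
    datetime_text: str  # raw phrase like "3 PM next Tuesday"; resolving it to a timestamp is left to the app
    location: Optional[str] = None


def build_messages(history: List[Dict[str, str]], user_text: str, max_turns: int = 10) -> List[Dict[str, str]]:
    """Keep system messages plus the last `max_turns` user/assistant pairs.

    Assumes `history` holds only plain text messages (no tool-call messages).
    """
    system = [m for m in history if m["role"] == "system"]
    others = [m for m in history if m["role"] != "system"]
    recent = others[-2 * max_turns:] if max_turns > 0 else []
    return system + recent + [{"role": "user", "content": user_text}]


def parse_reminder(arguments_json: str) -> Reminder:
    """Validate the JSON arguments string returned for the tool call."""
    args = json.loads(arguments_json)
    missing = [k for k in ("task", "datetime_text") if not args.get(k)]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return Reminder(task=args["task"], datetime_text=args["datetime_text"], location=args.get("location"))


def extract_reminder(history: List[Dict[str, str]], user_text: str, model: str = "gpt-4o") -> Reminder:
    """Ask the model to fill in the reminder fields (requires the `openai` package)."""
    from openai import OpenAI  # deferred import: only needed for live calls

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(history, user_text),
        tools=[REMINDER_TOOL],
        # Force the model to answer through this tool rather than free text.
        tool_choice={"type": "function", "function": {"name": "create_reminder"}},
    )
    message = response.choices[0].message
    if not message.tool_calls:
        raise ValueError("model did not return a tool call")
    return parse_reminder(message.tool_calls[0].function.arguments)
```

Validating the arguments locally, rather than trusting them, lets the assistant ask a follow-up question ("What time should I remind you?") when a required field is missing.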

Pre-trained Models for Faster Development

Building a voice assistant from scratch can be time-consuming and resource-intensive. OpenAI addresses this with pre-trained models for common voice assistant tasks:

- Pre-trained models for common voice assistant tasks: OpenAI's pre-trained models can be applied to tasks like setting reminders, playing music, answering questions based on knowledge bases, and more.

- Reduced training time and resources: Developers can leverage these pre-trained models as a starting point, significantly reducing the time and computational resources required for training custom models.

These pre-trained models provide a fantastic foundation, allowing you to focus on developing unique features and differentiating your voice assistant rather than spending months training a basic NLU model.
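A minimal sketch of using a general pre-trained model as the starting point instead of training task-specific NLU: declare each task as a tool, let the model choose one, and route its choice to local code. The tool names and handler functions below are hypothetical placeholders. `TOOLS` would be passed as the `tools` argument of a Chat Completions request, and `dispatch` receives the returned tool call's name and JSON arguments.

```python
import json
from typing import Any, Callable, Dict, List


# Hypothetical handlers standing in for your own reminder and music services.
def set_reminder(task: str, when: str) -> str:
    return f"Okay, I'll remind you to {task} {when}."


def play_music(query: str) -> str:
    return f"Playing {query}."


HANDLERS: Dict[str, Callable[..., str]] = {
    "set_reminder": set_reminder,
    "play_music": play_music,
}

# Tool declarations sent with each request (tools=TOOLS); names match HANDLERS.
TOOLS: List[Dict[str, Any]] = [
    {
        "type": "function",
        "function": {
            "name": "set_reminder",
            "description": "Remind the user to do something at a given time.",
            "parameters": {
                "type": "object",
                "properties": {"task": {"type": "string"}, "when": {"type": "string"}},
                "required": ["task", "when"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "play_music",
            "description": "Play a song, artist, or genre.",
            "parameters": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        },
    },
]


def dispatch(tool_name: str, arguments_json: str) -> str:
    """Route a model-chosen tool call to the matching local handler."""
    handler = HANDLERS.get(tool_name)
    if handler is None:
        return "Sorry, I can't do that yet."
    try:
        arguments = json.loads(arguments_json)
        return handler(**arguments)
    except (json.JSONDecodeError, TypeError):
        # Malformed JSON, or arguments that don't match the handler's signature.
        return "Sorry, I didn't catch all the details."
```

Adding a new capability then means writing one handler and one tool declaration, with no retraining step.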

Streamlined Voice Synthesis and Output with OpenAI's Latest Technologies

A voice assistant is only as good as its output. OpenAI's advancements in voice synthesis technology create natural-sounding and customizable responses.

Natural-Sounding Text-to-Speech (TTS)

OpenAI's improved TTS capabilities provide a significant leap forward in creating realistic and engaging voice interactions:

- Improved intonation, emotion expression, and personalization options: The synthesized speech is now more expressive, conveying intonation and emotions more naturally, leading to a more human-like interaction.

- Support for diverse voices and languages: Developers can choose from a range of voices and languages to create a personalized and inclusive user experience.

The quality is high enough that, in many cases, listeners barely notice the difference between synthetic and human speech, making the user experience significantly more engaging and natural.
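A sketch of generating speech with the `openai` Python package's `client.audio.speech.create` call, assuming the `tts-1` model and the `alloy` voice. The speech endpoint's API reference lists a 4096-character limit on `input` at the time of writing, so longer responses are split at sentence or word boundaries before synthesis. The chunking strategy, the `synthesize` helper, and the file naming are illustrative choices.

```python
from typing import List

MAX_TTS_CHARS = 4096  # input limit listed for the speech endpoint at time of writing


def chunk_text(text: str, limit: int = MAX_TTS_CHARS) -> List[str]:
    """Split text into pieces of at most `limit` characters.

    Prefers sentence boundaries, then spaces, and hard-cuts only when a
    window contains neither.
    """
    chunks: List[str] = []
    remaining = text.strip()
    while len(remaining) > limit:
        window = remaining[:limit]
        cut = max(window.rfind(". "), window.rfind("! "), window.rfind("? "))
        if cut != -1:
            cut += 1  # keep the punctuation mark with its sentence
        else:
            cut = window.rfind(" ")
            if cut <= 0:
                cut = limit  # no usable break point: hard cut
        chunks.append(remaining[:cut].strip())
        remaining = remaining[cut:].strip()
    if remaining:
        chunks.append(remaining)
    return chunks


def synthesize(text: str, out_prefix: str, voice: str = "alloy", model: str = "tts-1") -> List[str]:
    """Write one MP3 file per chunk and return the file paths (requires `openai`)."""
    from openai import OpenAI  # deferred import: only needed for live calls

    client = OpenAI()
    paths: List[str] = []
    for i, chunk in enumerate(chunk_text(text)):
        response = client.audio.speech.create(model=model, voice=voice, input=chunk)
        path = f"{out_prefix}_{i}.mp3"  # MP3 is the endpoint's default output format
        with open(path, "wb") as f:
            f.write(response.content)
        paths.append(path)
    return paths
```

Splitting at sentence boundaries keeps intonation natural across chunks; most voice replies will fit in a single request anyway.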

Customizable Voice Profiles

Creating a unique brand identity or tailoring the voice assistant to specific user preferences is now easier than ever:

- Ability to create custom voice profiles for unique brand identities or specific user preferences: Developers can fine-tune the voice characteristics to match their brand or user base.

- Increased control over voice characteristics: Depending on the model and endpoint, this can include parameters like pitch, tone, and speaking rate, allowing developers to create unique and memorable voices.

This opens up a world of possibilities for branding and personalization, making your voice assistant stand out from the crowd.
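A hedged sketch of an app-level "voice profile" mapped onto the speech endpoint's request parameters. It models only `voice`, `speed` (documented range 0.25 to 4.0), and `response_format`; pitch and tone are not modeled because they are not separate parameters of that endpoint as documented at the time of writing. `VoiceProfile` is a hypothetical helper, and the voice and format sets are assumptions to check against the current API reference (they cover the six voices originally offered with `tts-1`; newer models list more).

```python
from dataclasses import dataclass
from typing import Any, Dict

# Assumed lists; verify against the current API reference before relying on them.
KNOWN_VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}
KNOWN_FORMATS = {"mp3", "opus", "aac", "flac", "wav", "pcm"}


@dataclass(frozen=True)
class VoiceProfile:
    """Hypothetical brand or user profile mapped onto speech request parameters."""

    name: str
    voice: str = "alloy"
    speed: float = 1.0  # documented range: 0.25 to 4.0
    response_format: str = "mp3"

    def __post_init__(self) -> None:
        # Fail early on values the endpoint would reject.
        if self.voice not in KNOWN_VOICES:
            raise ValueError(f"unknown voice: {self.voice}")
        if not 0.25 <= self.speed <= 4.0:
            raise ValueError("speed must be between 0.25 and 4.0")
        if self.response_format not in KNOWN_FORMATS:
            raise ValueError(f"unsupported format: {self.response_format}")

    def request_kwargs(self, text: str) -> Dict[str, Any]:
        """Keyword arguments for client.audio.speech.create(...)."""
        return {
            "model": "tts-1",
            "voice": self.voice,
            "speed": self.speed,
            "response_format": self.response_format,
            "input": text,
        }
```

With a client from the `openai` package, `client.audio.speech.create(**VoiceProfile(name="brand", voice="nova", speed=1.1).request_kwargs("Welcome back!"))` would request speech in that profile. Keeping profiles as validated data makes it easy to store one per brand or per user.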

Seamless Integration with Existing Platforms

OpenAI's tools are designed for easy integration into your existing ecosystem:

- Integration with popular platforms like Alexa, Google Assistant, and other smart home devices: OpenAI's APIs are accessed over HTTP, with official SDKs for languages such as Python and JavaScript, so they can be called from the backend of a voice app on major platforms, bringing your voice assistant to a wider range of devices and services.

This allows developers to quickly integrate their AI voice assistant solutions into popular smart home ecosystems, maximizing reach and user access.
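As an illustration of the backend side of such an integration, the sketch below turns an incoming Alexa custom-skill request into a spoken reply using the Alexa Skills Kit JSON response format. The slot name `query`, the intent setup, the system prompt, and the `gpt-4o-mini` model are assumptions; `reply_fn` is injected so the handler can be tested without network access. Deploying it (for example as an AWS Lambda function) is outside the scope of this sketch.

```python
from typing import Any, Callable, Dict


def to_alexa_response(speech_text: str, end_session: bool = True) -> Dict[str, Any]:
    """Wrap text in the Alexa Skills Kit custom-skill response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech_text},
            "shouldEndSession": end_session,
        },
    }


def handle_alexa_event(event: Dict[str, Any], reply_fn: Callable[[str], str]) -> Dict[str, Any]:
    """Route launch and intent requests; everything else gets a fallback reply."""
    request = event.get("request", {})
    if request.get("type") == "LaunchRequest":
        return to_alexa_response("Hi, what can I help with?", end_session=False)
    if request.get("type") == "IntentRequest":
        slots = request.get("intent", {}).get("slots", {})
        utterance = slots.get("query", {}).get("value")  # hypothetical slot name
        if utterance:
            return to_alexa_response(reply_fn(utterance))
    return to_alexa_response("Sorry, I didn't catch that.")


def openai_reply(utterance: str, model: str = "gpt-4o-mini") -> str:
    """Example reply_fn backed by the Chat Completions API (requires `openai`)."""
    from openai import OpenAI  # deferred import: only needed for live calls

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "Answer briefly; replies are spoken aloud."},
            {"role": "user", "content": utterance},
        ],
    )
    return response.choices[0].message.content or ""
```

A skill's entry point would then call `handle_alexa_event(event, openai_reply)`; the same `reply_fn` can sit behind other platforms' webhooks with a different envelope function.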

Enhanced Developer Tools and Resources

OpenAI has also focused on providing developers with the resources they need to succeed:

Comprehensive Documentation and Tutorials

OpenAI has committed to making voice assistant development more accessible:

- Detailed API documentation: Clear and concise documentation provides developers with all the information they need to use the APIs effectively.

- Interactive tutorials: Step-by-step tutorials guide developers through the process of building voice assistants, from setup to deployment.

- Code examples: Numerous code examples provide practical demonstrations of how to use the APIs and build different functionalities.

- Community support forums: Developers can connect with each other and seek assistance from OpenAI's support team.

Improved Debugging and Monitoring Tools

Building any complex system requires robust debugging capabilities. OpenAI provides the tools to simplify the process:

- Real-time performance monitoring: Track the performance of your voice assistant in real-time, identifying and resolving issues quickly.

- Advanced debugging tools: Pinpoint the source of errors and resolve them efficiently.

- Improved error handling: Robust error handling mechanisms ensure that your voice assistant can gracefully handle unexpected situations.
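A minimal sketch of client-side error handling and latency logging: transient failures are retried with exponential backoff and jitter, and each attempt's duration is logged for basic monitoring. The helper name and defaults are hypothetical. With the `openai` package you might pass `retry_on=(openai.RateLimitError, openai.APIConnectionError, openai.APITimeoutError)`; note that the SDK client also retries some errors on its own, configurable through its `max_retries` option.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
    log: Callable[[str], None] = print,
) -> T:
    """Call fn, retrying the given exception types with exponential backoff.

    Errors not listed in retry_on propagate immediately. `sleep` and `log`
    are injectable so the helper can be tested without waiting.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        start = time.perf_counter()
        try:
            result = fn()
            log(f"attempt {attempt} succeeded in {time.perf_counter() - start:.3f}s")
            return result
        except retry_on as exc:
            elapsed = time.perf_counter() - start
            if attempt == max_attempts:
                log(f"attempt {attempt} failed after {elapsed:.3f}s; giving up")
                raise
            # Delay doubles each attempt; random jitter spreads out retries
            # from many clients hitting the same limit.
            delay = base_delay * (2 ** (attempt - 1)) * (1 + random.random())
            log(f"attempt {attempt} failed after {elapsed:.3f}s ({exc!r}); retrying in {delay:.2f}s")
            sleep(delay)
    raise AssertionError("unreachable")
```

In a voice assistant, the final failure is a good place to speak a short fallback ("Sorry, I'm having trouble right now") instead of going silent.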

Simplified Deployment and Scalability

Deploying and scaling your voice assistant is made effortless:

- Easy deployment to cloud platforms: Deploy your voice assistant to major cloud platforms with ease, utilizing their scalability and reliability.

- Scalable infrastructure to handle growing user bases: OpenAI's infrastructure is designed to scale to accommodate increasing user demand, ensuring that your voice assistant can handle a large number of concurrent users.
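Even with scalable infrastructure behind the API, each account is subject to rate limits, so a busy assistant usually bounds how many requests it sends at once. This sketch caps in-flight jobs with an `asyncio.Semaphore` and uses `AsyncOpenAI` from the `openai` package for transcription; the helper names and the limit of 5 are illustrative assumptions.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def run_bounded(jobs: List[Callable[[], Awaitable[T]]], limit: int) -> List[T]:
    """Run async jobs with at most `limit` in flight; results keep input order."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(job: Callable[[], Awaitable[T]]) -> T:
        async with semaphore:
            return await job()

    return list(await asyncio.gather(*(guarded(job) for job in jobs)))


async def transcribe_many(paths: List[str], limit: int = 5) -> List[str]:
    """Transcribe several files concurrently (requires the `openai` package)."""
    from openai import AsyncOpenAI  # deferred import: only needed for live calls

    client = AsyncOpenAI()

    def make_job(path: str) -> Callable[[], Awaitable[str]]:
        # A factory binds `path` per job, avoiding late binding in a loop.
        async def job() -> str:
            with open(path, "rb") as f:
                result = await client.audio.transcriptions.create(model="whisper-1", file=f)
            return result.text

        return job

    return await run_bounded([make_job(p) for p in paths], limit)
```

Calling `asyncio.run(transcribe_many(["a.wav", "b.wav"]))` would transcribe both files with no more than five requests outstanding; tuning `limit` to your account's rate limits keeps bursts from turning into errors.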

Conclusion

OpenAI's 2024 announcements significantly lower the barrier to entry for building voice assistants. The simplified APIs, enhanced tools, and improved resources empower developers of all skill levels to create innovative and user-friendly voice experiences. Don't miss out on this opportunity to revolutionize how users interact with technology. Start building your voice assistant today with OpenAI's cutting-edge tools and unlock the potential of voice interaction!

Featured Posts

-

Removing Your Personal Information Online A Practical Approach

Apr 23, 2025

Removing Your Personal Information Online A Practical Approach

Apr 23, 2025 -

Trumps Fda And Biotech A Positive Outlook

Apr 23, 2025

Trumps Fda And Biotech A Positive Outlook

Apr 23, 2025 -

Uskrs I Uskrsni Ponedjeljak Popis Trgovina S Radnim Vremenom

Apr 23, 2025

Uskrs I Uskrsni Ponedjeljak Popis Trgovina S Radnim Vremenom

Apr 23, 2025 -

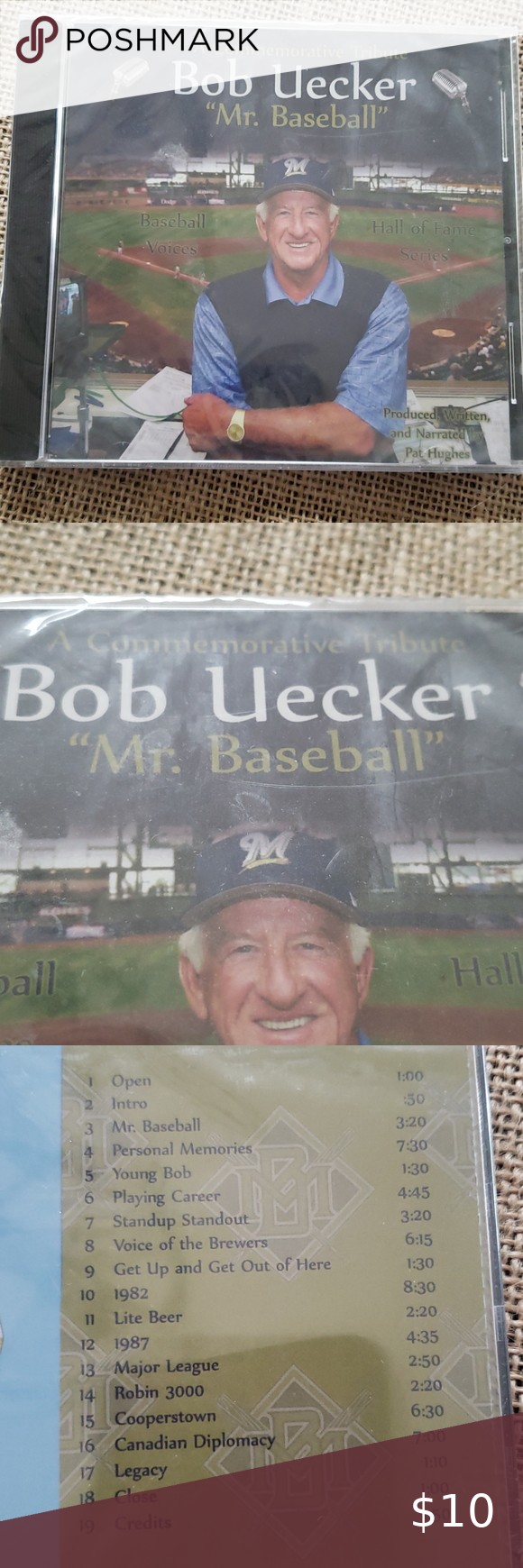

Provus Moving Tribute To Baseball Legend Bob Uecker

Apr 23, 2025

Provus Moving Tribute To Baseball Legend Bob Uecker

Apr 23, 2025 -

Mlb Player Props Expert Predictions For The Jazz Vs Steeltown Game

Apr 23, 2025

Mlb Player Props Expert Predictions For The Jazz Vs Steeltown Game

Apr 23, 2025