Building Voice Assistants Made Easy: OpenAI's Latest Tools

Leveraging OpenAI's Powerful Language Models for Natural Language Understanding (NLU)

Building robust voice assistants hinges on effective Natural Language Understanding (NLU). Two of OpenAI's models cover complementary parts of this problem: Whisper converts speech to text, and GPT-3 interprets the meaning of the resulting text. Used together, they remove much of the complexity of building an NLU pipeline.

- Improved accuracy in transcribing speech, even in noisy environments: Whisper was trained on a large and varied audio corpus, so it transcribes speech accurately even with background noise or varying accents. This robustness is crucial for voice assistants that must work in real-world conditions, and it can reduce the need for extensive noise-cancellation preprocessing.

- Enhanced ability to interpret user intent and context: Beyond simple transcription, language models such as GPT-3 can interpret what the user means. They can discern intent, identify entities (such as names, dates, and locations), and track the context of a conversation, which leads to more natural and helpful interactions.

- Simplified integration with existing voice assistant frameworks: OpenAI's models are exposed through standard HTTP APIs, so they can be called from assistants built with frameworks such as Dialogflow, Amazon Lex, and Rasa, for example from a webhook or custom action. Developers can add these language models to existing projects without rebuilding them.

- Reduced need for extensive custom training data: Pre-trained models like GPT-3 and Whisper come with a wealth of knowledge already embedded, significantly reducing the amount of custom training data required. This translates to substantial cost and time savings, accelerating the development lifecycle.

Compared with building custom models from scratch, pre-trained models such as GPT-3 and Whisper cut both cost and development time. This makes building voice assistants accessible to a much wider range of developers.
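As a rough illustration of intent and entity extraction, the sketch below asks a chat model to label a transcribed utterance and parses the JSON reply. The model name `gpt-4o-mini`, the prompt wording, the intent labels, and the helper names are assumptions made for this example, not an official recipe. The client is passed in as a parameter and is assumed to be an `OpenAI` instance from version 1.0 or later of the `openai` package.

```python
import json

# Hypothetical intent labels for this sketch; a real assistant would define its own.
INTENTS = ["set_timer", "get_weather", "play_music", "unknown"]

SYSTEM_PROMPT = (
    "You label voice assistant requests. Reply with a JSON object that has "
    "an 'intent' key (one of: " + ", ".join(INTENTS) + ") and an 'entities' "
    "key mapping entity names to values."
)


def build_messages(utterance):
    """Build the chat messages for one transcribed utterance."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": utterance},
    ]


def parse_reply(reply_text):
    """Parse the model's JSON reply, falling back to 'unknown' on bad output."""
    fallback = {"intent": "unknown", "entities": {}}
    try:
        data = json.loads(reply_text)
    except (json.JSONDecodeError, TypeError):
        return fallback
    if not isinstance(data, dict):
        return fallback
    intent = data.get("intent")
    if intent not in INTENTS:
        intent = "unknown"
    entities = data.get("entities")
    if not isinstance(entities, dict):
        entities = {}
    return {"intent": intent, "entities": entities}


def extract_intent(client, utterance, model="gpt-4o-mini"):
    """Ask a chat model for the intent and entities of one utterance."""
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(utterance),
        response_format={"type": "json_object"},  # request a JSON reply
    )
    return parse_reply(response.choices[0].message.content)
```

Validating the reply against a fixed list of intents keeps unexpected model output from reaching the rest of the assistant.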

Streamlining Voice Assistant Development with OpenAI APIs

OpenAI provides a suite of powerful APIs that simplify the integration of speech recognition, natural language processing (NLP), and text-to-speech functionalities into your voice assistant projects. These APIs offer a streamlined and efficient approach to development, abstracting away much of the underlying complexity.

- Easy-to-use API documentation and examples: OpenAI offers comprehensive documentation and numerous code examples, making it easy to get started, even for developers new to these technologies. This reduces the learning curve and allows for quicker implementation.

- Support for multiple programming languages: OpenAI maintains official client libraries for Python and JavaScript, and the underlying HTTP API can be called from any language that can send web requests. This gives developers flexibility in their choice of development environment.

- Scalable infrastructure to handle high volumes of requests: OpenAI's infrastructure is designed to handle large volumes of requests, so your voice assistant can grow with user demand. Usage is subject to rate limits, so plan for them when serving a large user base.

- Integration with popular development platforms and frameworks: Because the APIs are plain HTTP endpoints with official client libraries, they fit into most web, mobile, and backend stacks with little glue code, which reduces development time.
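On the client side, high request volumes can still hit rate limits or transient failures. Below is a minimal sketch of retrying a request with exponential backoff. The function name `call_with_backoff` and its default parameters are illustrative assumptions, and the official client library also has its own retry settings that may be enough on their own.

```python
import random
import time


def call_with_backoff(request, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call request() and retry failures with exponentially growing delays."""
    for attempt in range(max_attempts):
        try:
            return request()
        except Exception:
            # Sketch only: production code should catch just the retryable
            # errors (for example a rate-limit error) instead of Exception.
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            # The delay doubles on each attempt; random jitter spreads out
            # clients that would otherwise retry at the same moment.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

The `sleep` parameter exists so the waiting behavior can be replaced in tests; in normal use it can be left at its default.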

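Here's a simple Python code snippet demonstrating API usage for transcribing audio using Whisper (written for version 1.0 or later of the openai package):

from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

# Open the audio in binary mode; the with block closes the file afterwards.
with open("audio.mp3", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(model="whisper-1", file=audio_file)

print(transcript.text)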

As this example shows, a complete transcription request takes only a few lines of code.
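To show how speech recognition, language processing, and text-to-speech might fit together, here is a minimal sketch of one assistant turn. It assumes version 1.0 or later of the `openai` Python package. The model names `gpt-4o-mini` and `tts-1`, the voice `alloy`, the file names, and the helper functions are all assumptions chosen for illustration. The client is passed in as a parameter, so each step can be swapped out or tested on its own.

```python
def transcribe(client, audio_path, model="whisper-1"):
    """Convert a recorded utterance to text with the transcription endpoint."""
    with open(audio_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text


def reply_to(client, history, user_text, model="gpt-4o-mini"):
    """Append the user's turn to the history and return the assistant's reply."""
    history.append({"role": "user", "content": user_text})
    response = client.chat.completions.create(model=model, messages=history)
    answer = response.choices[0].message.content
    # Keep the reply in the history so later turns can use the context.
    history.append({"role": "assistant", "content": answer})
    return answer


def speak(client, text, out_path, model="tts-1", voice="alloy"):
    """Synthesize the reply to an audio file with the text-to-speech endpoint."""
    with client.audio.speech.with_streaming_response.create(
        model=model, voice=voice, input=text
    ) as response:
        response.stream_to_file(out_path)


def handle_turn(client, history, audio_path, out_path):
    """One assistant turn: speech in, text reply, speech out."""
    user_text = transcribe(client, audio_path)
    answer = reply_to(client, history, user_text)
    speak(client, answer, out_path)
    return answer
```

In use, `client` would be an `OpenAI()` instance and `history` a list that starts with a system message describing the assistant. Calling `handle_turn(client, history, "audio.mp3", "reply.mp3")` would then process one recorded utterance and write the spoken reply to `reply.mp3`.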

Building Personalized Voice Assistant Experiences with OpenAI's Customization Options

While pre-trained models offer a strong foundation, OpenAI also provides options for customization, allowing developers to tailor their voice assistants to create unique and personalized experiences.

- Fine-tuning pre-trained models with custom datasets: You can fine-tune pre-trained models using your own datasets to enhance their performance on specific tasks or domains relevant to your voice assistant. This allows for greater accuracy and relevance in responses.

- Training models on specific domains or tasks: Focus training on a specific domain, such as medical advice or financial planning, to build a voice assistant with specialized knowledge. This allows for building highly specialized voice assistants catering to niche needs.

- Creating personalized responses and interactions: Customize the voice assistant's responses based on user preferences and past interactions, creating a more engaging and natural experience.

- Adapting the voice assistant's personality and tone: Use a system prompt or fine-tuning to give the model a specific personality or tone (e.g., formal, informal, humorous), further personalizing the interaction. This creates a distinct and memorable user experience.
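Fine-tuning data for chat models is supplied as JSON Lines, with one example conversation per line. The sketch below prepares such a file for a hypothetical kitchen-assistant persona and shows how a job could be started. The persona, the example dialogues, and the helper names are made up for illustration, and `start_fine_tune` assumes an `OpenAI` client from version 1.0 or later of the `openai` package plus a base model that supports fine-tuning.

```python
import json

# Hypothetical persona; the system message carries the desired tone.
PERSONA = "You are Basil, a cheerful kitchen assistant who answers briefly."

# Made-up example exchanges; a real dataset would need many more.
EXAMPLES = [
    ("What can I make with leftover rice?",
     "Fried rice is a great choice. Want a quick recipe?"),
    ("Set a timer for the pasta.",
     "Happy to! How many minutes should I set?"),
]


def to_training_record(user_text, assistant_text, persona=PERSONA):
    """Wrap one exchange in the chat format used for fine-tuning data."""
    return {"messages": [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_text},
        {"role": "assistant", "content": assistant_text},
    ]}


def to_jsonl(pairs):
    """Serialize the exchanges as JSON Lines text, one record per line."""
    return "\n".join(json.dumps(to_training_record(u, a)) for u, a in pairs) + "\n"


def start_fine_tune(client, jsonl_path, base_model):
    """Upload the training file and start a fine-tuning job."""
    with open(jsonl_path, "rb") as training_file:
        uploaded = client.files.create(file=training_file, purpose="fine-tune")
    return client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)
```

Writing the output of `to_jsonl(EXAMPLES)` to a file gives `start_fine_tune` something to upload. Repeating the persona in every record is one way to teach the model a consistent tone.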

OpenAI's Contribution to Voice Assistant Security and Privacy

Data privacy and security are paramount concerns when building voice assistants, because voice data often contains personal information. OpenAI describes the following measures for protecting data sent to its services:

- Data encryption and secure storage: Data is encrypted both in transit and at rest, which protects confidentiality and guards against unauthorized access.

- Compliance with relevant data privacy regulations: OpenAI states that it supports compliance with regulations such as GDPR and CCPA.

- Measures to prevent unauthorized access and misuse: Access controls and monitoring limit who can reach user data and help detect misuse.

- Transparent data usage policies: OpenAI publishes policies outlining how user data is collected, used, and protected.

Conclusion

OpenAI's latest tools are changing the way we build voice assistants. By providing powerful language models, user-friendly APIs, and extensive customization options, they have significantly lowered the barrier to entry for developers of all levels. The ability to leverage pre-trained models, streamline integration, and create personalized experiences makes building voice assistants more accessible and efficient than before. Start exploring OpenAI's resources today and begin building your own voice-enabled applications.

Featured Posts

-

Lionesses Vs Spain Where To Watch Kick Off Time And Tv Channel Information

May 02, 2025

Lionesses Vs Spain Where To Watch Kick Off Time And Tv Channel Information

May 02, 2025 -

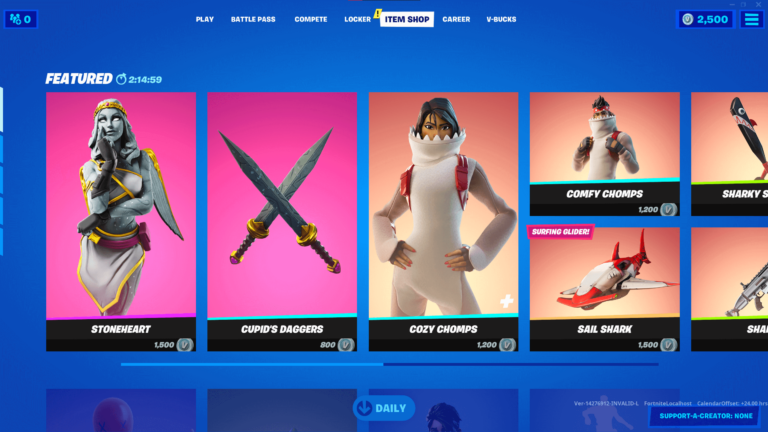

Fortnite Shop Update Fuels Player Anger And Frustration

May 02, 2025

Fortnite Shop Update Fuels Player Anger And Frustration

May 02, 2025 -

Secret Service Investigation Conclusion On White House Cocaine Incident

May 02, 2025

Secret Service Investigation Conclusion On White House Cocaine Incident

May 02, 2025 -

Alasylt Alshayet Hwl Blay Styshn 6

May 02, 2025

Alasylt Alshayet Hwl Blay Styshn 6

May 02, 2025 -

Hollywood Actress Priscilla Pointer Dies At 100 A Legacy Remembered

May 02, 2025

Hollywood Actress Priscilla Pointer Dies At 100 A Legacy Remembered

May 02, 2025