Do Algorithms Contribute To Mass Shooter Radicalization? A Critical Analysis

H2: The Role of Algorithm-Driven Recommendation Systems

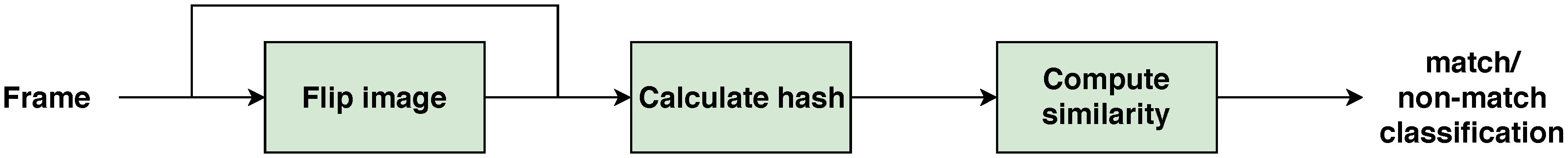

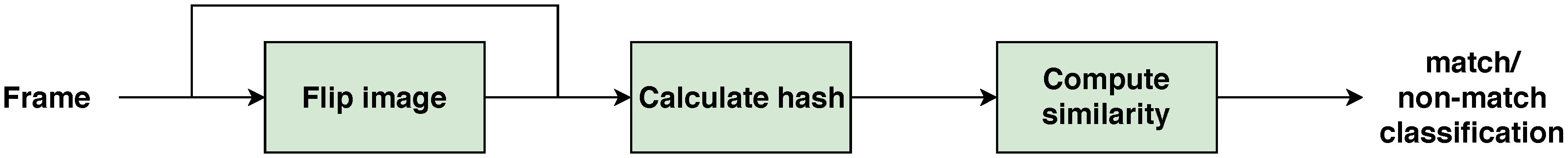

Algorithms power the content we see on social media platforms and search engines. These systems, designed to keep users engaged, can inadvertently contribute to the radicalization process.

H3: Echo Chambers and Filter Bubbles

Recommendation algorithms often create echo chambers and filter bubbles. These reinforce existing beliefs by predominantly showing users content aligning with their past interactions. This means someone exposed to extremist viewpoints might receive a constant stream of similar content, deepening their radicalization.

- YouTube's recommendation system has been criticized for suggesting extremist videos, creating a pathway to increasingly radical content.

- Facebook's newsfeed algorithm, while designed for personalization, can inadvertently amplify extremist groups and narratives by prioritizing engagement metrics.

- Twitter's trending topics and suggested accounts can expose users to extremist viewpoints, especially if their existing network already exhibits such tendencies.

- This algorithmic amplification of extreme views plays directly into confirmation bias, where individuals seek out information confirming their existing beliefs, further solidifying radicalization.
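The feedback loop described above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not a description of how any real platform works: the `next_feed` ranker, the `sharpening` parameter, and the topic names are all hypothetical, and the model assumes the user engages with the feed exactly in proportion to what is shown.

```python
def next_feed(shares: dict[str, float], sharpening: float = 2.0) -> dict[str, float]:
    """Return the topic mix of the next feed.

    Hypothetical ranker: each topic's score is its previous share raised to
    `sharpening`, so values above 1 over-reward topics that are already popular.
    """
    scores = {topic: share ** sharpening for topic, share in shares.items()}
    total = sum(scores.values())
    return {topic: score / total for topic, score in scores.items()}


def simulate(initial: dict[str, float], rounds: int = 5,
             sharpening: float = 2.0) -> list[dict[str, float]]:
    """Apply the ranker repeatedly, assuming the user engages with the feed as shown."""
    history = []
    current = dict(initial)
    for _ in range(rounds):
        current = next_feed(current, sharpening)
        history.append(current)
    return history


if __name__ == "__main__":
    # Invented starting mix with a slight lean toward one topic.
    start = {"cooking": 0.30, "sports": 0.30, "politics": 0.40}
    for round_number, mix in enumerate(simulate(start), start=1):
        print(round_number, {topic: round(share, 3) for topic, share in mix.items()})
```

In this toy setup a modest initial lean toward one topic grows each round until that topic dominates the feed. Real recommendation systems are far more complex, so the sketch only shows why a self-reinforcing loop is plausible, not how strong it is in practice.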

H3: Targeted Advertising and Extremist Propaganda

Ad-targeting systems can also be misused to reach individuals with extremist propaganda. Because these systems select audiences based on browsing history and online behavior, they can deliver closely tailored messages promoting hate speech, conspiracy theories, or violence to the people most likely to respond.

- The ethical implications of targeted advertising in this context are immense, raising serious concerns about manipulation and the exploitation of vulnerabilities.

- Regulating such advertising is challenging, requiring complex legislation and international cooperation to combat cross-border dissemination of extremist material.

- The fine line between free speech and the promotion of harmful content is a central challenge in addressing this issue.

H2: The Spread of Misinformation and Conspiracy Theories

Algorithms prioritize engagement, often favoring sensational and emotionally charged content. This unintentionally accelerates the spread of misinformation and conspiracy theories, which can fuel extremist ideologies.
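To make the ranking effect concrete, here is a small sketch that compares a pure engagement ordering with one hypothetical alternative that blends in a source-reliability score. Everything in it is an assumption for illustration: the `Post` fields, the scores, and the `weight` parameter are invented and do not reflect any platform's actual ranking formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    predicted_engagement: float  # hypothetical model output in [0, 1]
    reliability: float           # hypothetical source-quality score in [0, 1]


def rank_by_engagement(posts: list[Post]) -> list[Post]:
    """Order posts by predicted engagement alone, highest first."""
    return sorted(posts, key=lambda post: post.predicted_engagement, reverse=True)


def rank_with_reliability(posts: list[Post], weight: float = 0.5) -> list[Post]:
    """Order posts by a blend of engagement and reliability.

    `weight` is the share of the score given to reliability (an assumed knob).
    """
    def blended(post: Post) -> float:
        return (1 - weight) * post.predicted_engagement + weight * post.reliability

    return sorted(posts, key=blended, reverse=True)


if __name__ == "__main__":
    # Invented example data.
    posts = [
        Post("Measured report", predicted_engagement=0.30, reliability=0.90),
        Post("Shocking unverified claim", predicted_engagement=0.80, reliability=0.10),
        Post("Local update", predicted_engagement=0.20, reliability=0.80),
    ]
    print([post.title for post in rank_by_engagement(posts)])
    print([post.title for post in rank_with_reliability(posts)])
```

With these invented numbers, the engagement-only ordering puts the sensational post first, while the blended ordering moves it to the bottom. The design point is that whatever signal the ranking optimizes decides which content rises to the top.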

H3: Algorithmic Amplification of False Narratives

Because engagement-optimized ranking tends to favor sensationalist content, false narratives and conspiracy theories linked to mass shootings can spread rapidly and widely, often faster than corrections do.

- The rapid spread of conspiracy theories through social media, amplified by algorithms, has been observed in numerous cases related to mass violence. These theories can dehumanize victims and justify violence in the minds of susceptible individuals.

- Combating misinformation online requires a multi-pronged approach, including fact-checking initiatives, media literacy education, and platform accountability.

H3: Online Communities and Extremist Networks

Algorithms contribute to the formation and growth of online communities that foster extremist ideologies. These online spaces provide platforms for individuals to connect, radicalize each other, and plan acts of violence.

- Many online forums and groups promote extremist views, acting as incubators for radicalization. The ease with which these communities can be discovered and joined through algorithm-driven suggestions significantly contributes to their spread.

- Identifying and addressing these communities presents a significant challenge, balancing the protection of free speech with the prevention of violence.

H2: Counterarguments and Limitations

It's crucial to acknowledge that algorithms are not the sole cause of radicalization. Other factors contribute significantly.

H3: Individual Responsibility and Pre-existing Vulnerabilities

Individual factors, including mental health issues, personal experiences, and pre-existing vulnerabilities, play a significant role in radicalization. Algorithms are merely one element in a complex equation.

- Pre-existing biases and psychological vulnerabilities can make individuals more susceptible to extremist propaganda, regardless of algorithmic influence.

- Addressing mental health issues and providing support systems are crucial to preventing radicalization.

H3: The Difficulty of Causality

Establishing a direct causal link between exposure to algorithmically recommended content and mass shooter radicalization is extremely challenging.

- Human behavior is complex, influenced by a multitude of factors beyond algorithmic exposure.

- More robust research methodologies are needed to better understand the complex interplay between algorithms, online behavior, and acts of violence.
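The causality problem can be illustrated with a small simulation of confounding. This is a sketch with invented probabilities, not empirical estimates: a hypothetical pre-existing trait raises both the chance of heavy exposure and the chance of an abstract outcome, while exposure itself has no effect on the outcome in the model.

```python
import random


def simulate_confounding(n: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """Return the outcome rate among exposed and unexposed simulated individuals.

    All probabilities are made up for illustration. The outcome depends only
    on the pre-existing trait, never on exposure.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    exposed_outcomes: list[bool] = []
    unexposed_outcomes: list[bool] = []
    for _ in range(n):
        has_trait = rng.random() < 0.2
        # The trait makes heavy exposure more likely.
        exposed = rng.random() < (0.7 if has_trait else 0.2)
        # The outcome ignores exposure entirely.
        outcome = rng.random() < (0.3 if has_trait else 0.05)
        (exposed_outcomes if exposed else unexposed_outcomes).append(outcome)
    exposed_rate = sum(exposed_outcomes) / len(exposed_outcomes)
    unexposed_rate = sum(unexposed_outcomes) / len(unexposed_outcomes)
    return exposed_rate, unexposed_rate


if __name__ == "__main__":
    exposed_rate, unexposed_rate = simulate_confounding()
    print(f"outcome rate, exposed:   {exposed_rate:.3f}")
    print(f"outcome rate, unexposed: {unexposed_rate:.3f}")
```

Even though exposure causes nothing in this model, the exposed group shows a noticeably higher outcome rate because it contains more individuals with the underlying trait. Observational data alone cannot separate the two explanations, which is why stronger research designs are needed.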

H2: Potential Solutions and Mitigation Strategies

Addressing the issue requires a multifaceted approach.

H3: Algorithmic Transparency and Accountability

Greater transparency in how algorithms function is vital, along with holding social media companies accountable for the content they promote.

- Regulatory frameworks and industry self-regulation initiatives are needed to ensure greater accountability for the algorithms that shape online experiences.

H3: Media Literacy and Critical Thinking Education

Equipping individuals with media literacy and critical thinking skills is crucial for identifying and resisting manipulative content.

- Investing in educational programs that teach these skills would make the public more resilient and less susceptible to online manipulation.

H3: Improved Content Moderation and Detection

Advancements in content moderation technologies are needed to more effectively identify and remove extremist content.

- Balancing free speech principles with the urgent need to prevent violence remains a critical challenge in content moderation.

H2: Conclusion

The relationship between algorithms and mass shooter radicalization is complex. While algorithms can amplify extremist viewpoints and facilitate the spread of misinformation, they are not the sole driver of radicalization. Individual responsibility, pre-existing vulnerabilities, and societal factors also play critical roles. Addressing the issue effectively means improving algorithmic transparency, promoting media literacy, enhancing content moderation, and tackling the broader societal factors that contribute to extremism. It also calls for continued public dialogue, policy changes, and support for initiatives that promote a safer online environment.
