Is AI Therapy A Surveillance Tool In A Police State? Exploring The Risks

Data Privacy Concerns in AI-Powered Mental Health Platforms

AI therapy platforms collect vast amounts of personal and sensitive data, which raises significant privacy concerns in the context of a potential police state. Because these platforms are designed to understand our innermost thoughts and feelings, they create fertile ground for misuse.

Data Collection and Storage Practices

AI therapy platforms gather extensive data, including:

- Conversations: Detailed transcripts of therapy sessions, revealing intimate details about an individual's life, relationships, and beliefs.

- Emotional Responses: Analysis of vocal tone, facial expressions, and other biometric data to gauge emotional states.

- Personal Details: Demographic information, medical history, and other personal identifiers.

The lack of transparency in how this data is used, coupled with the potential for data breaches, hacking, and insufficient data encryption, creates significant vulnerabilities. These vulnerabilities are amplified in scenarios where data protection laws are weak or unenforced, a common characteristic of police states.

Third-Party Access and Data Sharing

Concerns extend beyond the platform itself. Sharing data with third parties, such as law enforcement agencies, insurance companies, or marketing firms, poses a significant risk to patient confidentiality. When such sharing occurs without informed consent, it erodes the trust on which effective mental healthcare depends. The data could also be used for profiling and discrimination, targeting individuals based on their expressed thoughts and feelings.

The Role of Algorithms and Bias

Algorithms powering AI therapy platforms are not immune to bias. Biases embedded in these algorithms can lead to inaccurate or unfair assessments and treatments, disproportionately impacting marginalized communities. Lack of diversity in algorithm development teams contributes to this problem, perpetuating existing societal inequalities. In a police state context, biased algorithms could be weaponized to target specific groups.

The Potential for AI Therapy to Become a Tool of Repression in Authoritarian Regimes

The surveillance capabilities of AI therapy platforms are particularly concerning in authoritarian regimes. The intimate data collected could be used to identify and target dissidents or individuals deemed "at risk."

Monitoring and Surveillance Capabilities

AI therapy platforms could be used to:

- Identify individuals expressing dissenting opinions: Sentiment analysis of therapy sessions could flag individuals expressing opposition to the government.

- Flag individuals for mental health issues based on their communications: Individuals expressing anxieties or frustrations could be wrongly labeled as unstable or a threat.

- Use sentiment analysis to identify potential threats: Subjective interpretations of emotional data could lead to wrongful accusations and persecution.
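To see why sentiment-based flagging is so prone to wrongful labeling, consider a deliberately naive sketch. The word list, the threshold, and the function names below are hypothetical and are not taken from any real platform. The sketch only illustrates that a lexicon-based score cannot tell ordinary distress apart from a supposed "threat."

```python
# Hypothetical toy example: a lexicon-based "risk" score of the kind a
# crude monitoring system might apply to session transcripts.
import re

# Assumed word list; a real system would use a larger lexicon or a trained model.
NEGATIVE_WORDS = {"angry", "hopeless", "unfair", "afraid", "hate", "protest"}


def negative_score(text: str) -> float:
    """Return the fraction of words in `text` that appear in NEGATIVE_WORDS."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in NEGATIVE_WORDS)
    return hits / len(words)


def is_flagged(text: str, threshold: float = 0.15) -> bool:
    """Flag text whose score meets an arbitrary (assumed) threshold."""
    return negative_score(text) >= threshold


# A person describing ordinary work stress in a therapy session.
work_stress = "I feel angry and hopeless because my boss is unfair"
# A neutral statement.
neutral = "I went for a walk and cooked dinner with my sister"

print(is_flagged(work_stress))  # True: 3 of 10 words match the list
print(is_flagged(neutral))      # False: no words match the list
```

The first statement has nothing to do with politics or danger, yet it is flagged. Where such a flag triggers official attention, the choice of word list and threshold becomes a decision about who gets targeted.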

Manipulation and Control through AI-Driven Interventions

AI therapy could be manipulated to subtly influence individuals' thoughts and behaviors. Suggestive prompts or feedback could reinforce pro-government narratives while suppressing dissent. This algorithmic intervention could be incredibly insidious and difficult to detect.

Lack of Accountability and Oversight

Limited regulation and oversight of AI therapy platforms exacerbate the risk of misuse by authorities. The absence of clear guidelines on data usage and access, coupled with the difficulty of enforcing existing regulations, calls for stronger international cooperation on AI ethics.

Mitigating the Risks: Safeguarding AI Therapy from Surveillance Abuse

Mitigating the risks associated with AI therapy requires a multi-pronged approach focusing on data privacy, transparency, and ethical guidelines.

Enhancing Data Privacy and Security Protocols

Strengthening data protection requires:

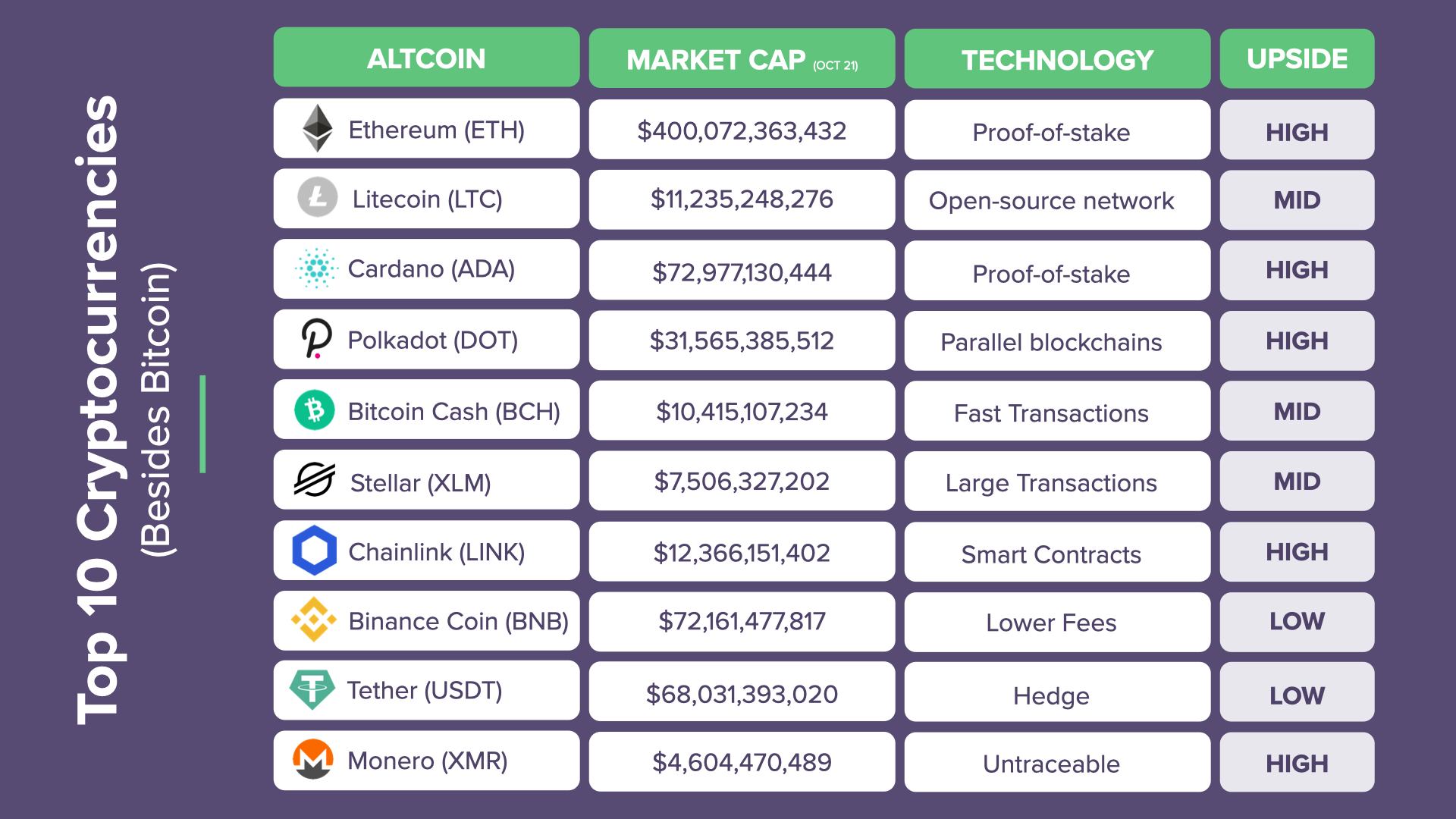

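- Implementation of blockchain technology for secure data storage: A decentralized, append-only ledger can make records tamper-evident and access auditable, although its immutability must be reconciled with users' right to delete their data.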

- Development of robust data breach response plans: Proactive measures to minimize damage in case of breaches.

- Independent audits of AI therapy platforms: Regular audits to ensure compliance with data protection standards.
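The tamper-evidence that blockchain-based storage relies on, and that independent audits can check, comes from chaining hashes. The sketch below is a minimal, single-machine illustration of that idea applied to an access log. It is not a blockchain, and the record fields and function names are assumptions made for the example.

```python
# Minimal hash-chained access log: each entry commits to the previous one,
# so editing an earlier record invalidates the stored hashes.
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record, linking it to the current end of the chain."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": entry_hash(prev_hash, record),
    })


def verify_log(log: list) -> bool:
    """Recompute every hash and check that the chain links are intact."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical access records.
access_log = []
append_entry(access_log, {"actor": "clinician-17", "action": "read", "user": "u-001"})
append_entry(access_log, {"actor": "support-02", "action": "export", "user": "u-001"})
print(verify_log(access_log))  # True

# Quietly rewriting a record breaks verification.
access_log[1]["record"]["action"] = "read"
print(verify_log(access_log))  # False
```

Someone who controls the whole log could still recompute every hash after an edit, which is why real deployments replicate the chain or anchor it with parties outside the operator's control. Only access metadata is chained here. Session content would stay encrypted elsewhere so that it can still be deleted.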

Promoting Transparency and User Control

Users must have greater control over their data:

- Clear and concise privacy policies: Easy-to-understand explanations of data collection and usage practices.

- User-friendly data management tools: Empowering users to access, correct, and delete their personal data.

- The right to access, correct, and delete personal data: Guaranteeing data subject rights.
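In code, the access, correct, and delete rights listed above map onto three small operations. The following sketch uses an in-memory dictionary as a stand-in for a platform's storage. The class, method names, and sample data are hypothetical and do not reflect any particular product's API.

```python
# Hypothetical in-memory store exposing access, correction, and deletion.
from copy import deepcopy


class UserDataStore:
    """Stand-in for a platform's user data storage (illustrative only)."""

    def __init__(self):
        self._records = {}

    def save(self, user_id: str, data: dict) -> None:
        """Store a copy of the data held about a user."""
        self._records[user_id] = deepcopy(data)

    def access(self, user_id: str) -> dict:
        """Right of access: return a copy of everything held about the user."""
        return deepcopy(self._records.get(user_id, {}))

    def correct(self, user_id: str, field: str, value) -> None:
        """Right to correction: overwrite a single field."""
        if user_id not in self._records:
            raise KeyError(f"no data held for {user_id}")
        self._records[user_id][field] = value

    def delete(self, user_id: str) -> bool:
        """Right to deletion: remove the record; True if something was removed."""
        return self._records.pop(user_id, None) is not None


store = UserDataStore()
store.save("u-001", {"email": "old@example.com", "sessions": 3})
store.correct("u-001", "email", "new@example.com")
print(store.access("u-001"))  # {'email': 'new@example.com', 'sessions': 3}
print(store.delete("u-001"))  # True
print(store.access("u-001"))  # {}
```

A production system would also have to propagate deletion to backups, logs, and any derived data, which is where these guarantees are hardest to honor in practice.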

Establishing Ethical Guidelines and Regulations

Strong ethical guidelines are crucial:

- International collaboration on AI ethics: Developing universal standards for responsible AI development and deployment.

- Establishment of independent regulatory bodies: Oversight to enforce ethical guidelines and regulations.

- Development of standardized certification processes for AI therapy platforms: Ensuring platforms meet ethical and security standards.

Conclusion

The potential for AI therapy to be used as a surveillance tool in a police state is a significant concern. Data privacy violations, algorithmic bias, and a lack of accountability are major risks. The future of AI therapy hinges on our collective commitment to responsible innovation. Let's actively participate in shaping a future where AI enhances mental health care without compromising our fundamental rights and freedoms. Let's continue the conversation on mitigating the risks of AI therapy surveillance in a police state, advocating for stronger data protection laws, ethical AI development practices, and increased transparency in the use of AI in mental healthcare.
