Microsoft's Email Filter Blocks "Palestine": Employee Uprising And Censorship Concerns


Employee Outrage and Internal Backlash

The news that Microsoft's internal email system was blocking the word "Palestine," along with related terms, triggered immediate and widespread outrage among employees. Many saw the filter as unacceptable censorship that suppressed their ability to discuss a politically sensitive but important topic. The backlash escalated quickly, with reports of petitions, internal forum discussions, and social media posts expressing deep dissatisfaction and concern.

- Number of employees involved: The exact number remains unclear, though multiple reports suggest significant participation from employees across various departments.

- Specific examples of blocked emails: Anecdotal reports suggest that emails discussing Palestinian human rights, political analysis related to Palestine, and even simple greetings in Arabic were flagged and blocked by the filter.


The intensity of the employee response underscores the deep-seated belief that Microsoft’s actions undermine principles of free speech and create a hostile work environment for employees who identify with or advocate for Palestinian rights. This event demonstrates the potential for internal dissent when corporate policies clash with employees' values.

Censorship Concerns and Freedom of Speech

Microsoft's action raises significant concerns about censorship and its chilling effect on freedom of speech. By automatically blocking the term "Palestine," the company effectively limits its employees' ability to discuss a critical geopolitical issue, which risks stifling political discourse within the company and beyond.

- Legal aspects of censorship in this context: The legal implications of such actions are complex and vary by jurisdiction. However, the potential for legal challenges based on principles of free speech and anti-discrimination laws cannot be ignored.

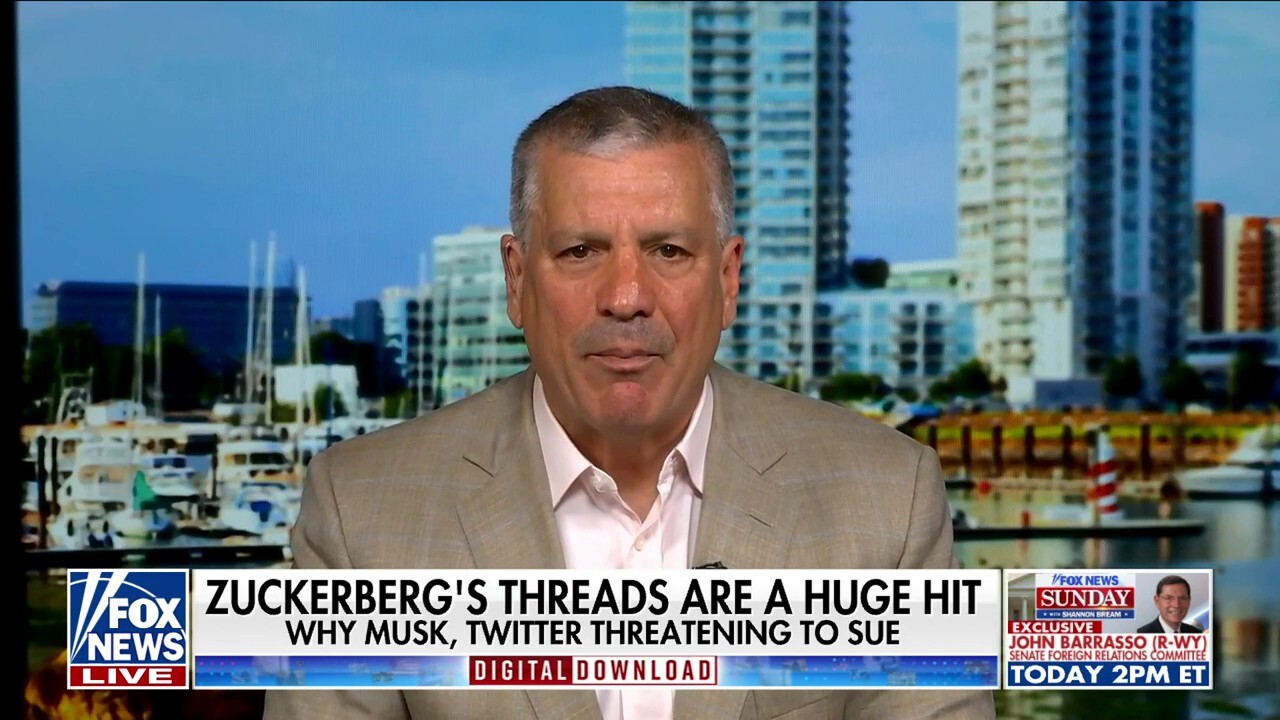

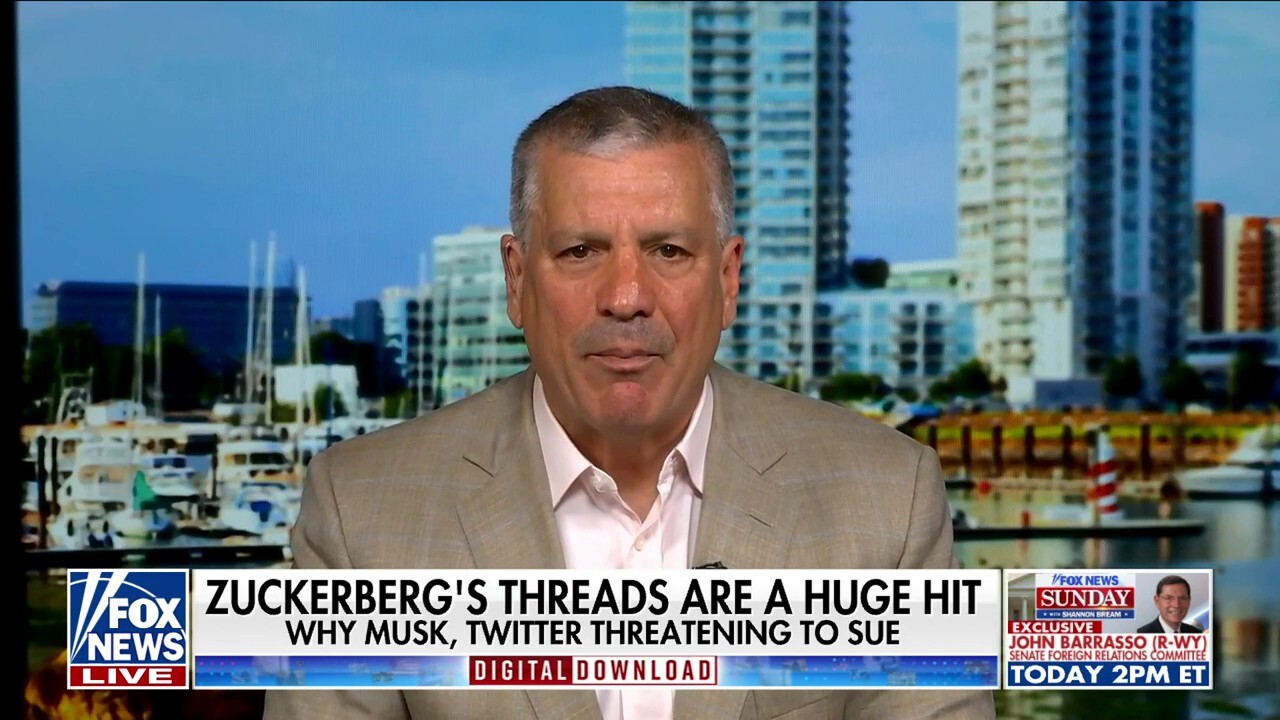

- Comparison with similar cases of tech censorship: This incident echoes other controversies surrounding tech companies' content moderation policies, highlighting the challenges of balancing automated systems with freedom of expression.

- Expert opinions on the ethical implications: Experts in ethics and technology law have widely criticized Microsoft's action, citing its potential to reinforce existing biases and silence marginalized voices.

Algorithmic Bias and Potential Discrimination

The incident strongly suggests the presence of algorithmic bias in Microsoft's email filtering system. Targeting "Palestine" disproportionately compared to other geographic locations or conflict zones raises serious concerns about the fairness and neutrality of the filter, and about its potential for unintended discrimination.

- Examples of potential biases in similar systems: Research has shown that algorithms used for content moderation often reflect and amplify existing societal biases, leading to unfair or discriminatory outcomes.

- Analysis of the filter's algorithm: If details about the algorithm's design and training data were made public, an independent analysis could reveal the specific biases at play.

- Calls for increased transparency and accountability in algorithm design: This incident underscores the urgent need for greater transparency and accountability in the design and deployment of algorithms used for content moderation.

Microsoft's Response and Future Implications

Microsoft's official response to the employee uprising and to concerns about its email filter blocking "Palestine" has been [insert Microsoft's official response here]. [Insert direct quotes from Microsoft's statements.] This response should be assessed critically for its commitment to addressing algorithmic bias and ensuring freedom of expression within the company.

- Actions taken by Microsoft to address the issue: [Describe any actions taken, such as investigations, policy changes, etc.]

- Predictions for future policy changes: The long-term implications for Microsoft's reputation and employee morale depend on the company's commitment to addressing the root causes of this incident. The incident could lead to increased scrutiny of Microsoft's content moderation practices and may necessitate significant changes to its algorithms and internal policies.

Conclusion: Understanding the Impact of Microsoft's Email Filter Blocking "Palestine"

The controversy over Microsoft's email filter blocking the term "Palestine" reveals a critical flaw in automated content moderation: algorithmic bias can stifle free speech and discriminate against particular groups. The employee uprising highlights the ethical concerns at stake and underscores the importance of transparency and accountability in how these systems are built and deployed. The incident, and the accusations of censorship that followed, are a stark reminder that tech companies must prioritize human rights and freedom of expression over algorithmic efficiency. It should prompt all of us to demand greater transparency from tech companies about their content moderation policies and the biases in their algorithms, and to advocate actively for technology that empowers, rather than silences, diverse voices. The future of responsible technology depends on our collective ability to address the challenges raised by cases like this one.

Featured Posts

-

Following Mottas Sacking Ten Hag In The Frame For Juventus

May 23, 2025

Following Mottas Sacking Ten Hag In The Frame For Juventus

May 23, 2025 -

Freddie Flintoffs Car Crash I Wish I D Died He Reveals

May 23, 2025

Freddie Flintoffs Car Crash I Wish I D Died He Reveals

May 23, 2025 -

Netflix Distributie De Oscar Intr Un Nou Serial Captivant

May 23, 2025

Netflix Distributie De Oscar Intr Un Nou Serial Captivant

May 23, 2025 -

Grand Ole Oprys International Debut Royal Albert Hall Date And Performers Announced

May 23, 2025

Grand Ole Oprys International Debut Royal Albert Hall Date And Performers Announced

May 23, 2025 -

Ser Aldhhb Fy Qtr Alywm Alithnyn 24 Mars 2024

May 23, 2025

Ser Aldhhb Fy Qtr Alywm Alithnyn 24 Mars 2024

May 23, 2025

Latest Posts

-

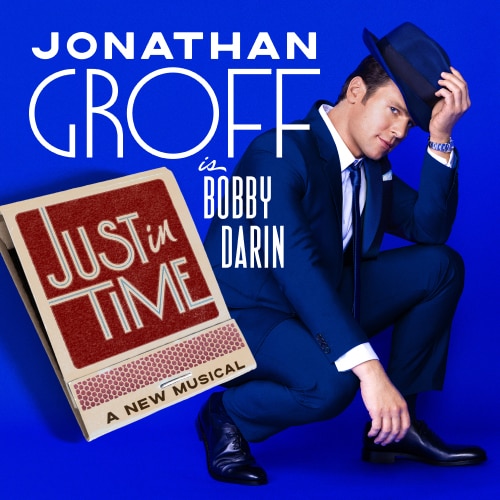

Jonathan Groff And The Tony Awards Little Shop Of Horrors And Its Impact

May 23, 2025

Jonathan Groff And The Tony Awards Little Shop Of Horrors And Its Impact

May 23, 2025 -

Jonathan Groffs Just In Time Photos From The Star Studded Broadway Opening

May 23, 2025

Jonathan Groffs Just In Time Photos From The Star Studded Broadway Opening

May 23, 2025 -

Jonathan Groffs Just In Time Opening A Star Studded Affair

May 23, 2025

Jonathan Groffs Just In Time Opening A Star Studded Affair

May 23, 2025 -

Jonathan Groff Could Little Shop Of Horrors Lead To A Historic Tony Win

May 23, 2025

Jonathan Groff Could Little Shop Of Horrors Lead To A Historic Tony Win

May 23, 2025 -

Jonathan Groffs Just In Time Broadway Show Cast Photos And More

May 23, 2025

Jonathan Groffs Just In Time Broadway Show Cast Photos And More

May 23, 2025