OpenAI Simplifies Voice Assistant Creation: Key Highlights From The 2024 Developer Event

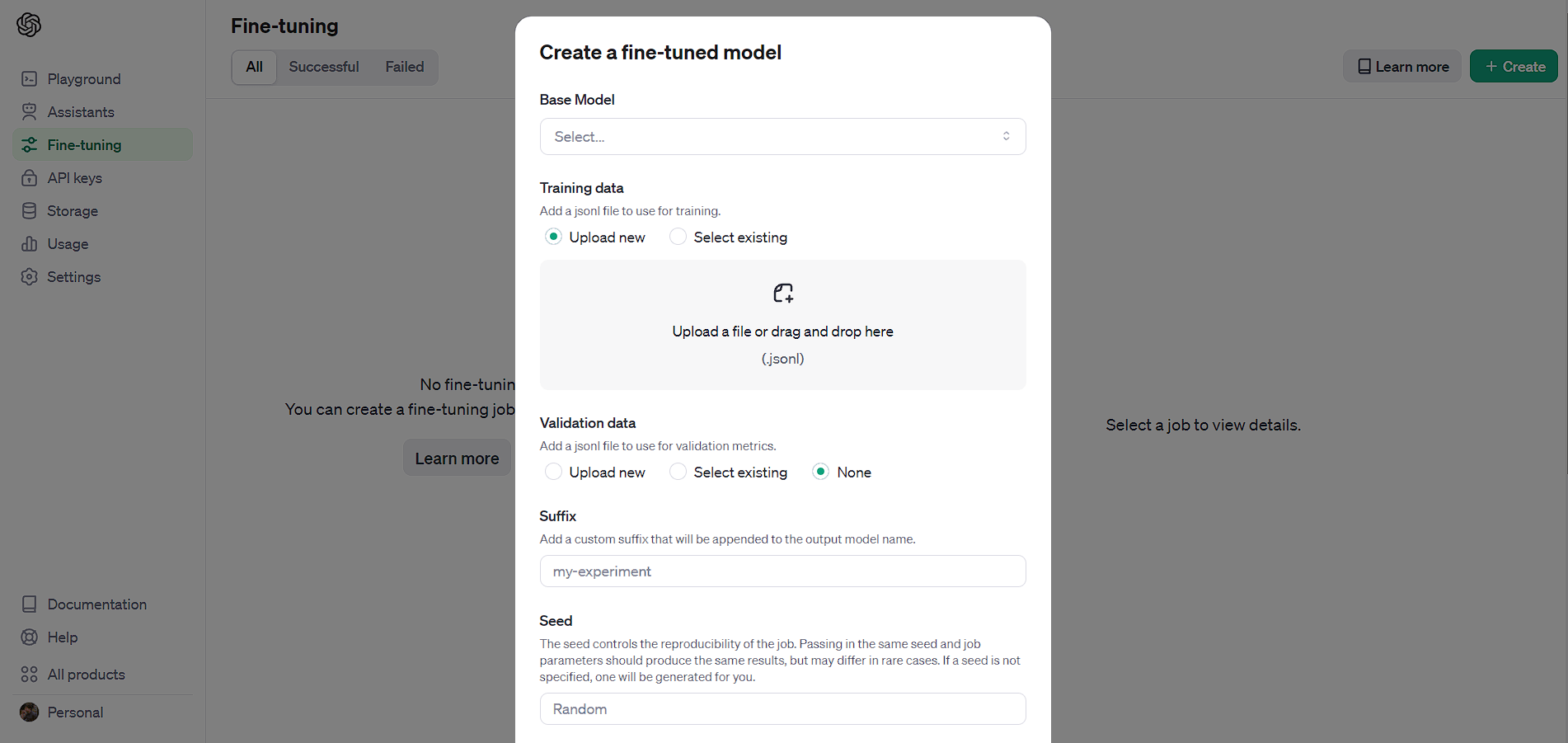

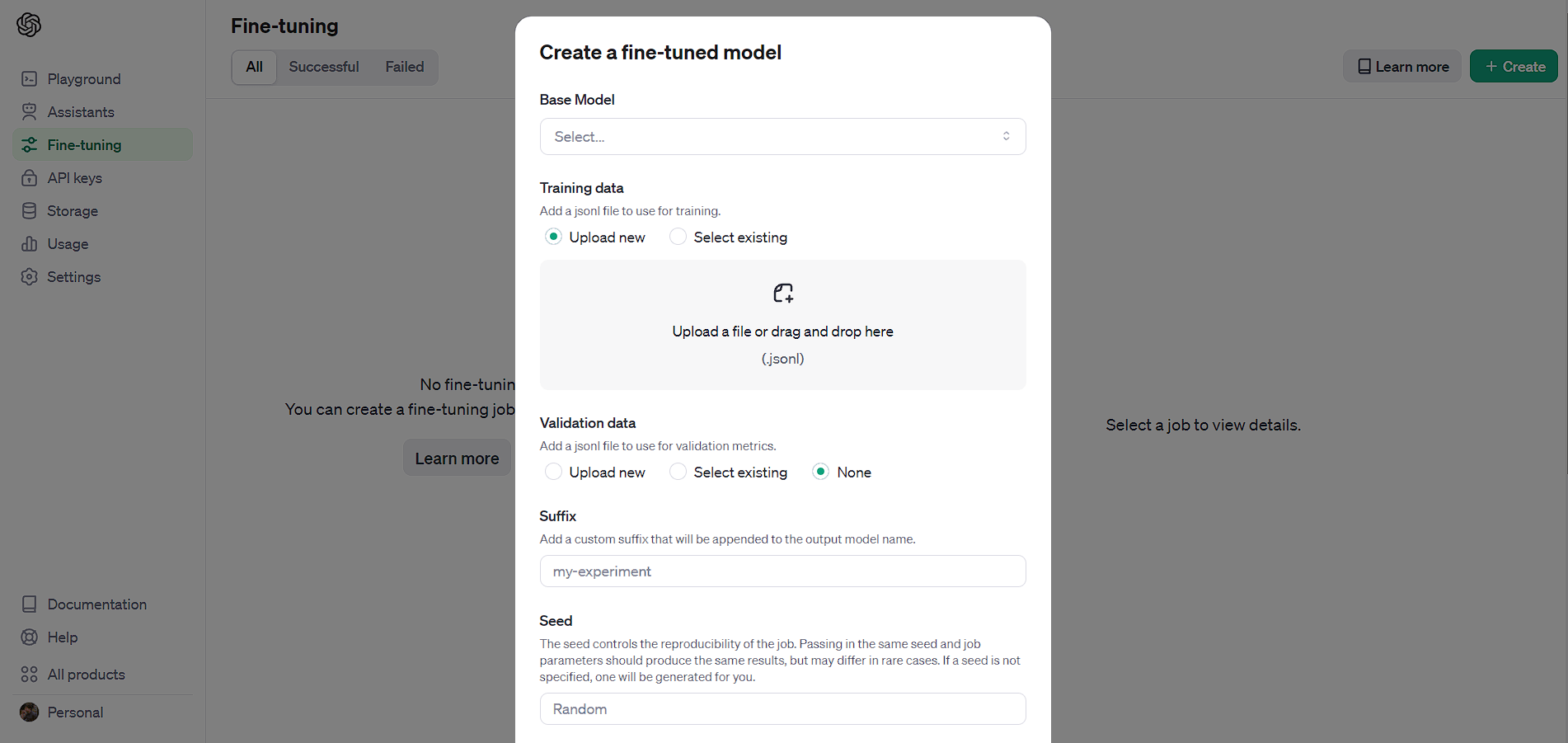

Streamlined Development Process with Pre-trained Models

OpenAI's pre-trained models reduce the time, resources, and expertise needed to build a voice assistant. Because these models are already trained on massive datasets, developers start from a working baseline rather than from scratch.

- Reduced need for extensive datasets: Developers no longer need to collect and annotate vast amounts of data to train their models from scratch. This dramatically reduces development time and costs.

- Faster training times: Pre-trained models drastically shorten the training process, allowing developers to iterate and deploy their voice assistants much faster.

- Improved accuracy out-of-the-box: OpenAI's pre-trained models provide high accuracy right from the start, requiring less fine-tuning for many applications. This is especially beneficial for developers with limited resources or expertise.

- Models referenced at the event: No specific model names were released at the time of the event. OpenAI did hint at significant improvements to its existing NLP models, particularly those optimized for conversational AI and speech recognition, which are the ones most relevant to voice assistant development.

This streamlined process is particularly beneficial for smaller development teams and startups with limited resources, allowing them to compete effectively with larger companies.

Enhanced Natural Language Understanding (NLU) Capabilities

OpenAI's NLU improvements matter for voice assistant development because they make assistants more intuitive and responsive.

- Improved intent recognition: OpenAI's models now exhibit a higher degree of accuracy in identifying the user's intent, even in ambiguous or complex queries.

- Better handling of complex queries: The models can better understand nuanced language, context, and multiple requests within a single utterance.

- Enhanced context awareness: Voice assistants built with OpenAI's technology can maintain context over longer conversations, leading to more natural and fluid interactions.

- Support for multiple languages: OpenAI is expanding its multilingual support, making it easier to build voice assistants accessible to a global audience.

These improvements directly translate to better user experiences, leading to increased user satisfaction and engagement. Users can expect more accurate responses, fewer misunderstandings, and a more natural conversational flow.
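To make the context-awareness point concrete, here is a minimal Python sketch of how an application might carry conversation history from one turn to the next. It is an illustration only: the `ConversationContext` class, its `max_turns` limit, and the role/content message shape are assumptions made for this example, not something presented at the event.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConversationContext:
    """Rolling window of recent turns (hypothetical helper, not an OpenAI API)."""

    max_turns: int = 6  # assumed limit; tune it to your model's context budget
    _turns: deque = field(default_factory=deque)

    def add(self, role: str, content: str) -> None:
        """Record one utterance and drop the oldest once the window is full."""
        self._turns.append({"role": role, "content": content})
        while len(self._turns) > self.max_turns:
            self._turns.popleft()

    def messages(self) -> list[dict]:
        """Return the retained turns, oldest first, ready to send to a model."""
        return list(self._turns)


context = ConversationContext(max_turns=4)
context.add("user", "What's the weather in Berlin?")
context.add("assistant", "It is sunny in Berlin.")
context.add("user", "And tomorrow?")  # only resolvable with the earlier turns
history = context.messages()
```

Passing `history` along with each new request is what lets a follow-up such as "And tomorrow?" be interpreted against the earlier question. A fixed window is the simplest policy; summarizing older turns is a common alternative when conversations run long.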

Improved Speech-to-Text and Text-to-Speech Technologies

OpenAI showcased significant advancements in both speech recognition (speech-to-text) and text-to-speech technologies, crucial components for any effective voice assistant.

- Higher accuracy in noisy environments: The models demonstrate improved robustness against background noise, making them suitable for real-world scenarios.

- Improved natural-sounding speech synthesis: The text-to-speech capabilities have been enhanced to produce more natural and expressive synthetic speech.

- Support for diverse accents and dialects: OpenAI is working towards greater inclusivity, ensuring its models can accurately process and generate speech from various accents and dialects.

- Reduced latency: The response time has been significantly reduced, ensuring a more seamless and immediate interaction for the user.

Together, these advancements make interactions with a voice assistant feel smoother, more intuitive, and more human-like.

Integration with Existing Platforms and Frameworks

OpenAI emphasized the ease of integrating its tools into existing developer workflows.

- API availability and ease of use: Well-documented APIs provide a straightforward way to incorporate OpenAI's capabilities into various applications.

- SDK support for popular development platforms: Support for popular platforms simplifies integration for developers already familiar with specific environments.

- Examples of successful integrations: OpenAI showcased several examples of successful integrations across different platforms and applications.

This seamless integration reduces development time and effort, allowing developers to focus on building the unique features of their voice assistants.
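As a rough picture of what such an integration looks like, the Python sketch below wires the three stages of a voice turn together: speech-to-text, reply generation, and text-to-speech. Every name in it (`handle_utterance`, the `Transcriber`, `Responder`, and `Synthesizer` type aliases, and the `fake_*` stand-ins) is hypothetical and chosen for this example. The stand-ins let the code run offline; in a real application each one would wrap a call to the corresponding API.

```python
from typing import Callable

# Each stage is a plain callable so any vendor SDK can be plugged in.
Transcriber = Callable[[bytes], str]   # audio in, text out
Responder = Callable[[str], str]       # user text in, reply text out
Synthesizer = Callable[[str], bytes]   # reply text in, audio out


def handle_utterance(
    audio: bytes,
    transcribe: Transcriber,
    respond: Responder,
    synthesize: Synthesizer,
) -> tuple[str, str, bytes]:
    """Run one voice turn: speech-to-text, reply generation, text-to-speech."""
    user_text = transcribe(audio)
    reply_text = respond(user_text)
    reply_audio = synthesize(reply_text)
    return user_text, reply_text, reply_audio


# Stand-in stages so the sketch runs offline; replace with real API calls.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


result = handle_utterance(
    b"turn on the lights", fake_transcribe, fake_respond, fake_synthesize
)
```

Keeping the stages behind simple function signatures means the application logic does not change if one provider or model is swapped for another.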

Addressing Ethical Considerations and Bias Mitigation

OpenAI highlighted its commitment to responsible AI development, acknowledging the ethical considerations surrounding voice assistants.

- Bias detection and mitigation techniques: OpenAI is actively developing and implementing techniques to detect and mitigate bias in its models.

- Data privacy and security measures: Robust security measures are in place to protect user data and privacy.

- Transparency in model development and deployment: OpenAI is committed to transparency throughout the model development and deployment process.

The responsible development of voice assistants is crucial to ensure fairness, equity, and user trust. OpenAI's commitment to these ethical considerations is a significant step forward.
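One simple check developers can run themselves is to compare speech recognition accuracy across speaker groups, for example by accent. The Python sketch below computes word error rate (WER, the word-level edit distance divided by the number of reference words) per group. It is an assumption about how a team might audit its own assistant, not a technique OpenAI described, and the group labels and transcripts are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Classic dynamic-programming edit distance, one row at a time.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1] / len(ref)


def wer_by_group(samples):
    """Average WER per group; samples are (group, reference, hypothesis) tuples."""
    totals = {}
    for group, reference, hypothesis in samples:
        totals.setdefault(group, []).append(word_error_rate(reference, hypothesis))
    return {group: sum(rates) / len(rates) for group, rates in totals.items()}


# Made-up transcripts purely for illustration; not real measurements.
samples = [
    ("group_a", "turn on the lights", "turn on the lights"),
    ("group_b", "turn on the lights", "turn on the light"),
]
rates = wer_by_group(samples)
```

A persistent gap between groups on a representative test set is a signal worth investigating before deployment, although WER alone does not explain where the gap comes from.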

Conclusion: Revolutionizing Voice Assistant Development with OpenAI

The 2024 OpenAI developer event showcased advancements that make voice assistants easier to build. Pre-trained models, improved NLU capabilities, enhanced speech technologies, and a focus on ethical considerations simplify the creation process and open it to a wider range of developers. The benefits include faster development times, improved accuracy, better user experiences, and straightforward integration with existing platforms.

Ready to revolutionize your voice assistant development? Explore OpenAI's resources today and unlock the potential of simplified voice assistant creation!
