OpenAI's ChatGPT: The FTC Investigation and the Future of AI Development


The FTC Investigation: Concerns and Allegations

The FTC's investigation into ChatGPT stems from growing concerns about the potential societal impact of powerful AI technologies. The agency is examining whether OpenAI's practices violate consumer protection laws, focusing on several key areas:

- Potential violations of consumer protection laws: The FTC is investigating whether ChatGPT's outputs, particularly inaccurate or misleading information, could constitute unfair or deceptive practices under Section 5 of the FTC Act. This includes potential harm to people who rely on inaccurate information generated by the model.

- Concerns about data privacy and security: The vast amounts of data used to train ChatGPT raise concerns about data privacy and security. The FTC is likely investigating OpenAI's data collection practices, data storage methods, and protection of user information. Whether user data is appropriately anonymized and secured is central to this concern.

- Misinformation and the spread of false content: ChatGPT's ability to generate realistic-sounding but entirely fabricated information poses a significant risk of spreading misinformation and disinformation. The FTC is likely evaluating OpenAI's efforts to reduce the risk of its technology being used to create and disseminate false content, including its methods for detecting and flagging potentially harmful outputs.

- Algorithmic bias and discrimination: Like many AI systems, ChatGPT is trained on massive datasets that may reflect existing societal biases. The FTC's investigation likely includes an examination of whether ChatGPT exhibits bias in its outputs, potentially leading to discriminatory outcomes. Identifying and mitigating algorithmic bias is a crucial aspect of responsible AI development.

- Lack of transparency in data collection and usage: The FTC is likely scrutinizing the transparency of OpenAI's data collection and usage practices. Concerns center on whether users are adequately informed about how their data is collected, used, and protected. Greater transparency is vital for building trust and ensuring compliance with data protection regulations.

Impact on OpenAI and the AI Industry

The FTC investigation carries significant implications for OpenAI and the broader AI industry. Potential consequences for OpenAI could include financial penalties, restrictions on its operations, mandatory changes to its business practices, and damage to its reputation. Beyond OpenAI, the investigation has ripple effects:

- Increased regulatory scrutiny across the AI sector: The FTC's action signals a broader trend toward regulatory oversight of AI technologies. Other companies developing and deploying AI systems should expect closer scrutiny of their practices.

- Pressure on other AI companies to improve their practices: The investigation puts pressure on other AI companies to proactively address concerns about data privacy, algorithmic bias, and the spread of misinformation. This could lead to wider adoption of ethical AI development practices.

- Potential slowing of AI innovation due to increased regulation: Increased regulation, however necessary, could slow the pace of AI innovation. Balancing responsible innovation with regulatory compliance is a crucial challenge for the industry.

- Shift in focus toward ethical AI development and responsible innovation: The investigation is likely to accelerate the industry's focus on ethical AI development, prompting companies to prioritize responsible innovation and build ethical considerations into their processes.

The Future of AI Development in Light of the Investigation

The FTC investigation is likely to reshape the landscape of AI development, placing greater emphasis on several key areas:

- Increased emphasis on explainable AI (XAI): The investigation highlights the need for more transparency in AI systems. XAI aims to make AI decision-making processes more understandable and interpretable, increasing accountability and trust.

- Development of more robust AI safety protocols: The investigation underscores the importance of developing robust safety protocols to mitigate the risks associated with AI systems, such as the spread of misinformation and algorithmic bias.

- Strengthening of data privacy and security measures: The investigation may contribute to stricter data privacy and security regulations and encourage the development of more secure AI systems.

- Greater transparency and accountability in AI systems: Demand for transparency and accountability in AI systems is likely to grow, holding developers responsible for the outputs and potential societal impacts of their technologies.

- The rise of AI ethics boards and guidelines: The investigation may spur the creation of more AI ethics boards and guidelines to promote responsible AI development and deployment, establishing industry-wide standards and best practices.

Navigating the Regulatory Landscape: Best Practices for AI Developers

AI developers must proactively adapt to the evolving regulatory landscape. Here are some best practices:

- Prioritizing data privacy and security: Implement robust data privacy and security measures and comply with all relevant regulations, such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

- Implementing robust bias detection and mitigation strategies: Actively identify and mitigate algorithmic bias in AI systems to ensure fairness and equity in outcomes.

- Ensuring transparency and explainability in AI systems: Design AI systems that are transparent and explainable, so users can understand how decisions are made.

- Establishing clear ethical guidelines for AI development and deployment: Develop and adhere to clear ethical guidelines that prioritize responsible innovation and minimize potential harm.

- Proactive engagement with regulators: Engage with regulators early and openly to understand and comply with evolving regulations.

Conclusion

The FTC's investigation into OpenAI's ChatGPT could mark a turning point in the development and regulation of AI. While the investigation highlights significant concerns about consumer protection, data privacy, and algorithmic bias, it also gives the industry an opportunity to prioritize ethical considerations and responsible innovation. The future of AI development hinges on establishing clear guidelines, fostering transparency, and deploying these powerful technologies responsibly. By prioritizing ethical AI practices and proactively addressing potential risks, developers can help ensure that ChatGPT and similar AI systems benefit society while minimizing potential harms. Stay informed about developments in ChatGPT regulation, and make responsible AI development a priority.

Featured Posts

-

Aaron Judges 2025 Goal The Push Up Revelation

Apr 28, 2025

Aaron Judges 2025 Goal The Push Up Revelation

Apr 28, 2025 -

The Changing Face Of X A Deep Dive Into The Recent Debt Sale Financials

Apr 28, 2025

The Changing Face Of X A Deep Dive Into The Recent Debt Sale Financials

Apr 28, 2025 -

Tylor Megills Success With The Mets Pitching Strategies And Key To His Strong Performance

Apr 28, 2025

Tylor Megills Success With The Mets Pitching Strategies And Key To His Strong Performance

Apr 28, 2025 -

Tiga Warna Baru Jetour Dashing Sorotan Di Iims 2025

Apr 28, 2025

Tiga Warna Baru Jetour Dashing Sorotan Di Iims 2025

Apr 28, 2025 -

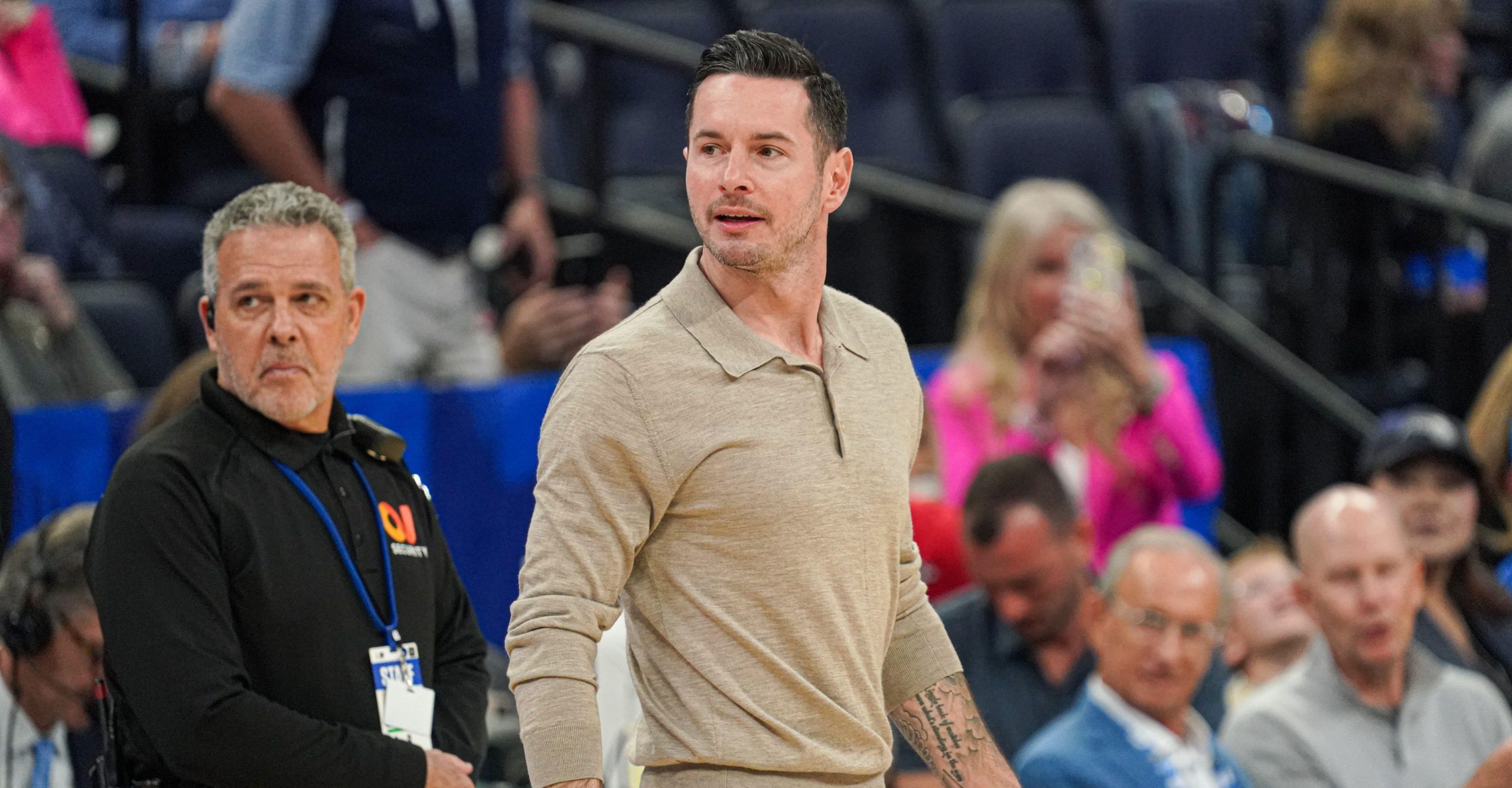

Redick Praises Espn For Jeffersons Role

Apr 28, 2025

Redick Praises Espn For Jeffersons Role

Apr 28, 2025