Tech Companies And Mass Shootings: The Unseen Influence Of Radicalizing Algorithms


The Role of Social Media Algorithms in Echo Chambers and Filter Bubbles

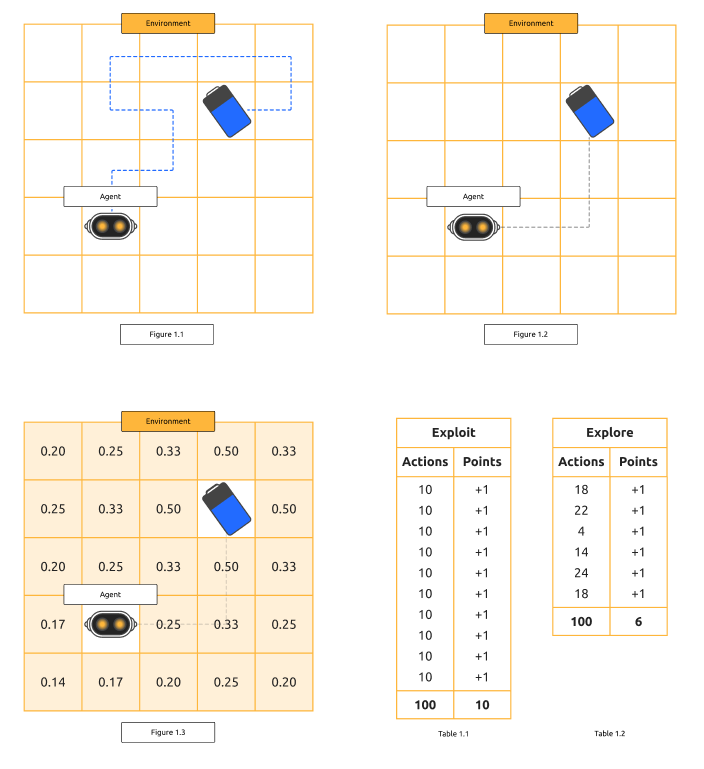

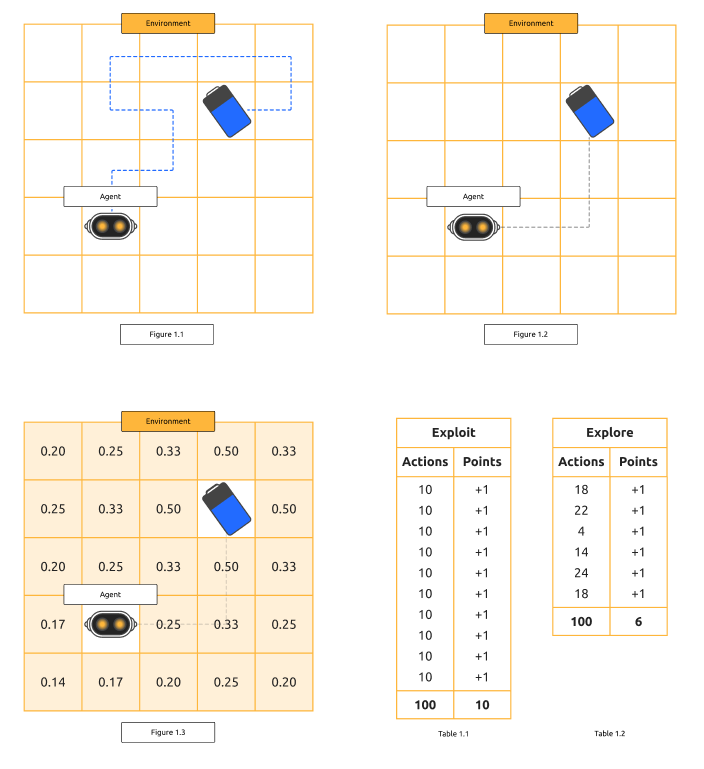

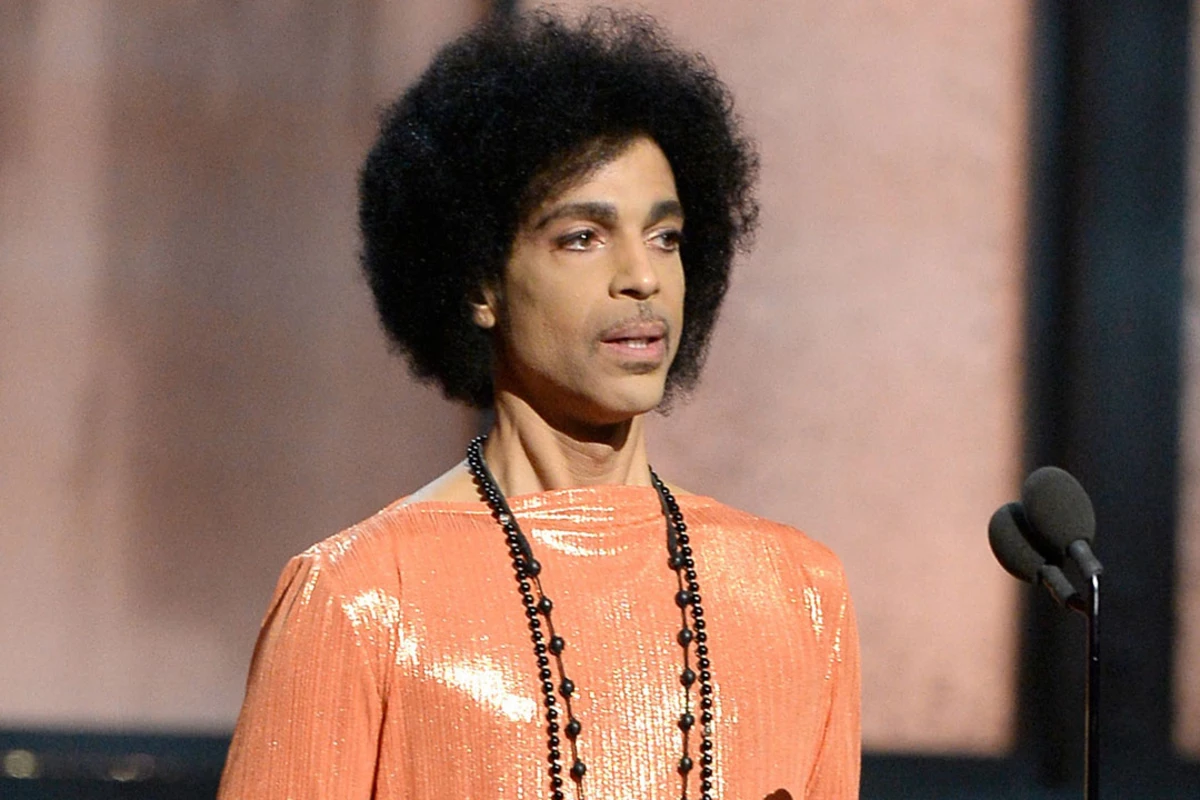

Social media algorithms, designed to maximize user engagement, can inadvertently create environments conducive to radicalization. They shape users' online experiences largely through two related effects: echo chambers and filter bubbles.

Echo Chambers and their Amplifying Effect

Recommendation algorithms prioritize content that aligns with users' pre-existing beliefs, creating echo chambers. Within these echo chambers, extremist views are repeatedly reinforced, leading to increased polarization and radicalization.

- Examples: Algorithms on platforms like YouTube and Facebook recommending extremist videos or groups based on prior viewing history.

- Studies: Research has linked echo-chamber effects to increased susceptibility to conspiracy theories and violent ideologies. Confirmation bias, the tendency to favor information that confirms existing beliefs, is amplified within these echo chambers.

- Impact: The constant reinforcement of extremist viewpoints can lead to the normalization and even glorification of violence.
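To make the feedback loop concrete, here is a toy simulation. It is a hypothetical model, not how YouTube, Facebook, or any other platform actually ranks content: each round, an engagement-only recommender reallocates the feed in proportion to the engagement each topic earned, and the user is assumed to be only slightly (20%) more likely to click one topic than the others. The function names, click rates, and number of rounds are illustrative assumptions.

```python
import math


def entropy(shares):
    """Shannon entropy (in bits) of a feed's topic mix; lower means a narrower feed."""
    return -sum(s * math.log2(s) for s in shares if s > 0)


def simulate_feed(click_rates, rounds):
    """Toy engagement feedback loop (a hypothetical model, not any platform's system).

    Each round, a topic's expected engagement is its current share of the feed
    multiplied by the user's click rate for that topic. An engagement-only
    recommender then sets next round's shares in proportion to that engagement.
    Returns the feed shares for round 0 through `rounds`.
    """
    n = len(click_rates)
    shares = [1.0 / n] * n  # round 0: a perfectly balanced feed
    history = [shares]
    for _ in range(rounds):
        engagement = [s * c for s, c in zip(shares, click_rates)]
        total = sum(engagement)
        shares = [e / total for e in engagement]  # renormalize so shares sum to 1
        history.append(shares)
    return history


# Four hypothetical topics; the user is only slightly more likely to click topic 0.
click_rates = [0.12, 0.10, 0.10, 0.10]
history = simulate_feed(click_rates, rounds=30)

for t in (0, 10, 30):
    mix = [round(s, 3) for s in history[t]]
    print(f"round {t:2d}: shares={mix} entropy={entropy(history[t]):.2f} bits")
```

In this model the favored topic's share grows from 25% to about two thirds after 10 rounds and to roughly 99% after 30, while the feed's entropy falls from 2 bits toward zero. The user's underlying preference never changes; the narrowing comes entirely from the loop between engagement and allocation.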

Filter Bubbles and Limited Exposure to Diverse Perspectives

Filter bubbles, a related phenomenon, limit users' exposure to diverse perspectives and counter-narratives. By filtering out opposing views, this algorithmic curation reduces opportunities for critical thinking and leaves extremist beliefs unchallenged.

- Examples: Personalized newsfeeds showing only content aligned with a user's political leaning, creating an insular worldview.

- Impact: The lack of exposure to alternative viewpoints can reinforce biases and prevent individuals from questioning extremist ideologies. Algorithmic personalization, while seemingly beneficial, can contribute to the isolation of users within their own ideological bubbles.

- Role of Newsfeeds: The design of personalized newsfeeds, optimized for engagement, often prioritizes sensational and emotionally charged content, potentially increasing exposure to harmful material.

The Spread of Misinformation and Hate Speech Through Algorithmic Amplification

The pursuit of maximizing engagement often leads algorithms to prioritize sensational and inflammatory content, regardless of its veracity. This algorithmic amplification significantly contributes to the spread of misinformation and hate speech.

Algorithmic Promotion of Extremist Content

Algorithms reward content that elicits strong emotional responses, inadvertently boosting the reach of extremist materials. This prioritization of engagement over factual accuracy creates a fertile ground for radicalization.

- Examples: Algorithms promoting videos containing hate speech or conspiracy theories due to high viewership and engagement.

- Challenges of Content Moderation: The sheer volume of content uploaded daily makes effective content moderation incredibly challenging, allowing extremist groups to exploit loopholes and evade detection.

- Impact of Clickbait: Clickbait and emotionally charged headlines further exacerbate this problem, driving users towards harmful content.
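The sketch below illustrates the point with a hypothetical engagement-only scoring function. The weights, the `Post` fields, and the sample posts are invented for illustration; real ranking systems combine many more signals and are not publicly documented in this detail. Because the score counts only reactions, a post already marked false can still rise to the top.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    comments: int
    shares: int
    fact_checked_false: bool  # known to the platform, but ignored by the score below


# Hypothetical weights: comments and shares (stronger reactions) count more than likes.
WEIGHTS = {"likes": 1.0, "comments": 4.0, "shares": 6.0}


def engagement_score(post):
    """Engagement-only score: nothing here measures accuracy or potential harm."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["comments"] * post.comments
            + WEIGHTS["shares"] * post.shares)


def rank_feed(posts):
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Local council publishes budget report", 300, 20, 10, False),
    Post("Shocking claim about a rival group", 150, 120, 90, True),
    Post("Weather update for the weekend", 200, 5, 15, False),
]
for post in rank_feed(posts):
    print(f"{engagement_score(post):7.1f}  {post.title}")
```

With these invented numbers, the inflammatory post scores 1,170 against 440 and 310 for the others, even though it is flagged as false. Nothing in an engagement-only score can demote it unless accuracy or harm signals are added explicitly.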

The Difficulty of Content Moderation and the Arms Race Between Extremists and Platforms

Tech companies face a constant struggle against those who deliberately exploit algorithms to disseminate harmful ideologies. This is an ongoing arms race, with extremists constantly developing new tactics to circumvent content moderation efforts.

- Cat-and-Mouse Game: Extremist groups employ sophisticated techniques to mask their content and evade detection, forcing platforms to constantly adapt their strategies.

- Limitations of Current Strategies: Current content moderation methods, relying heavily on keyword filtering and human review, are often insufficient to combat the sophisticated techniques used by extremist groups.

- Need for Proactive Solutions: More proactive solutions are needed, potentially using artificial intelligence and machine learning to identify and address extremist content at scale.
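The weakness of keyword filtering can be shown with a toy example. `BLOCKLIST`, the placeholder term `bannedterm`, and the substitution table are hypothetical. An exact-match filter misses a lightly disguised spelling, while a filter that undoes simple substitutions catches it but also flags an innocent post, illustrating the trade-off between missed content and false positives that keyword-based moderation has to manage.

```python
import re

# Hypothetical blocklist with a placeholder term; real lists are larger and curated.
BLOCKLIST = {"bannedterm"}

# A few common character substitutions used to disguise words (illustrative, not exhaustive).
SUBSTITUTIONS = str.maketrans({"4": "a", "@": "a", "3": "e", "1": "i", "0": "o", "5": "s", "$": "s"})


def naive_filter(text):
    """Flag a post only if a blocklisted word appears exactly as written."""
    words = re.findall(r"\w+", text.lower())
    return any(word in BLOCKLIST for word in words)


def normalized_filter(text):
    """Undo simple substitutions and strip separators before matching.

    This catches disguised spellings, but matching inside one long run of
    concatenated letters also flags innocent word combinations.
    """
    cleaned = text.lower().translate(SUBSTITUTIONS)
    letters_only = re.sub(r"[^a-z]", "", cleaned)
    return any(term in letters_only for term in BLOCKLIST)


posts = [
    "this post mentions bannedterm openly",
    "this post mentions b4nn3d-t3rm in disguise",
    "the banned terminal was reopened",
]
for post in posts:
    print(f"naive={naive_filter(post)!s:5} normalized={normalized_filter(post)!s:5} | {post}")
```

On these three sample posts, the naive filter flags only the first, while the normalized filter flags all three, including the harmless third one.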

The Impact of Algorithmic Bias and its Contribution to Radicalization

Algorithmic bias, stemming from biased data sets and design choices, can disproportionately expose certain groups to extremist content. This bias exacerbates existing social inequalities and contributes to radicalization.

Bias in Algorithmic Design and Data Sets

Algorithms are trained on vast datasets, which may reflect existing societal biases. These biases can be amplified and perpetuated through algorithmic recommendations and content moderation decisions.

- Examples: Algorithms that disproportionately recommend extremist content to users from marginalized communities due to biases in the training data.

- Importance of Diverse Datasets: The use of diverse and representative datasets in algorithm training is crucial to mitigate bias and promote fairness.

- Need for Algorithmic Audits: Regular audits and transparency regarding algorithmic processes are vital to identify and address bias.
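One simple check an algorithmic audit might include is comparing how often each user group is shown content that reviewers flagged as extremist. The sketch below assumes a hypothetical log of `(group, was_flagged)` records; the group names and counts are made up, and a real audit would also need careful sampling, independent labeling, and statistical testing.

```python
from collections import defaultdict


def exposure_rates(log):
    """Fraction of recommendations flagged as extremist, per user group.

    `log` is an iterable of (group, was_flagged) pairs. In a real audit the
    flags would come from independent review; the records below are invented.
    """
    shown = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in log:
        shown[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / shown[group] for group in shown}


def disparity_ratio(rates):
    """Highest group rate divided by the lowest; 1.0 means equal exposure."""
    lowest = min(rates.values())
    highest = max(rates.values())
    return float("inf") if lowest == 0 else highest / lowest


# Hypothetical log: 200 recommendations per group, with made-up flag counts.
log = [("group_a", i < 4) for i in range(200)] + [("group_b", i < 12) for i in range(200)]

rates = exposure_rates(log)
print(rates)
print(f"disparity ratio: {disparity_ratio(rates):.2f}")
```

With these invented numbers, group_b sees flagged content at 6% of recommendations versus 2% for group_a, a disparity ratio of about 3. A ratio well above 1 does not by itself prove biased training data, but it tells auditors where to look.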

The Lack of Accountability and Regulation

Insufficient accountability and regulation of how tech companies handle algorithmic radicalization poses a significant challenge. Greater government oversight and closer collaboration between governments and tech companies are needed to tackle this issue.

- Challenges of Regulating Online Content: Regulating online content without infringing on freedom of speech is a complex task requiring careful consideration.

- Need for Government Intervention: Increased government oversight and collaboration with tech companies are necessary to establish clear guidelines and standards for algorithmic design and content moderation.

- Ethical Considerations: Ethical considerations must be at the forefront of algorithm design, ensuring that these systems do not inadvertently contribute to harm.

Conclusion

Algorithms are not the sole cause of online radicalization, but they can contribute significantly to it by creating echo chambers, amplifying extremist content, and exhibiting bias. This poses a serious challenge for tech companies and regulators. Can we effectively counter the unseen influence of radicalizing algorithms and reduce their potential contribution to mass shootings? Preventing mass shootings requires a multifaceted approach, and confronting this influence is a crucial step forward. We must demand greater transparency and accountability from tech companies about their role in stopping the spread of radicalizing content, and press them to prioritize ethical algorithm design, robust content moderation, and media literacy.

Featured Posts

-

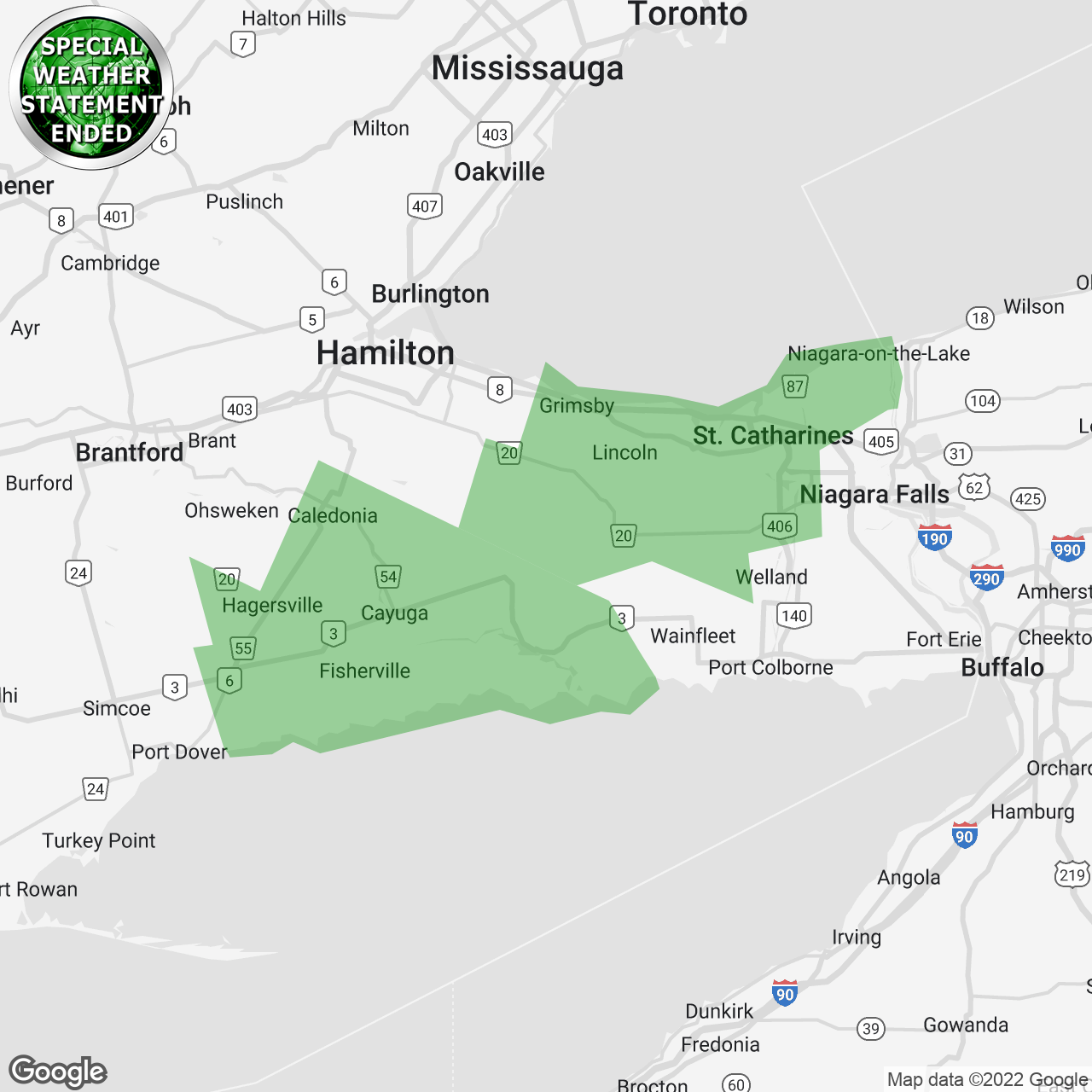

Today In History March 26th Remembering Prince And The Fentanyl Report

May 31, 2025

Today In History March 26th Remembering Prince And The Fentanyl Report

May 31, 2025 -

Bomberos Forestales Luchan Contra Incendio En Constanza Densa Humo Afecta Residentes

May 31, 2025

Bomberos Forestales Luchan Contra Incendio En Constanza Densa Humo Afecta Residentes

May 31, 2025 -

Increased Fire Risk Prompts Special Weather Statement For Cleveland Akron

May 31, 2025

Increased Fire Risk Prompts Special Weather Statement For Cleveland Akron

May 31, 2025 -

Kontuziyata Na Grisho V Rolan Garos Kakvo Se Sluchi I Kakvo Sledva

May 31, 2025

Kontuziyata Na Grisho V Rolan Garos Kakvo Se Sluchi I Kakvo Sledva

May 31, 2025 -

Munguia Faces Doping Accusations A Denial And Explanation

May 31, 2025

Munguia Faces Doping Accusations A Denial And Explanation

May 31, 2025