The Dark Side Of AI Therapy: Surveillance And The Erosion Of Privacy

Data Collection and its Implications for AI Therapy Privacy

The allure of AI-powered mental health solutions is undeniable, but understanding how much data these apps collect is critical to protecting your privacy.

The Extent of Data Collection

AI therapy apps collect a surprising amount of personal data, often exceeding what users expect. This data fuels the algorithms that power these platforms, but its collection raises significant privacy concerns.

- Voice recordings: Some voice-enabled apps record therapy sessions, analyzing tone, pace, and word choice to gauge emotional state.

- Text messages: Chat-based therapy apps collect every message exchanged between the user and the AI, including sensitive personal details.

- Location data: Some apps access location information, potentially tracking user movements and activity patterns.

- Emotional responses: Apps monitor user input to assess emotional states, tracking changes in mood and sentiment over time.

- Metadata: Beyond the explicit data, apps also collect metadata – information about the data, such as timestamps, IP addresses, and device information, which can be used to build detailed user profiles.

Data Security and Breaches

The sensitive nature of the data collected by AI therapy apps makes them a prime target for cyberattacks. The industry currently lacks robust security standards, increasing the risk of breaches.

- Breaches elsewhere in health tech illustrate the vulnerability: healthcare data breaches are unfortunately common, with significant consequences for those affected.

- The fragmented nature of the AI therapy market, with numerous smaller companies operating independently, exacerbates this issue. Without standardized security protocols, user data remains at risk.

Third-Party Data Sharing

Many AI therapy apps share user data with third parties for various purposes, often without explicit user consent or sufficient transparency.

- Data may be shared with advertisers for targeted advertising, potentially exposing users to inappropriate or harmful content.

- Researchers may access anonymized data for research purposes, but the risk of re-identification remains.

- Law enforcement agencies could request access to user data under certain circumstances, which may compromise confidentiality.

- Many privacy policies are opaque and difficult for average users to understand, hindering informed consent.
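The re-identification risk mentioned above can be made concrete with a small sketch. It assumes a hypothetical "anonymized" dataset in which names have been removed but quasi-identifiers (ZIP code, birth year, gender) remain; the field names and records are invented for illustration and do not come from any real app. A record whose combination of quasi-identifiers is unique in the dataset is a candidate for being linked back to a person through other data sources.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "94110", "birth_year": 1988, "gender": "F", "mood_score": 3},
    {"zip": "94110", "birth_year": 1988, "gender": "F", "mood_score": 7},
    {"zip": "94110", "birth_year": 1975, "gender": "M", "mood_score": 5},
    {"zip": "10001", "birth_year": 1992, "gender": "F", "mood_score": 2},
]

# Assumed set of quasi-identifiers; a real audit would choose these per dataset.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def group_sizes(rows, keys=QUASI_IDENTIFIERS):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def unique_rows(rows, keys=QUASI_IDENTIFIERS):
    """Return rows whose quasi-identifier combination appears exactly once."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


at_risk = unique_rows(records)
print(f"{len(at_risk)} of {len(records)} records are unique on {QUASI_IDENTIFIERS}")
```

This is only a rough uniqueness check, not a full privacy analysis: a record that shares its quasi-identifiers with others can still be exposed in other ways, and real datasets may contain many more linkable fields.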

The Surveillance Aspect of AI Therapy

Beyond data collection, the very nature of AI therapy involves a degree of constant surveillance, raising significant ethical and privacy concerns.

Algorithmic Bias and Profiling

AI algorithms are trained on vast datasets, which may reflect existing societal biases. This can lead to inaccurate or unfair assessments of users.

- For example, an algorithm trained on data predominantly from one demographic may misinterpret the emotional expressions or communication styles of users from other backgrounds.

- The lack of human oversight in AI decision-making makes it challenging to identify and correct these biases.

Constant Monitoring and the Chilling Effect

The constant monitoring inherent in AI therapy can create a "chilling effect," discouraging users from expressing themselves openly and honestly, for fear of judgment or negative consequences.

- Knowing that every word and sentiment is being analyzed may inhibit self-disclosure, hindering the effectiveness of therapy.

- Users may self-censor their thoughts and feelings, preventing them from accessing the full benefits of therapy.

Lack of Transparency and Control

Users often lack transparency into how their data is being used and have limited control over their own information.

- Many AI therapy app privacy policies are lengthy, complex, and difficult to understand.

- Users often have little say in how their data is used or shared, making it difficult to exert control over their privacy.

The Erosion of Trust and the Future of AI Therapy Privacy

The surveillance aspects of AI therapy have the potential to significantly erode trust – both in the therapeutic relationship and in the technology itself.

Impact on the Therapeutic Relationship

Data breaches or the perceived lack of confidentiality can severely damage the therapeutic relationship, hindering the effectiveness of treatment.

- Knowing that their personal struggles and vulnerabilities are potentially accessible to others can significantly impair trust between the user and the therapist (human or AI).

- The confidentiality that is central to effective therapy is threatened by the data collection practices of many AI therapy apps.

Regulatory Gaps and the Need for Reform

The current regulatory landscape for AI therapy is inadequate, necessitating stronger policies to protect user privacy.

- Data minimization – collecting only the data necessary for the service – should be a core principle.

- Stronger data security standards are needed to prevent breaches and protect user data from unauthorized access.

- Independent audits of AI therapy apps should be mandated to ensure compliance with privacy regulations.
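As a rough illustration of the data minimization principle above, the sketch below filters an incoming event against an allow-list before anything is stored. The field names, the allow-list, and the `minimize` helper are all hypothetical; which fields a given service genuinely needs is a design decision this example does not settle.

```python
# Hypothetical allow-list: the only fields this imaginary service needs to store.
ALLOWED_FIELDS = frozenset({"user_id", "message_text", "timestamp"})


def minimize(event: dict, allowed: frozenset = ALLOWED_FIELDS) -> dict:
    """Return a copy of the event containing only allow-listed fields."""
    return {key: value for key, value in event.items() if key in allowed}


# Invented example event carrying more than the service needs.
raw_event = {
    "user_id": "u-123",
    "message_text": "I had a rough day.",
    "timestamp": "2025-05-15T10:00:00Z",
    "ip_address": "203.0.113.7",
    "gps": (37.77, -122.42),
    "device_model": "ExamplePhone 12",
}

stored = minimize(raw_event)
print(sorted(stored))
```

An allow-list is used rather than a block-list so that any field added later is dropped by default until someone decides it is necessary.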

Protecting Your Privacy When Using AI Therapy Apps

While enjoying the convenience of AI therapy, you can take steps to mitigate privacy risks:

- Carefully read the privacy policy of any AI therapy app before using it.

- Choose reputable apps with transparent privacy practices and strong security measures.

- Limit data sharing to the absolute minimum required.

- Consider the potential risks before disclosing highly sensitive personal information.

Conclusion

The convenience of AI therapy should not come at the cost of your privacy. The pervasive surveillance and data collection practices of many AI therapy apps raise significant privacy concerns. Algorithmic bias, data breaches, and a lack of transparency threaten the integrity of the therapeutic relationship and the wellbeing of users. Users should demand greater transparency from AI therapy providers and advocate for stronger regulations so that mental health data remains safe and confidential.

Featured Posts

-

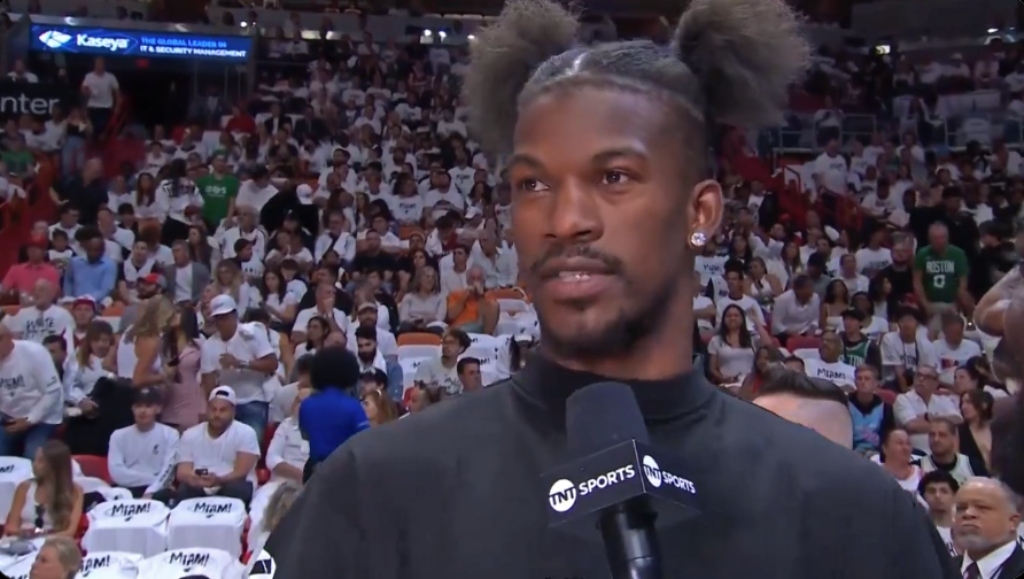

Draymond Greens Honest Assessment Of Jimmy Butler Following Warriors Kings Game

May 15, 2025

Draymond Greens Honest Assessment Of Jimmy Butler Following Warriors Kings Game

May 15, 2025 -

Kibris Isguecue Piyasasi Icin Yeni Dijital Veri Tabani Rehberi

May 15, 2025

Kibris Isguecue Piyasasi Icin Yeni Dijital Veri Tabani Rehberi

May 15, 2025 -

Was Jimmy Butler Overwhelmed Evaluating His Need For Support In The Miami Heat Playoffs

May 15, 2025

Was Jimmy Butler Overwhelmed Evaluating His Need For Support In The Miami Heat Playoffs

May 15, 2025 -

Kibris Sorunu Ve Direkt Ucuslar Tatar In Aciklamalarinin Oenemi

May 15, 2025

Kibris Sorunu Ve Direkt Ucuslar Tatar In Aciklamalarinin Oenemi

May 15, 2025 -

Highway 407 East Tolls Scrapped Ontarios Permanent Gas Tax Cut Plan

May 15, 2025

Highway 407 East Tolls Scrapped Ontarios Permanent Gas Tax Cut Plan

May 15, 2025

Latest Posts

-

Dodgers Offense Falters In Loss To Cubs

May 15, 2025

Dodgers Offense Falters In Loss To Cubs

May 15, 2025 -

Nba Disciplinary Action Anthony Edwards Fined For Inappropriate Fan Interaction

May 15, 2025

Nba Disciplinary Action Anthony Edwards Fined For Inappropriate Fan Interaction

May 15, 2025 -

Anthony Edwards Custody Battle Details Of The Reported Dispute

May 15, 2025

Anthony Edwards Custody Battle Details Of The Reported Dispute

May 15, 2025 -

Anthony Edwards Vulgar Remark To Fan Results In 50 000 Nba Fine

May 15, 2025

Anthony Edwards Vulgar Remark To Fan Results In 50 000 Nba Fine

May 15, 2025 -

Nba Star Anthony Edwards Facing Custody Battle Mothers Response

May 15, 2025

Nba Star Anthony Edwards Facing Custody Battle Mothers Response

May 15, 2025