The Ethics Of AI Therapy In A Surveillance Society

2.1 Data Privacy and Security in AI-Powered Mental Health Services

The sensitive nature of mental health information demands the utmost protection. Because AI therapy involves collecting, analyzing, and storing deeply personal data, it creates significant vulnerabilities. The ethics of AI therapy in a surveillance society are therefore fundamentally tied to safeguarding this data.

The Vulnerability of Sensitive Patient Data

Mental health records contain highly personal and often stigmatized information. Breaches can have devastating consequences, leading to identity theft, discrimination, and emotional distress.

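- Data breaches: Healthcare data breaches have repeatedly shown how exposed sensitive information is to cyberattacks and unauthorized access. In the 2020 breach of the Finnish psychotherapy provider Vastaamo, for example, stolen therapy records were used to blackmail individual patients.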

- Unauthorized access: AI systems, if not properly secured, could be targets for malicious actors seeking to exploit patient data for financial gain or other nefarious purposes.

- Secondary uses of data: Data collected for AI therapy might be repurposed for insurance profiling, marketing, or research without proper consent, raising serious ethical questions.

Regulatory Frameworks and Data Protection

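Existing regulations such as the GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act) offer some protection, but their applicability to AI therapy is uneven. HIPAA, for instance, applies only to covered entities such as healthcare providers and health plans, and to their business associates, so a direct-to-consumer mental health app operating outside that system may fall outside its scope entirely. Gaps also remain around how AI algorithms use patient data and how data can be aggregated across platforms.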

- Data encryption: Strong encryption is essential to protect data both at rest and in transit.

- Data anonymization: Techniques to remove identifying information while preserving data utility can enhance privacy.

- Informed consent: Patients must provide explicit and informed consent regarding the collection, use, and sharing of their data.
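The data anonymization point above can be made more concrete with a small sketch. The Python below is a hypothetical illustration, not a method taken from any particular product: it drops fields assumed to be direct identifiers (the `DIRECT_IDENTIFIERS` set and the record layout are invented for this example) and replaces the patient ID with a keyed HMAC-SHA256 token. Strictly speaking this is pseudonymization rather than anonymization: whoever holds the key can still link tokens back to patients, and under the GDPR pseudonymized data remains personal data.

```python
import hashlib
import hmac

# Hypothetical list of direct identifiers; a real system would derive this
# from a documented data inventory.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace patient_id with a keyed token.

    HMAC-SHA256 maps the same patient to the same token every time, so
    records can still be linked for analysis, but the token cannot be
    recomputed without the secret key.
    """
    token = hmac.new(
        secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["patient_token"] = token
    return cleaned


if __name__ == "__main__":
    # Example key only; a real key would live in a separate secret store.
    key = b"example-key-stored-separately"
    record = {"patient_id": 1042, "name": "A. Patient",
              "email": "a.patient@example.org", "phq9_score": 14}
    print(pseudonymize(record, key))
```

Keeping the key apart from the dataset limits the damage if the pseudonymized records leak. Remaining fields such as age or postcode can still allow re-identification when combined, so a step like this is a starting point rather than a guarantee of privacy.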

Transparency and Control over Data

Transparency and patient control are paramount. Individuals should have clear access to their data, the ability to correct inaccuracies, and the right to request data deletion. This transparency is crucial for addressing the ethics of AI therapy in a surveillance society.

2.2 Algorithmic Bias and Fairness in AI Therapy

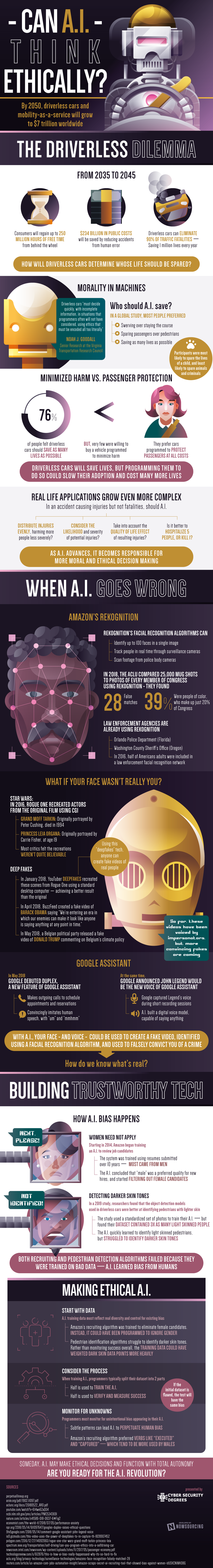

AI algorithms learn from their training data, and if that data reflects existing societal biases (e.g., racial, gender, or socioeconomic), the resulting models can perpetuate and even amplify those biases. This threatens fairness and equity in both access to mental healthcare and the quality of care people receive.

The Impact of Biased Algorithms

Biases embedded in AI systems can lead to:

- Unequal access to care: Certain demographic groups might be unfairly excluded from AI-powered mental health services.

- Misdiagnosis and inappropriate treatment: Biased algorithms may lead to inaccurate diagnoses and ineffective treatment plans for specific populations.

- Reinforcement of existing inequalities: AI systems can unintentionally exacerbate existing health disparities.
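One way to detect the misdiagnosis risk described above is to compare error rates across groups. The sketch below rests on hypothetical assumptions: the record format `(group, actual, predicted)` and the "needs follow-up care" label are invented for illustration. It computes the false negative rate per group, that is, the share of people who needed care but were not flagged. This is only one of several fairness metrics, and it is only meaningful if the ground-truth labels are not themselves biased.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Return {group: false negative rate} for (group, actual, predicted) records.

    `actual` and `predicted` are booleans meaning "needs follow-up care".
    Groups with no actual positive cases are omitted, since their rate is
    undefined.
    """
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                missed[group] += 1
    return {group: missed[group] / positives[group] for group in positives}


def max_gap(rates):
    """Largest difference in false negative rate between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Invented example data: each group has three people who needed care.
    records = [
        ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
        ("B", True, False), ("B", True, False), ("B", True, True), ("B", False, True),
    ]
    rates = false_negative_rate_by_group(records)
    print(rates)  # group B's missed-care rate is twice group A's
    print(max_gap(rates))
```

A large gap does not by itself explain why the model fails one group more often, but it gives auditors a concrete signal to investigate.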

Ensuring Algorithmic Transparency and Accountability

Transparency and accountability mechanisms are essential to mitigate bias:

- Explainable AI (XAI): Designing models whose outputs can be explained, so that clinicians and patients can see how a given recommendation was reached.

- Audit trails: Maintaining detailed logs of algorithm decisions and data usage to facilitate auditing and identify potential bias.

- Independent reviews: Regular independent reviews of AI systems by experts to ensure fairness and accuracy.
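Audit trails like the ones described above are often made tamper-evident by chaining entries with hashes. The class below is a minimal, hypothetical sketch using only the Python standard library; the `AuditLog` name, the entry fields, and the example actors are illustrative assumptions. Each entry stores the SHA-256 hash of the previous entry, so editing or deleting an earlier record causes verification to fail.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained log of algorithm decisions and data access."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # sort_keys gives a stable serialization, so the hash is reproducible.
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, actor: str, action: str, detail: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            # Copy, so later changes to the caller's dict do not alter the log.
            "detail": copy.deepcopy(detail),
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("triage-model-v2", "risk_score", {"patient_token": "3f9a", "score": 0.82})
    log.append("clinician-17", "override", {"patient_token": "3f9a", "reason": "reviewed"})
    print(log.verify())  # True
    log.entries[0]["detail"]["score"] = 0.10  # simulate tampering
    print(log.verify())  # False
```

A chain alone cannot catch someone who rewrites the entire log or silently drops its newest entries. In practice the latest hash would also be stored with an independent party, such as the external reviewers mentioned above, so that those changes become detectable too.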

Addressing Algorithmic Bias Through Diverse Datasets

Using diverse and representative datasets for training is crucial to mitigate bias and ensure fair outcomes. This necessitates careful data collection and curation practices.
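Part of that curation can be a simple representation check before training. In the sketch below, the group labels, the reference shares (which might come from census or clinic population figures), and the 5-percentage-point tolerance are all hypothetical choices. It only flags groups that are under-represented by size; adequate numbers do not by themselves guarantee fair outcomes if labels or measurements are biased.

```python
from collections import Counter


def representation_gaps(training_groups, reference_shares, tolerance=0.05):
    """Flag groups whose share of the training data falls short of a reference.

    training_groups: iterable with one group label per training example.
    reference_shares: dict of group -> expected proportion of the population.
    Returns {group: (observed_share rounded to 3 places, expected_share)} for
    every group whose observed share is lower than expected by more than
    `tolerance`.
    """
    counts = Counter(training_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = (round(observed, 3), expected)
    return gaps


if __name__ == "__main__":
    # Invented figures for illustration only.
    training = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
    reference = {"A": 0.60, "B": 0.25, "C": 0.15}
    print(representation_gaps(training, reference))  # prints {'C': (0.05, 0.15)}
```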

2.3 The Therapeutic Relationship and the Human Element in AI-Assisted Care

While AI can augment mental healthcare, it cannot replace the crucial human element of the therapeutic relationship. The ethics of AI therapy in a surveillance society depend heavily on maintaining this balance.

Maintaining the Therapeutic Alliance

The therapeutic alliance, built on trust, empathy, and human connection, is fundamental to effective mental health treatment. AI, while helpful, lacks the nuanced understanding and emotional intelligence of a human therapist.

- Limitations of AI in emotional support: AI can generate supportive responses, but it cannot fully replicate the empathy and attunement a human therapist brings to each session.

- Complex human needs: AI may struggle to address complex human needs that require deep empathy and interpersonal skills.

The Role of Human Oversight and Intervention

Human oversight is critical:

- Therapists and psychiatrists: Qualified professionals must supervise the use of AI tools, ensuring appropriate interventions and providing necessary human support.

- Ethical guidelines: Clear ethical guidelines for the use of AI in therapy must be developed and implemented.
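Human oversight can also be built into the software itself by routing certain AI outputs to a clinician before they reach the patient. The sketch below is hypothetical: the `ModelOutput` fields, the route names, and both thresholds are illustrative assumptions, and in a real service such thresholds would be set and reviewed by the supervising professionals rather than hard-coded by developers.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be set and periodically
# reviewed by the supervising clinicians.
RISK_ESCALATION_THRESHOLD = 0.5
MIN_CONFIDENCE = 0.8


@dataclass
class ModelOutput:
    suggestion: str    # e.g. a proposed exercise or follow-up question
    risk_score: float  # model's estimate of crisis risk, from 0 to 1
    confidence: float  # model's confidence in its suggestion, from 0 to 1


def route(output: ModelOutput) -> str:
    """Decide how an AI suggestion reaches the patient.

    Elevated risk always goes straight to a clinician; low-confidence output
    waits for clinician review; everything else may be shown, with the
    clinician still able to see it.
    """
    if output.risk_score >= RISK_ESCALATION_THRESHOLD:
        return "escalate_to_clinician_now"
    if output.confidence < MIN_CONFIDENCE:
        return "queue_for_clinician_review"
    return "show_with_clinician_visibility"


if __name__ == "__main__":
    example = ModelOutput("Try a breathing exercise", risk_score=0.1, confidence=0.9)
    print(route(example))  # prints show_with_clinician_visibility
```

The design choice here is that the model never resolves a high-risk case on its own; the most it can do without review is offer a low-risk suggestion that a clinician can still see.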

Avoiding Over-Reliance on AI and Maintaining Human Connection

Over-dependence on AI can be detrimental, because human interaction remains vital for building trust, addressing complex issues, and fostering genuine connection in mental healthcare.

3. Conclusion: Charting a Responsible Path for AI Therapy in a Surveillance Society

The ethics of AI therapy in a surveillance society present a complex challenge. Data privacy, algorithmic bias, and the preservation of the human element are paramount. Strengthening data protection laws, promoting algorithmic transparency, ensuring human oversight, and prioritizing patient autonomy are crucial steps towards responsible AI implementation. Let's work together to ensure that the ethics of AI therapy are prioritized in our increasingly surveillance-driven society. Join the conversation and demand responsible implementation of AI in mental health. Let's build a future where technology enhances, not undermines, the therapeutic relationship and protects vulnerable individuals.
