Understanding AI's Learning Process: Implications For Ethical And Responsible Use


How AI Learns: Machine Learning Techniques

The foundation of most AI systems lies in machine learning, a process in which algorithms learn patterns from data rather than following explicitly programmed rules. Three key techniques drive this learning:

- Supervised Learning: The algorithm is trained on labeled examples, that is, inputs paired with their desired outputs. It learns to map inputs to outputs, which lets it predict outcomes for new, unseen data. For example, an image recognition system might be trained on thousands of images labeled "cat" or "dog," learning to distinguish between the two.

- Unsupervised Learning: Unlike supervised learning, this technique uses unlabeled data. The algorithm seeks patterns, structures, or relationships within the data without any predefined categories. A common application is customer segmentation, where an algorithm groups customers based on their purchasing behavior or demographics.

- Reinforcement Learning: An agent learns through trial and error. It interacts with an environment, receiving rewards for desirable actions and penalties for undesirable ones. Through this feedback loop, the agent learns a strategy that maximizes its cumulative reward over time. A classic example is a game-playing AI, which learns to win by experimenting with different moves and being rewarded when those moves lead to success.
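
The three learning paradigms above can be illustrated with a deliberately tiny, standard-library Python sketch. The algorithms are our own choice for illustration, not something the article prescribes: a nearest-centroid classifier stands in for supervised learning, k-means clustering for unsupervised learning, and an epsilon-greedy agent on a multi-armed bandit (the simplest reinforcement-learning setting, with a single state and immediate rewards) for reinforcement learning. All data, function names, and parameters are hypothetical.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

# --- Supervised: learn from labeled (features, label) pairs ---
def train_nearest_centroid(examples):
    """Return {label: mean feature vector} computed from labeled examples."""
    sums, counts = {}, {}
    for x, y in examples:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda y: sq_dist(centroids[y], x))

# --- Unsupervised: group unlabeled points with k-means ---
def kmeans(points, k, iters=20):
    # Farthest-first initialization keeps this example deterministic.
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centers)))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: sq_dist(centers[j], p))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [
            [sum(col) / len(cl) for col in zip(*cl)] if cl else centers[j]
            for j, cl in enumerate(clusters)
        ]
    return centers, clusters

# --- Reinforcement: epsilon-greedy agent on a multi-armed bandit ---
def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    n = len(reward_probs)
    counts, values = [0] * n, [0.0] * n  # pulls and estimated mean reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random action
        else:
            action = max(range(n), key=lambda i: values[i])  # exploit best estimate
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0  # environment feedback
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # running mean
    return values

if __name__ == "__main__":
    labeled = [([1.0, 1.2], "cat"), ([0.9, 1.0], "cat"), ([3.0, 3.1], "dog"), ([3.2, 2.9], "dog")]
    print(predict(train_nearest_centroid(labeled), [1.1, 0.9]))  # cat
    _, groups = kmeans([[1, 1], [1.2, 0.8], [5, 5], [5.1, 4.9]], k=2)
    print(groups)
    print(run_bandit([0.2, 0.8]))  # the estimate for action 1 should approach 0.8
```

The contrast is in the inputs: the classifier needs labels, k-means only needs points, and the bandit agent never sees a dataset at all, only the rewards its own actions produce.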

The quality and nature of the data used in training are paramount. Poor data quality, including missing values or inconsistencies, can significantly hinder the learning process. Critically, biased data can lead to biased AI systems, a subject we will explore next.
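
As a rough illustration of checking for the data-quality problems just mentioned, the sketch below counts missing values per field and flags identical inputs that carry conflicting labels. It assumes records are plain Python dicts with hashable values and a single label field; the function name and the sample records are hypothetical.

```python
from collections import Counter, defaultdict

def data_quality_report(rows, label_key):
    """Report missing values per field and identical inputs with conflicting labels."""
    missing = Counter()
    labels_by_input = defaultdict(set)
    for row in rows:
        for key, value in row.items():
            if value is None or value == "":
                missing[key] += 1
        # Treat every non-label field as the input; the same input with
        # different labels is one simple kind of labeling inconsistency.
        features = tuple(sorted((k, v) for k, v in row.items() if k != label_key))
        labels_by_input[features].add(row.get(label_key))
    conflicts = [dict(f) for f, labels in labels_by_input.items() if len(labels) > 1]
    return {"missing": dict(missing), "conflicting_labels": conflicts}

rows = [
    {"age": 34, "income": 52000, "approved": "yes"},
    {"age": None, "income": 41000, "approved": "no"},
    {"age": 34, "income": 52000, "approved": "no"},  # same input, different label
]
print(data_quality_report(rows, label_key="approved"))
# {'missing': {'age': 1}, 'conflicting_labels': [{'age': 34, 'income': 52000}]}
```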

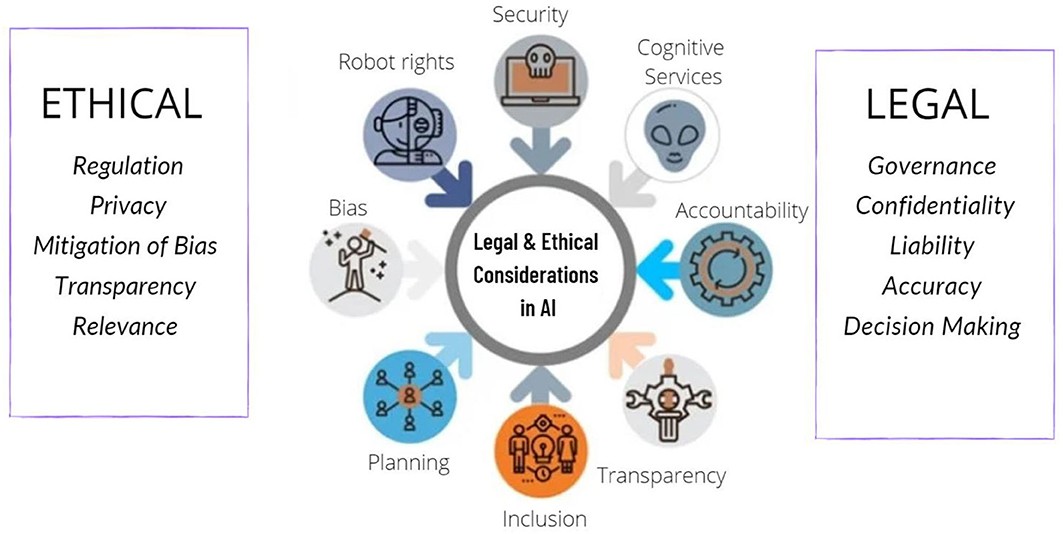

Data Bias and Its Ethical Implications

Data bias refers to systematic distortions in data, often reflecting existing societal biases or unrepresentative sampling. Bias can enter during data collection, during data labeling, or through the choice of features used to train the model. The consequences can be severe:

- Algorithmic bias that perpetuates societal inequalities: Biased data can produce AI systems that discriminate against certain groups. For example, a facial recognition system trained mostly on images of one demographic group might misidentify individuals from underrepresented racial groups more frequently.

- Biased AI systems in real-world applications: Biased algorithms have been implicated in lending, where applicants from certain demographic groups may be unfairly denied credit, and in hiring, where resumes from women or minority candidates may be less likely to be selected.
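
One common way to surface the disparities described above is to compare outcomes across groups. The sketch below computes per-group approval rates and error rates, plus the ratio of the lowest approval rate to the highest. The 0.8 threshold echoes the "four-fifths" rule of thumb from US employment-selection guidance; treating it as a flag for a lending model is our assumption, and the loan decisions are invented for illustration.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def error_rates(records):
    """records: iterable of (group, predicted, actual). Returns error rate per group."""
    totals, errors = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        errors[group] += int(predicted != actual)
    return {g: errors[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    return min(rates.values()) / max(rates.values())

# Hypothetical loan decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))  # {'A': 0.8, 'B': 0.5} 0.625
if ratio < 0.8:  # four-fifths rule of thumb
    print("Possible adverse impact: review this model")
```

The same comparison applied to `error_rates` is what reveals a facial recognition system that misidentifies one group more often than another.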

Mitigating bias requires careful attention to data collection, pre-processing, and algorithm design. Techniques such as data augmentation, bias detection algorithms, and fairness-aware machine learning can help address these challenges.
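
As one concrete instance of fairness-aware pre-processing, the sketch below implements a published technique usually called reweighing: each (group, label) combination gets the weight P(group) * P(label) / P(group, label), so that in the weighted training data group membership and outcome are statistically independent. The sample data are hypothetical, and a real pipeline would pass these weights to a learner that accepts per-sample weights.

```python
from collections import Counter

def reweighing_weights(samples):
    """samples: list of (group, label) pairs.
    Returns a weight per (group, label) so that, after weighting,
    group membership and label are statistically independent."""
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    joint_counts = Counter(samples)
    # w(g, y) = P(g) * P(y) / P(g, y) = count(g) * count(y) / (n * count(g, y))
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * c)
        for (g, y), c in joint_counts.items()
    }

def weighted_positive_rate(samples, weights, group):
    """Share of positive labels within one group after applying the weights."""
    total = sum(weights[(g, y)] for g, y in samples if g == group)
    positive = sum(weights[(g, y)] for g, y in samples if g == group and y == 1)
    return positive / total

# Hypothetical training labels: group A is 80% positive, group B 50%.
samples = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 5 + [("B", 0)] * 5
weights = reweighing_weights(samples)
print(weights)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(samples, weights, g), 3))  # both 0.65
```

Reweighing leaves every record in place and only changes how much each one counts, which makes it easy to audit compared with editing or dropping data.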

Transparency and Explainability in AI

Many AI systems, particularly deep learning models, operate as "black boxes," making it difficult to understand how they arrive at their decisions. This lack of transparency poses significant ethical challenges:

- The challenge of ethical oversight for "black box" AI: If we don't understand how an AI system makes decisions, it's difficult to ensure that its actions are ethical and fair.

Explainable AI (XAI) aims to address this issue by making AI decision-making processes more transparent and understandable. Techniques for improving AI transparency include:

- Developing explainable models: Using models whose internal workings are easier to interpret, such as linear models or shallow decision trees, or adding post-hoc explanations to more complex ones.

- Auditing AI systems for bias and fairness: Regularly assessing AI systems for potential biases and discriminatory outcomes.

- Implementing mechanisms for human oversight: Designing systems that allow human review and intervention in critical decision-making processes.
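
Two items from the list above can be combined in a minimal sketch: an interpretable model, here a linear score whose per-feature contributions add up exactly to the output, and a human-oversight hook that routes borderline scores to a reviewer instead of deciding automatically. The feature names, weights, bias, and review margin are hypothetical values chosen for illustration, not taken from any real credit model.

```python
# Hypothetical, hand-set weights for an interpretable scoring model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0

def score_with_explanation(applicant):
    """Return the score and each feature's contribution (weight * value)."""
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions

def decide(applicant, review_margin=0.5):
    """Approve, decline, or send borderline cases to a human reviewer."""
    score, contributions = score_with_explanation(applicant)
    if abs(score) < review_margin:
        decision = "human_review"  # too close to call: a person decides
    else:
        decision = "approve" if score > 0 else "decline"
    # List the largest drivers of the score first, as a readable explanation.
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, top

for applicant in (
    {"income_k": 80, "debt_ratio": 0.2, "late_payments": 0},
    {"income_k": 40, "debt_ratio": 0.5, "late_payments": 1},
    {"income_k": 50, "debt_ratio": 0.3, "late_payments": 0},
):
    print(decide(applicant))
```

Because every decision comes with its contributions, an auditor can see why an applicant was declined, and the review margin makes the boundary of automated decision-making explicit and adjustable.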

Responsible AI Development and Deployment

Responsible AI development and deployment require a holistic approach that considers ethical implications throughout the entire lifecycle of an AI system. This includes:

- Adhering to ethical guidelines and regulations: Following established ethical frameworks and complying with applicable regulations, such as data protection law, throughout development and deployment.

- Prioritizing human well-being and safety: Designing AI systems to minimize foreseeable harms and testing them for safety before and after deployment.

- Considering the societal impact of AI technologies: Conducting thorough impact assessments to understand the potential societal consequences of deploying AI systems.

- Promoting collaboration between researchers, developers, policymakers, and the public: Fostering open dialogue and collaboration among stakeholders to ensure that AI development aligns with societal values.

Understanding AI's Learning Process – A Path Towards Responsible Innovation

Understanding AI's learning process is critical for building ethical and responsible AI systems. Addressing data bias, ensuring transparency, and promoting responsible development practices are essential steps toward mitigating the risks associated with AI and maximizing its benefits. Ignoring these ethical considerations can lead to unintended consequences, perpetuating inequalities and undermining public trust.

To promote responsible innovation, we must keep deepening our understanding of how AI systems learn and what that means ethically. Continue your education by exploring resources like the AI Now Institute, the Partnership on AI, and academic publications on AI ethics. Let's work together to advocate for ethical AI practices and ensure that AI technologies benefit all of humanity.
