Understanding AI's Learning Process: Towards Responsible AI Practices

1. The Fundamentals of AI Learning

AI's learning process hinges on various techniques, primarily categorized into supervised, unsupervised, and reinforcement learning. Each approach has its strengths, weaknesses, and unique data requirements.

1.1 Supervised Learning:

Supervised learning involves training an algorithm on a labeled dataset, meaning each data point is tagged with the correct answer. The algorithm learns to map inputs to outputs based on these examples. Consider image recognition, where an algorithm learns to identify cats and dogs by being trained on images labeled "cat" or "dog". Spam filtering is another example; the algorithm learns to classify emails as spam or not spam based on labeled examples.

- Definition: Training an algorithm on labeled data to map inputs to outputs.

- Data Requirements: Labeled datasets, requiring significant human effort for annotation.

- Algorithm Examples: Linear regression, support vector machines (SVMs), decision trees, neural networks.

- Limitations: Depends heavily on the quality and representativeness of the labeled data; performance degrades on inputs that differ significantly from the training data, and a model that overfits may generalize poorly even to similar unseen examples.
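To make the idea of mapping inputs to outputs concrete, here is a minimal sketch of a supervised classifier. It uses a nearest-centroid rule, chosen only because it fits in a few lines; it is not one of the algorithms listed above. The function names (`fit_centroids`, `predict`) and the toy cat/dog measurements are assumptions invented for illustration.

```python
from collections import defaultdict
from math import dist

def fit_centroids(points, labels):
    """Learn one centroid (mean point) per label from labeled examples."""
    groups = defaultdict(list)
    for point, label in zip(points, labels):
        groups[label].append(point)
    return {
        label: tuple(sum(coord) / len(members) for coord in zip(*members))
        for label, members in groups.items()
    }

def predict(centroids, point):
    """Return the label whose centroid is closest to the given point."""
    return min(centroids, key=lambda label: dist(centroids[label], point))

# Hypothetical labeled dataset: (weight in kg, height in cm) per animal.
train_points = [(4.0, 25.0), (5.0, 23.0), (20.0, 55.0), (30.0, 60.0)]
train_labels = ["cat", "cat", "dog", "dog"]

centroids = fit_centroids(train_points, train_labels)
print(predict(centroids, (4.5, 24.0)))   # close to the cat examples
print(predict(centroids, (25.0, 58.0)))  # close to the dog examples
```

The labels do all the work here: without `train_labels` the algorithm would have no notion of what "cat" or "dog" means, which is why annotation effort dominates the cost of supervised learning.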

1.2 Unsupervised Learning:

Unlike supervised learning, unsupervised learning deals with unlabeled data. The algorithm identifies patterns, structures, and relationships within the data without explicit guidance. Customer segmentation, grouping customers based on their purchasing behavior, is a classic example. Anomaly detection, identifying unusual patterns in network traffic, is another application.

- Definition: Learning patterns and structures from unlabeled data.

- Data Requirements: Unlabeled datasets, which need no label annotation, although they still require cleaning and preprocessing.

- Algorithm Examples: K-means clustering, hierarchical clustering, principal component analysis (PCA).

- Limitations: Interpreting the results can be challenging; the discovered patterns may not always be meaningful or easily explainable.
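As a rough illustration of customer segmentation with k-means, the sketch below groups points without ever seeing a label. To keep the result reproducible it uses the first `k` points as initial centroids, which is a simplifying assumption; practical implementations usually pick starting points more carefully. The customer data (orders per month, average basket value) is invented.

```python
from math import dist

def kmeans(points, k, iterations=10):
    """Lloyd's algorithm, seeded with the first k points as centroids."""
    centroids = list(points[:k])
    assignments = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: dist(point, centroids[c]))
            for point in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(x) / len(members) for x in zip(*members))
    return centroids, assignments

# Hypothetical customers: (orders per month, average basket value).
customers = [(1, 10), (9, 95), (2, 12), (8, 100), (1, 15), (10, 90)]

centroids, assignments = kmeans(customers, k=2)
print(assignments)  # cluster index per customer
print(centroids)    # one centroid per discovered segment
```

The algorithm returns cluster indices, not names. Deciding that one cluster means "occasional shoppers" and the other "frequent high spenders" is left to a human, which is the interpretation challenge noted above.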

1.3 Reinforcement Learning:

Reinforcement learning involves an agent interacting with an environment, learning through trial and error. The agent receives rewards or penalties based on its actions, gradually learning an optimal strategy to maximize its cumulative reward. This is evident in game playing (e.g., AlphaGo) and robotics, where an AI learns to navigate a complex environment.

- Definition: Learning through trial and error by interacting with an environment.

- Data Requirements: Data generated through the agent's interactions with the environment.

- Algorithm Examples: Q-learning, SARSA, deep Q-networks (DQNs).

- Limitations: Requires careful design of the reward system; can be computationally expensive and slow to converge.
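The trial-and-error loop can be sketched with tabular Q-learning on a toy problem. Everything about the environment below is an assumption made for illustration: a five-cell corridor where the agent starts at cell 0 and receives a reward of 1.0 only when it reaches cell 4. The hyperparameters (`alpha`, `gamma`, `epsilon`) and the random tie-breaking rule are likewise arbitrary choices, not recommended values.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
GOAL = N_STATES - 1
ACTIONS = (0, 1)      # 0 = step left, 1 = step right

def step(state, action):
    """Environment: move one cell; reward 1.0 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _episode in range(episodes):
        state = 0
        for _step in range(100):  # cap the episode length
            left, right = q[state]
            # Explore with probability epsilon, or when the values are tied.
            if rng.random() < epsilon or left == right:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if left > right else 1
            next_state, reward = step(state, action)
            # Q-learning update: move the estimate toward
            # reward + discounted best value of the next state.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if state == GOAL:
                break
    return q

q_table = train()
# Greedy policy for the non-goal cells: 1 means "step right".
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(policy)
```

Note how much depends on the reward design: the agent is never told that moving right is good, it only discovers this because the single reward at the goal propagates backward through the Q-table over many episodes.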

2. Data: The Fuel for AI Learning

Data is the lifeblood of AI. However, the quality and nature of data significantly impact the performance and ethical implications of AI systems.

2.1 Data Bias and its Impact:

Biased datasets, reflecting societal biases, lead to AI systems perpetuating and even amplifying those biases. For instance, a facial recognition system trained primarily on images of white faces may perform poorly on individuals with darker skin tones.

- Types of Bias: Gender bias, racial bias, socioeconomic bias, geographical bias.

- Consequences of Biased AI: Unfair or discriminatory outcomes, erosion of trust, societal harm.

- Mitigation Strategies: Collecting more diverse and representative data, augmenting or reweighting underrepresented groups, applying algorithmic fairness techniques, and careful data curation and preprocessing.
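One simple way to surface the kind of disparity described above is to compute a model's accuracy separately for each group and compare the results. The sketch below assumes predictions and group membership are already available as plain lists; the data, the group names "A" and "B", and the helper `accuracy_by_group` are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

# Invented evaluation data: true labels, model predictions, group tags.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group)  # accuracy per group
print(gap)        # difference between best and worst served group
```

A large gap does not by itself explain the cause, but it signals that the overall accuracy figure is hiding unequal performance and that the training data or model deserves a closer look.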

2.2 Data Privacy and Security:

Training AI models often involves processing vast amounts of personal data, raising serious privacy and security concerns.

- Privacy-Preserving Techniques: k-anonymity, data masking, and differential privacy.

- GDPR Compliance: Systems that process personal data of individuals in the EU must comply with the General Data Protection Regulation (GDPR), and similar regulations may apply elsewhere.

- Ethical Considerations: Transparency about data usage, informed consent, data minimization.
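As an example of one of these techniques, k-anonymity requires every combination of quasi-identifier values to be shared by at least k records, so that no individual stands out. The sketch below measures the k a dataset currently achieves. The records, the field names, and the choice of which fields count as quasi-identifiers are assumptions for illustration only.

```python
from collections import Counter

def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same
    quasi-identifier values (0 for an empty dataset)."""
    counts = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(counts.values(), default=0)

# Hypothetical, already partly generalized records.
records = [
    {"age_band": "30-39", "zip_prefix": "100", "diagnosis": "asthma"},
    {"age_band": "30-39", "zip_prefix": "100", "diagnosis": "flu"},
    {"age_band": "40-49", "zip_prefix": "100", "diagnosis": "flu"},
    {"age_band": "40-49", "zip_prefix": "100", "diagnosis": "copd"},
    {"age_band": "40-49", "zip_prefix": "101", "diagnosis": "flu"},
]

k_full = k_anonymity(records, ["age_band", "zip_prefix"])
k_coarse = k_anonymity(records, ["age_band"])
print(k_full)    # one record is unique on (age_band, zip_prefix)
print(k_coarse)  # dropping zip_prefix makes every group larger
```

The comparison shows the usual trade-off: coarsening or dropping a quasi-identifier raises k, at the cost of less detailed data for training.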

3. The Black Box Problem and Explainable AI (XAI)

Many AI models, particularly deep neural networks, are often referred to as "black boxes" due to the opacity of their decision-making processes. Understanding why an AI system arrived at a particular conclusion is critical for trust and accountability.

3.1 Understanding AI Decision-Making:

The lack of transparency in AI decision-making raises concerns about fairness, accountability, and potential bias. Explainable AI (XAI) aims to address this challenge.

- Limitations of "Black Box" Models: Difficulty in debugging, identifying biases, and building trust.

- The Need for Transparency and Accountability: Ensuring fairness and avoiding unintended consequences.

- Benefits of Explainable AI: Improved understanding, increased trust, better debugging and bias detection.

3.2 Techniques for Enhancing Explainability:

Several techniques help make model predictions more interpretable.

- LIME (Local Interpretable Model-agnostic Explanations): Explains an individual prediction by fitting a simple, interpretable surrogate model around it.

- SHAP (SHapley Additive exPlanations): Attributes a prediction to its input features using Shapley values from cooperative game theory.

- Feature Importance Analysis: Identifies the most influential features in a model's decision-making.
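The idea behind Shapley-based attribution can be shown on a tiny example. The sketch below computes exact Shapley values by averaging each feature's marginal contribution over every ordering of the features, replacing "absent" features with a baseline value. This is a brute-force illustration that does not use the SHAP library, which relies on faster approximations; the toy `model`, the instance, and the all-zero baseline are assumptions.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution of each
    feature over all feature orderings (feasible only for few features)."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)          # start with every feature "absent"
        previous_output = model(current)
        for feature in order:
            current[feature] = instance[feature]   # switch the feature on
            output = model(current)
            totals[feature] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in totals]

def model(x):
    """Hypothetical model with one additive term and one interaction."""
    return 2 * x[0] + x[1] * x[2]

instance = [1, 2, 3]
baseline = [0, 0, 0]

contributions = shapley_values(model, instance, baseline)
print(contributions)
print(sum(contributions), model(instance) - model(baseline))
```

The contributions add up to the difference between the model's output for the instance and for the baseline, and the interaction term is split evenly between the two features involved. The cost grows factorially with the number of features, which is why practical tools approximate.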

4. Responsible AI Practices

Developing and deploying AI responsibly requires a commitment to ethical considerations and robust practices throughout the AI lifecycle.

4.1 Ethical Considerations in AI Development:

Ethical considerations must be integrated into every stage of AI development.

- Bias Mitigation: Actively addressing and mitigating biases in data and algorithms.

- Fairness: Ensuring equitable outcomes for all groups.

- Accountability: Establishing mechanisms for holding developers and deployers accountable.

- Transparency: Making AI systems and their decision-making processes understandable.

- Privacy: Protecting the privacy of individuals whose data is used to train and operate AI systems.

4.2 Building Responsible AI Systems:

Building responsible AI systems requires a multi-faceted approach.

- Regular Audits: Periodically assessing AI systems for bias, fairness, and adherence to ethical guidelines.

- Human Oversight: Maintaining human control and oversight of AI systems, especially in high-stakes applications.

- Ongoing Monitoring: Continuously monitoring AI systems' performance and impact.

- Continuous Improvement: Regularly updating and improving AI systems based on feedback and new data.

Conclusion:

Understanding how AI systems learn is a prerequisite for developing them responsibly. This article covered the three main learning paradigms, the role of data quality and privacy, the "black box" problem and the techniques that address it, and the practices that keep AI systems accountable. Data quality, bias mitigation, and transparency are central to building AI systems that are trustworthy and beneficial.

Featured Posts

-

Former Nypd Commissioner Bernard Kerik Hospitalized Expected To Recover Fully

May 31, 2025

Former Nypd Commissioner Bernard Kerik Hospitalized Expected To Recover Fully

May 31, 2025 -

Reeves Economic Policies Echoes Of Scargills Militancy

May 31, 2025

Reeves Economic Policies Echoes Of Scargills Militancy

May 31, 2025 -

Faizan Zaki Secures Scripps National Spelling Bee Championship

May 31, 2025

Faizan Zaki Secures Scripps National Spelling Bee Championship

May 31, 2025 -

Finding Your Good Life Practical Tips And Techniques

May 31, 2025

Finding Your Good Life Practical Tips And Techniques

May 31, 2025 -

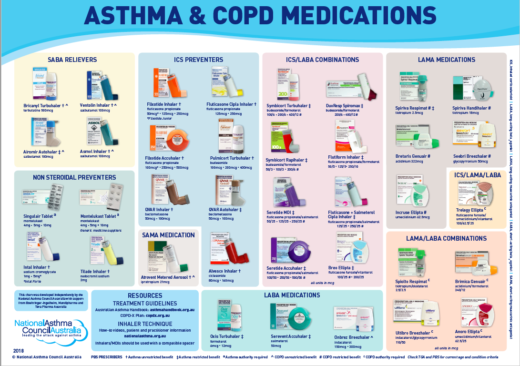

Sanofis Commitment To Respiratory Health Asthma Breakthroughs And Copd Initiatives

May 31, 2025

Sanofis Commitment To Respiratory Health Asthma Breakthroughs And Copd Initiatives

May 31, 2025