Why AI Doesn't Truly Learn And How This Impacts Its Application

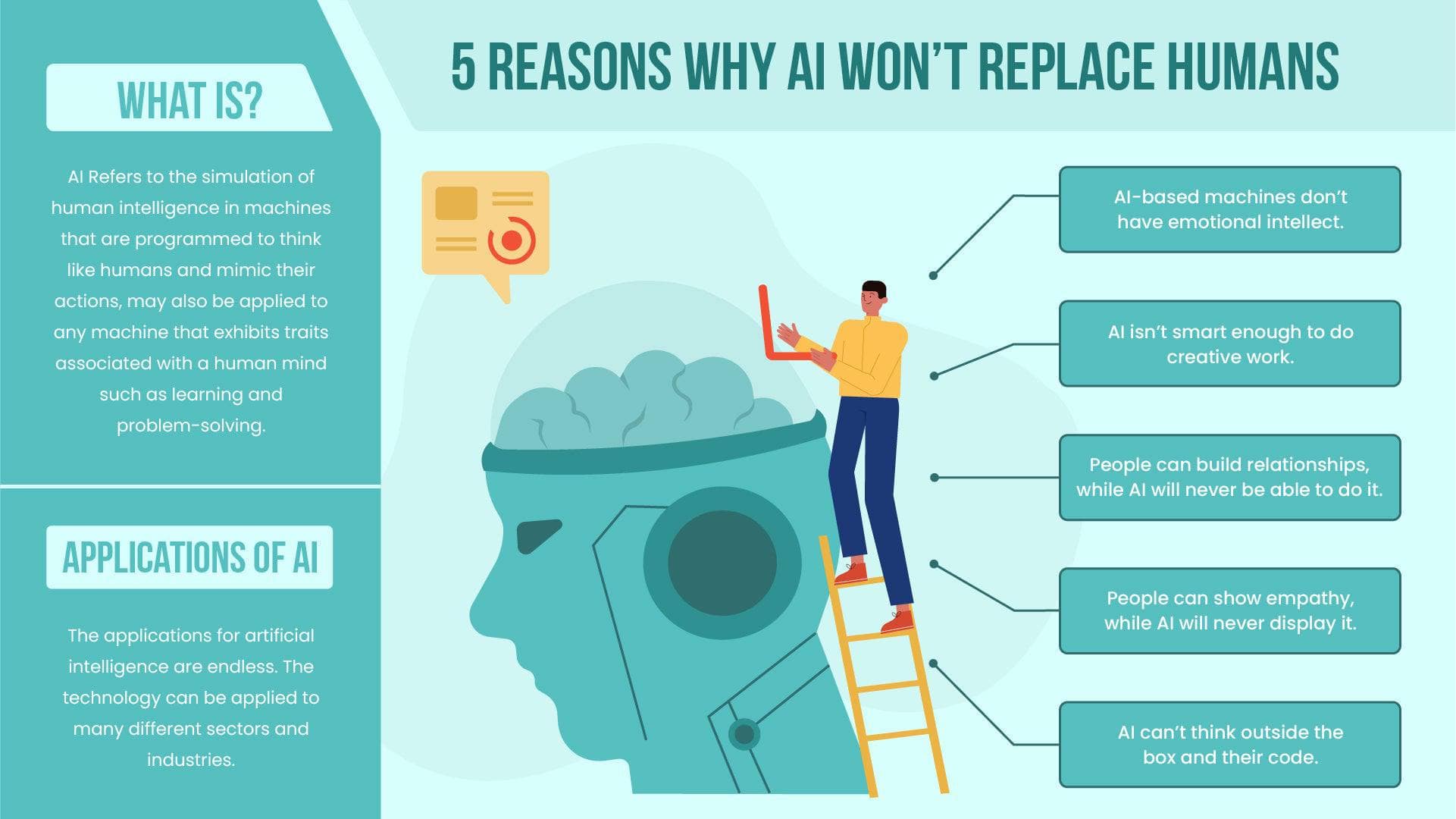

The Difference Between AI and Human Learning

The core difference between AI and human learning lies in the process itself. While AI excels at pattern recognition and prediction based on vast datasets, human learning encompasses a far richer tapestry of experience, context, intuition, and reasoning. Consider these key distinctions:

- Data vs. Experience: AI relies heavily on large datasets and algorithms for learning. Humans, on the other hand, learn through diverse experiences, incorporating contextual information and even emotions into their understanding. Machine learning, a core component of AI, thrives on data; human intelligence thrives on experience. Deep learning, a subfield of machine learning, depends even more heavily on processing vast datasets.

- Generalization vs. Specialization: AI often struggles to generalize beyond the data it was trained on. A machine learning model trained to recognize cats in one dataset might fail to recognize cats in another, slightly different dataset, for example one with different lighting or camera angles. Humans, however, can readily adapt and transfer knowledge from one situation to another. This adaptive capacity is a hallmark of human cognition that AI currently lacks.

- Reasoning and Nuance: AI often struggles with common-sense reasoning and nuanced understanding. While AI can outperform humans in specific tasks, it often lacks the contextual awareness and intuitive grasp that humans possess. This is where the limitations of artificial intelligence become apparent when compared to human intelligence.
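The generalization point above can be made concrete with a toy experiment. The following is a minimal sketch, not a real vision model: it assumes a single numeric feature, two synthetic classes, and a hypothetical `shift` parameter that stands in for a change in data-collection conditions. All function names are illustrative.

```python
import random

def make_data(rng, n_per_class, shift=0.0):
    """Return (feature, label) pairs; class 0 is centred at 0 and class 1 at 4.

    `shift` moves every feature by a constant, standing in for a change in
    data-collection conditions (a hypothetical "different camera").
    """
    data = []
    for _ in range(n_per_class):
        data.append((rng.gauss(0.0, 1.0) + shift, 0))
        data.append((rng.gauss(4.0, 1.0) + shift, 1))
    return data

def fit_threshold(data):
    """Learn a cut-off halfway between the two class means."""
    xs0 = [x for x, y in data if y == 0]
    xs1 = [x for x, y in data if y == 1]
    return (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2

def accuracy(threshold, data):
    """Fraction of points whose side of the threshold matches their label."""
    correct = sum(1 for x, y in data if (x > threshold) == (y == 1))
    return correct / len(data)

rng = random.Random(0)  # fixed seed so the run is repeatable
train = make_data(rng, 500)
test_same = make_data(rng, 500)                # same distribution as training
test_shifted = make_data(rng, 500, shift=3.0)  # same classes, shifted features

threshold = fit_threshold(train)
acc_same = accuracy(threshold, test_same)
acc_shifted = accuracy(threshold, test_shifted)
print(f"same distribution:    {acc_same:.2f}")
print(f"shifted distribution: {acc_shifted:.2f}")
```

The learned threshold is never updated, so once the features move it misclassifies much of class 0 even though the two classes are exactly as separable as before. A person told "the camera is brighter now" would adjust; this model cannot without new training data.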

The Limitations of Current AI Learning Methods

Despite impressive advancements in machine learning and deep learning, current AI learning methods face significant limitations:

- Overfitting: AI models can become overly specialized to their training data, performing exceptionally well on that data but poorly on unseen data. Overfitting severely restricts their ability to generalize and apply knowledge to new situations.

- Data Bias: AI systems are only as good as the data they are trained on. Biased training data tends to produce biased AI outputs, perpetuating and even amplifying existing societal inequalities. This algorithmic bias poses a considerable ethical challenge.

- Lack of Explainability: A major hurdle in trusting and deploying AI is the "black box" nature of many models. It is often difficult, if not impossible, to understand why an AI model arrives at a particular decision. This lack of explainability, the problem that Explainable AI (XAI) sets out to address, undermines trust and accountability. Improving transparency and model interpretability remains an active area of research.
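Overfitting, the first limitation listed above, is easy to reproduce on synthetic data. The sketch below assumes a one-feature dataset with 20% of labels flipped at random, and compares a model that memorizes the training set (1-nearest-neighbour) with a simple threshold rule. The data, the noise level, and the function names are all illustrative assumptions.

```python
import random

def make_noisy_data(rng, n, noise=0.2):
    """Feature x ~ N(0, 1); the true label is x > 0, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        label = x > 0
        if rng.random() < noise:
            label = not label
        data.append((x, label))
    return data

def memorizer_predict(train, x):
    """1-nearest-neighbour: copy the label of the closest training point."""
    return min(train, key=lambda point: abs(point[0] - x))[1]

def fit_threshold(train):
    """Simple model: a single cut-off halfway between the two class means."""
    pos = [x for x, y in train if y]
    neg = [x for x, y in train if not y]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(predict, data):
    """Fraction of points for which the prediction matches the (noisy) label."""
    return sum(1 for x, y in data if predict(x) == y) / len(data)

rng = random.Random(0)  # fixed seed so the run is repeatable
train = make_noisy_data(rng, 300)
test = make_noisy_data(rng, 2000)

threshold = fit_threshold(train)
memorizer = lambda x: memorizer_predict(train, x)
simple = lambda x: x > threshold

mem_train, mem_test = accuracy(memorizer, train), accuracy(memorizer, test)
simple_train, simple_test = accuracy(simple, train), accuracy(simple, test)
print(f"memorizer: train={mem_train:.2f} test={mem_test:.2f}")
print(f"simple:    train={simple_train:.2f} test={simple_test:.2f}")
```

The memorizer scores perfectly on its own training data because every point is its own nearest neighbour, noise included. On fresh data it does worse than the simple rule, since it has learned the noise as if it were signal. A large gap between training and test accuracy is the usual symptom to look for.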

The Impact of AI's "Non-Learning" on its Applications

The limitations of current AI learning methods have real-world consequences across numerous sectors:

- Healthcare: Biased or insufficient training data can lead to misdiagnosis and flawed treatment recommendations by AI-powered diagnostic tools.

- Finance: Algorithmic trading errors, stemming from AI's inability to fully grasp market nuances, can result in significant financial losses.

- Autonomous Vehicles: AI's struggle with handling unexpected situations can lead to accidents, hindering the widespread adoption of self-driving cars.

- Criminal Justice: Biased AI tools used in sentencing or risk assessment can perpetuate unfair and discriminatory outcomes, raising significant ethical concerns. The use of AI in such sensitive areas requires careful consideration of AI ethics and AI safety.

The Need for More Robust and Ethical AI Development

Addressing the limitations of current AI requires a concerted effort to develop more robust and ethical AI systems. This involves:

- Investing in research on Explainable AI (XAI): Developing more transparent and interpretable AI models is crucial for building trust and accountability.

- Developing methods for detecting and mitigating bias in data: Careful data curation and the development of bias-mitigation techniques are essential for ensuring fair and equitable AI applications.

- Establishing ethical guidelines for AI development and deployment: Clear guidelines and regulations are necessary to govern the responsible development and use of AI technologies. AI governance is critical.

- Promoting collaboration between AI researchers, policymakers, and ethicists: A multidisciplinary approach is essential for navigating the complex ethical and societal implications of AI. AI regulation should be informed by experts from various fields.
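As one illustration of what "detecting bias" can mean in practice, the sketch below computes per-group selection rates and the gap between them, a measure commonly called demographic parity difference. The article does not name a specific metric, so this choice is an assumption, and it is only one of several fairness measures. The decision records are invented for the example.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Map each group to the fraction of its members that received a positive decision."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(decisions):
    """Gap between the highest and lowest group selection rates (0 means equal rates)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs: (group, approved) pairs, invented for illustration.
decisions = (
    [("group_a", True)] * 12 + [("group_a", False)] * 8
    + [("group_b", True)] * 6 + [("group_b", False)] * 14
)

rates = selection_rates(decisions)
gap = demographic_parity_difference(decisions)
print(rates)
print(f"demographic parity difference: {gap:.2f}")
```

A gap near zero means the groups receive positive decisions at similar rates. A large gap does not prove unfairness on its own, but it flags a model or dataset for closer review, which is where the multidisciplinary judgment described above comes in.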

Conclusion: Rethinking AI's "Learning" Capabilities and Their Applications

While AI performs impressively on specific tasks, its learning differs fundamentally from human learning. Current AI approaches suffer from overfitting, data bias, and a lack of explainability, with significant consequences in healthcare, finance, autonomous vehicles, and criminal justice. This "non-learning" nature affects AI's trustworthiness and reliability, and it calls for a critical re-evaluation of both its capabilities and the ethical implications of its deployment. We must move towards responsible AI development that aims for true AI learning, or at least a much closer approximation, to harness AI's potential while mitigating its risks. To learn more about responsible AI development and the ethical considerations in AI deployment, explore resources on AI ethics and AI governance. Understanding the limitations of AI learning is crucial for building a future where AI benefits humanity safely and equitably.

Featured Posts

-

Star Trek Strange New Worlds Season 3 Teaser Breakdown Plot Points And Speculation

May 31, 2025

Star Trek Strange New Worlds Season 3 Teaser Breakdown Plot Points And Speculation

May 31, 2025 -

Gestion Du Recul Du Trait De Cote A Saint Jean De Luz Ameliorer La Protection Du Littoral

May 31, 2025

Gestion Du Recul Du Trait De Cote A Saint Jean De Luz Ameliorer La Protection Du Littoral

May 31, 2025 -

The Dangerous Impacts Of Climate Whiplash A Global Urban Crisis

May 31, 2025

The Dangerous Impacts Of Climate Whiplash A Global Urban Crisis

May 31, 2025 -

The Fentanyl Report On Princes Death March 26 2016

May 31, 2025

The Fentanyl Report On Princes Death March 26 2016

May 31, 2025 -

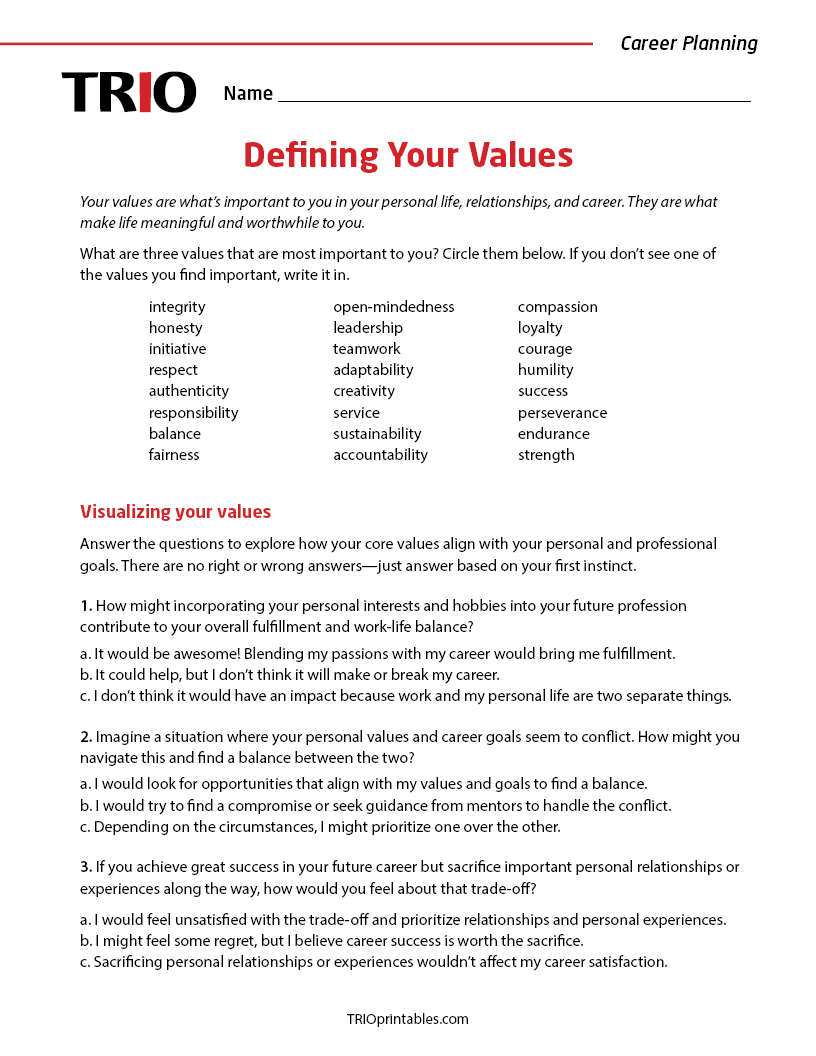

Understanding The Good Life Defining Your Values And Priorities

May 31, 2025

Understanding The Good Life Defining Your Values And Priorities

May 31, 2025