Revolutionizing Voice Assistant Development: OpenAI's New Tools

Enhanced Natural Language Understanding (NLU) with OpenAI's Models

OpenAI's large language models make voice assistants better at understanding what users actually mean. Rather than matching keywords, they interpret the intent and context behind a request, which allows for more natural and helpful interactions.

- Improved intent recognition leading to more accurate responses: OpenAI's models can infer what a user is asking for even when the phrasing is ambiguous or colloquial, which reduces misunderstandings. For example, rather than simply spotting the keyword "weather," the model can interpret a request like "What's the chance of rain this afternoon?" as a question about the probability of precipitation within a specific time window.

- Enhanced contextual understanding for more natural conversations: When the application passes earlier turns of the conversation back to the model with each request, the model can use that context to interpret follow-up questions. This makes the exchange more fluid and human-like: the assistant can follow a multi-turn dialogue and give answers that are relevant to what has already been said.

- Support for multiple languages and dialects: Because OpenAI's models are trained on large multilingual datasets, voice assistants built on them can understand and respond in many languages and dialects, although accuracy varies from language to language. This expands the reach and accessibility of voice assistant technology.

- Reduced reliance on keyword matching, enabling more flexible interactions: Unlike older systems that depend heavily on keyword matching, OpenAI's models capture the semantic meaning of words and phrases. Users can therefore express themselves freely instead of memorizing rigid commands.

- Examples of specific OpenAI models (e.g., GPT-3, Whisper): Language models in the GPT family, such as GPT-3 and its successors, provide the natural language processing needed to understand user requests. Whisper, OpenAI's speech-recognition model, produces accurate transcriptions even with background noise, providing a solid foundation for voice input.
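The models do not retain memory between API calls, so keeping track of earlier turns is the application's job. The following is a minimal sketch assuming the official `openai` Python package (v1.x) and a placeholder model name, `gpt-4o-mini`. The `Conversation` class and its `max_turns` cap are hypothetical illustrations, not part of any OpenAI library.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical helper that keeps a rolling multi-turn history in chat-message format."""

    system_prompt: str
    max_turns: int = 10  # assumed cap: keep roughly the last N user/assistant pairs
    history: list = field(default_factory=list)

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})
        self._trim()

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})
        self._trim()

    def _trim(self) -> None:
        # Keep at most 2 * max_turns messages so requests stay within the context window.
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            del self.history[:excess]

    def messages(self) -> list:
        # The system prompt is always sent first, followed by the retained history.
        return [{"role": "system", "content": self.system_prompt}] + self.history


def reply(conversation: Conversation, user_text: str, model: str = "gpt-4o-mini") -> str:
    """Send the full history so the model can resolve follow-ups like "And tomorrow?"."""
    from openai import OpenAI  # imported lazily so the history logic works without the SDK

    conversation.add_user(user_text)
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(model=model, messages=conversation.messages())
    answer = response.choices[0].message.content
    conversation.add_assistant(answer)
    return answer
```

With a small `max_turns`, older messages are dropped first, so the most recent exchange, which a follow-up such as "And tomorrow?" depends on, is always kept.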

Building More Engaging and Personalized Voice Interactions

OpenAI's technology is instrumental in creating personalized voice assistant experiences tailored to individual users. By leveraging user data and machine learning, developers can build assistants that adapt and evolve over time.

- Leveraging user data to personalize responses and recommendations: When the application supplies relevant user data, such as past queries or stated preferences, OpenAI's models can tailor their responses and recommendations to it. For example, the assistant could surface information related to topics the user has asked about before.

- Using machine learning to adapt to user preferences and behavior: Machine learning algorithms learn from user interactions, continuously improving the assistant's ability to understand and respond to individual needs. This leads to a more tailored and satisfying experience.

- Creating more engaging conversational flows that feel natural and human-like: OpenAI's models enable the creation of more natural and engaging conversations. The assistant can use humor, empathy, and personality to create a more enjoyable user experience.

- Integration with other OpenAI tools for richer user experiences (e.g., DALL-E for image generation): Combining OpenAI's tools, such as DALL-E for image generation, makes it possible to build multimodal voice assistants. Such an assistant can not only understand and respond to voice commands but also generate images, text, and other media, making interactions more dynamic and immersive.
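A simple way to apply stored preferences is prompt-level personalization. With this approach, the application writes what it knows about the user into the system message sent with each request. The sketch below assumes a hypothetical `UserProfile` record that the app stores itself, with the user's consent. It does not train or fine-tune a model, which is a separate and heavier technique.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical per-user record kept by the application, not by the model."""

    name: str
    units: str = "metric"
    interests: list = field(default_factory=list)
    recent_queries: list = field(default_factory=list)


def build_system_prompt(profile: UserProfile, max_recent: int = 3) -> str:
    """Turn stored preferences into instructions the language model sees on every turn."""
    lines = [
        "You are a concise, friendly voice assistant.",
        f"Address the user as {profile.name}.",
        f"Use {profile.units} units.",
    ]
    if profile.interests:
        lines.append("The user is interested in: " + ", ".join(profile.interests) + ".")
    if profile.recent_queries:
        # Only the most recent queries are included, to keep the prompt short.
        recent = profile.recent_queries[-max_recent:]
        lines.append("Recent requests, for context: " + "; ".join(recent) + ".")
    return "\n".join(lines)
```

The resulting string can be used as the system message of a chat request. Because the profile lives in the application, the user can inspect or delete it independently of the model.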

Simplifying Voice Assistant Development with OpenAI APIs

OpenAI's APIs significantly simplify the process of voice assistant development. They provide developers with easy-to-use tools and resources, reducing development time and costs.

- Reduced development time and costs: The APIs offer pre-trained models and readily available functions, significantly reducing the need for custom development. This translates to faster development cycles and lower overall costs.

- Easy integration with existing platforms and applications: The APIs are exposed over HTTP and have official client libraries (for example, for Python and JavaScript/TypeScript), so developers can call them from existing back ends and applications without rebuilding their projects.

- Comprehensive documentation and support: OpenAI publishes API reference documentation, guides, and examples that help developers integrate and use its APIs, simplifying the development process.

- Examples of specific OpenAI APIs used for voice assistant development: The OpenAI API covers each stage of a voice assistant. The Chat Completions endpoint handles language understanding and response generation, the audio transcription endpoint (Whisper) converts speech to text, and the audio speech endpoint converts text to speech. Together they form a complete toolkit for voice assistant development.

- Scalability and cost-effectiveness: Because OpenAI's APIs are hosted services, developers do not have to provision model-serving infrastructure as the number of users and requests grows, although per-account rate limits apply. Usage-based pricing can also be cost-effective, especially given the reduced development time and resources required.
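The endpoints above can be chained into a single voice turn: speech in, text reasoning, speech out. This sketch assumes the `openai` Python package (v1.x). The model names `whisper-1` and `tts-1` and the voice `alloy` are examples that may change, and `gpt-4o-mini` is a placeholder. The pipeline takes its three steps as plain functions so it can be tested without network access. `with_backoff` is a hypothetical helper that shows one common way to absorb rate-limit errors as traffic grows.

```python
import random
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def with_backoff(call: Callable[[], T], retry_on: tuple, retries: int = 4,
                 base_delay: float = 0.5, sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a call on transient errors, doubling the wait each attempt and adding jitter."""
    for attempt in range(retries + 1):
        try:
            return call()
        except retry_on:
            if attempt == retries:
                raise  # out of retries: surface the original error
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise RuntimeError("retries must be non-negative")


def voice_turn(audio_path: str,
               transcribe: Callable[[str], str],
               respond: Callable[[str], str],
               synthesize: Callable[[str], bytes]) -> Tuple[str, str, bytes]:
    """One assistant turn: transcribe the audio, generate a reply, and synthesize speech."""
    text = transcribe(audio_path)
    answer = respond(text)
    return text, answer, synthesize(answer)


def openai_backends(model: str = "gpt-4o-mini"):
    """Concrete steps backed by the OpenAI Python SDK; model names are placeholders."""
    from openai import OpenAI, RateLimitError  # lazy import: only needed for live calls

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def transcribe(path: str) -> str:
        with open(path, "rb") as audio:
            return client.audio.transcriptions.create(model="whisper-1", file=audio).text

    def respond(text: str) -> str:
        result = with_backoff(
            lambda: client.chat.completions.create(
                model=model, messages=[{"role": "user", "content": text}]),
            retry_on=(RateLimitError,))
        return result.choices[0].message.content

    def synthesize(text: str) -> bytes:
        return client.audio.speech.create(model="tts-1", voice="alloy", input=text).content

    return transcribe, respond, synthesize
```

In a live setting, `voice_turn("question.wav", *openai_backends())` would return the transcript, the reply text, and the reply audio as bytes. Injecting the steps also makes it easy to substitute a different speech or language model.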

Addressing Ethical Considerations in Voice Assistant Development

While OpenAI's tools offer powerful capabilities, it's crucial to address the ethical implications of their use in voice assistants. Responsible development requires proactive consideration of potential issues.

- Bias mitigation in training data: Addressing biases present in training data is critical to ensure fairness and prevent discriminatory outcomes. OpenAI is actively working on mitigating bias in its models.

- Privacy concerns and data security: Protecting user privacy and ensuring data security is paramount. Robust security measures and transparent data handling practices are essential.

- Responsible use of AI and avoiding potential misuse: Developers must be mindful of the potential for misuse and take steps to prevent harmful applications of the technology.

- Transparency and user control: Users should have transparency into how their data is used and should have control over their privacy settings.
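Data minimization and user control can be enforced in code as well as in policy. The following is a minimal sketch, assuming that transcripts are stored only with the user's consent and that simple regular expressions are acceptable as a first pass. The patterns catch only common e-mail and phone-number formats and are not a complete PII detector. `redact` and `store_transcript` are hypothetical helper names.

```python
import re

# Deliberately simple patterns; production PII detection needs much broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(transcript: str) -> str:
    """Mask e-mail addresses and phone-number-like digit runs before a transcript is stored."""
    transcript = EMAIL.sub("[EMAIL]", transcript)
    return PHONE.sub("[PHONE]", transcript)


def store_transcript(transcript: str, user_opted_in: bool, log: list) -> bool:
    """Keep a redacted transcript only if the user has opted in to saving history."""
    if not user_opted_in:
        return False  # respect the user's privacy setting: nothing is kept
    log.append(redact(transcript))
    return True
```

Redacting before storage, rather than at display time, means raw personal details never reach logs or analytics in the first place.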

Conclusion

OpenAI's innovative tools are dramatically reshaping the landscape of voice assistant development, offering developers powerful new capabilities to create more intelligent, personalized, and engaging voice experiences. The advancements in NLU, personalization, and accessible APIs are key drivers of this revolution. However, ethical considerations remain paramount and must be addressed proactively.

Call to Action: Ready to revolutionize your own voice assistant development? Explore OpenAI's suite of tools and APIs today and unlock the potential of truly intelligent voice interaction. Learn more about using OpenAI for voice assistant development and join the future of conversational AI!

Featured Posts

-

Dechiffrage Economique La Resistance De L Euro Malgre Les Pressions

May 11, 2025

Dechiffrage Economique La Resistance De L Euro Malgre Les Pressions

May 11, 2025 -

Usmnt Weekend Roundup Dests Return And Pulisics Heroics

May 11, 2025

Usmnt Weekend Roundup Dests Return And Pulisics Heroics

May 11, 2025 -

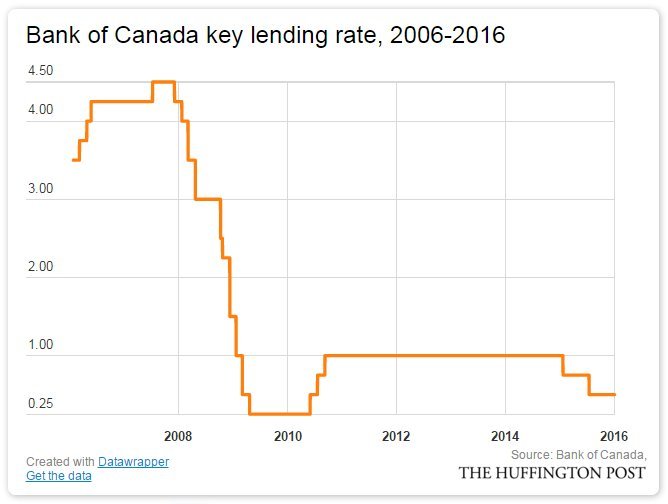

Bank Of Canada Rate Cuts Economists Predict Renewed Interest Rate Reductions Amidst Tariff Job Losses

May 11, 2025

Bank Of Canada Rate Cuts Economists Predict Renewed Interest Rate Reductions Amidst Tariff Job Losses

May 11, 2025 -

Is Payton Pritchard The Next Celtics Sixth Man Of The Year

May 11, 2025

Is Payton Pritchard The Next Celtics Sixth Man Of The Year

May 11, 2025 -

Potential Changes To Migrant Detention Review Under Trump

May 11, 2025

Potential Changes To Migrant Detention Review Under Trump

May 11, 2025