The Surveillance Potential Of AI Therapy: A Threat To Civil Liberties?

Data Collection and Privacy Concerns in AI Therapy

The allure of AI therapy lies in its ability to provide personalized and accessible mental healthcare. However, this accessibility comes at a cost: the extensive collection of sensitive personal data. AI therapy platforms gather a vast amount of information, raising serious concerns about data privacy and security.

The Extensive Data Collected by AI Therapy Platforms

AI therapy apps and platforms collect various data points to personalize treatment and improve their algorithms. This includes:

- Voice recordings: Detailed audio recordings of therapy sessions, capturing nuances of tone, emotion, and speech patterns.

- Text messages: All written communication between the user and the AI, including personal thoughts, feelings, and experiences.

- Behavioral patterns: Data on user engagement, frequency of use, and response times, revealing patterns of behavior and emotional state.

- Personal information: Demographic details, medical history (often self-reported), and other personal identifiers.

This data is used to train algorithms, to improve the AI's therapeutic capabilities, and potentially to support research. However, such sensitive information is vulnerable to breaches and unauthorized access, and a lack of transparency about how platforms use and store it compounds the risk.

Potential for Misuse and Abuse of AI Therapy Data

The wealth of personal data collected by AI therapy platforms creates a potential for misuse and abuse, posing a serious threat to individual civil liberties.

Surveillance by Employers or Insurers

The data gathered during AI therapy sessions could be accessed and utilized by third parties for purposes far removed from therapeutic care. Employers, for instance, might use this information to discriminate against employees perceived as having mental health issues. Similarly, insurance companies could deny coverage or raise premiums based on the data revealed through AI therapy interactions.

- Examples of potential misuse: Denial of employment opportunities, increased insurance premiums, exclusion from certain benefits.

- Lack of legal protection: Current legal frameworks may not adequately protect individuals from this type of discrimination based on data from AI therapy platforms.

- Ethical implications: The ethical implications of using AI therapy data for purposes beyond treatment are profound and warrant serious consideration.

This is the logic of "surveillance capitalism," in which personal data is commodified and used for profit or control. In the context of AI therapy, it calls for close scrutiny of data misuse, discrimination, and the conduct of employers and insurers.

Regulatory Gaps and the Need for Stronger Protections

A significant challenge in addressing the civil liberties concerns surrounding AI therapy is the absence of comprehensive regulations.

The Absence of Comprehensive Regulations

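Existing data protection laws like GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act) offer some protection, but their applicability and effectiveness in the context of AI therapy are limited. HIPAA, for example, applies to covered entities such as healthcare providers and insurers and to their business associates, so a consumer app that operates outside that relationship may not be bound by it. These laws were not designed to address the unique challenges posed by AI's capabilities and the scale of data collection.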

- Limitations of existing laws: These laws may not fully address the complexities of AI algorithms, data anonymization techniques, and the potential for data misuse by third parties.

- Need for specific regulations: Tailored regulations specific to AI therapy are needed to establish clear guidelines for data collection, storage, use, and security.

- Role of regulatory bodies: Government agencies and professional organizations must play a crucial role in developing and enforcing these regulations. This requires collaboration between policymakers, technology developers, and mental health professionals.

Stronger data protection laws, AI-specific regulation, and enforceable ethical guidelines are all urgently needed in this area.

Balancing Innovation with Privacy in AI Therapy

The goal is not to stifle innovation in AI therapy but to ensure that its benefits are realized without sacrificing fundamental civil liberties.

Exploring Solutions for Enhanced Privacy and Security

Several strategies can mitigate the risks associated with AI therapy while preserving its therapeutic potential:

- Data anonymization and encryption: Removing or masking identifiers and encrypting data in transit and at rest can protect patients while still allowing the data to be used for research and algorithm improvement.

- Informed consent and transparency: Users must be fully informed about what data is collected, how it is used, and who has access to it. Transparency in data handling practices is paramount.

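- Privacy-preserving AI techniques: Developing AI algorithms that respect privacy by design is crucial. Approaches such as on-device processing, federated learning, and differential privacy aim to limit how much raw user data ever leaves the user's control.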

- Independent audits: Regular independent audits of AI therapy platforms can help ensure compliance with privacy standards and identify potential vulnerabilities.
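As a rough illustration of the anonymization step listed above, the following Python sketch replaces a user identifier with a keyed hash and masks obvious contact details in a transcript before it is passed on for research. The function names, the record layout, and the regular expressions are all assumptions made for this example, not any platform's actual pipeline. Note that this is pseudonymization rather than full anonymization: free-text therapy transcripts can still identify a person through context.

```python
import hashlib
import hmac
import re

# Hypothetical, deliberately simple patterns; real transcripts need far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash (HMAC-SHA256).

    Without the secret key, the original ID cannot be recovered or
    confirmed by simply hashing guesses.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_transcript(text: str) -> str:
    """Mask email addresses and phone-like numbers in free text."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_for_research(record: dict, secret_key: bytes) -> dict:
    """Build a research copy of a record (assumed keys: 'user_id', 'text')."""
    return {
        "user": pseudonymize_id(record["user_id"], secret_key),
        "text": redact_transcript(record["text"]),
    }


if __name__ == "__main__":
    key = b"example-key-kept-outside-the-dataset"  # assumption: managed separately
    sample = {
        "user_id": "alice",
        "text": "Email me at alice@example.com or call +1 555-123-4567.",
    }
    print(prepare_for_research(sample, key))
```

The key must be stored separately from the research dataset; anyone who holds both can link pseudonyms back to users. Encryption in transit and at rest would be handled by a dedicated, well-reviewed library rather than hand-written code.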

Together, these measures (stronger data security, anonymization, encryption, informed consent, and privacy-preserving AI) are essential steps towards a more secure and ethical future for AI therapy.

Conclusion

The extensive data collection inherent in AI therapy, the potential for misuse of this data, and the current absence of robust regulatory frameworks create significant threats to individual civil liberties. While AI therapy holds immense promise for improving access to mental healthcare, realizing its benefits without compromising our fundamental rights requires proactive and comprehensive action.

That action starts with vigilance. Let's demand responsible development and regulation of AI therapy, advocating for stronger data protection laws and greater transparency from AI therapy providers, so that technological advancement and individual rights can coexist and AI therapy improves mental health without eroding the privacy and freedom of its users.

Featured Posts

-

Predicting The Top Baby Names For 2024 Classic And Modern Picks

May 15, 2025

Predicting The Top Baby Names For 2024 Classic And Modern Picks

May 15, 2025 -

E Bay Faces Legal Action Over Banned Chemicals Section 230 At Issue

May 15, 2025

E Bay Faces Legal Action Over Banned Chemicals Section 230 At Issue

May 15, 2025 -

Jimmy Butler Heat Tensions Hall Of Famer Weighs In On Jersey Number Dispute

May 15, 2025

Jimmy Butler Heat Tensions Hall Of Famer Weighs In On Jersey Number Dispute

May 15, 2025 -

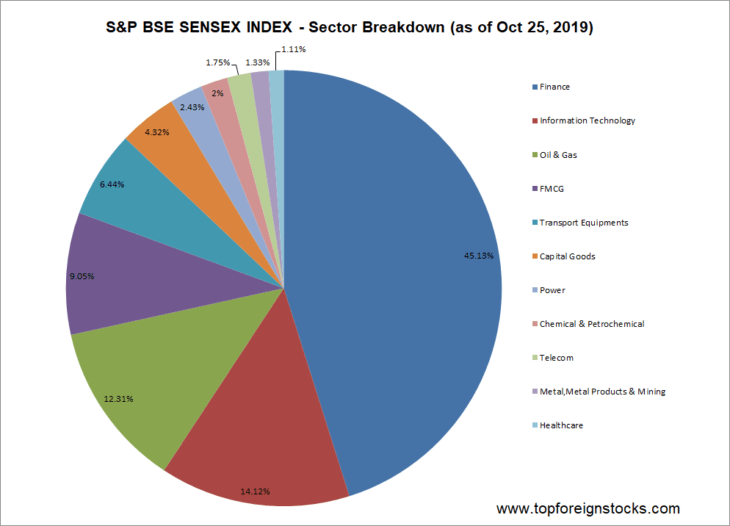

Sensex Soars Top Bse Stocks Gaining Over 10

May 15, 2025

Sensex Soars Top Bse Stocks Gaining Over 10

May 15, 2025 -

Los Angeles Dodgers Offseason Review Key Moves And Outlook

May 15, 2025

Los Angeles Dodgers Offseason Review Key Moves And Outlook

May 15, 2025

Latest Posts

-

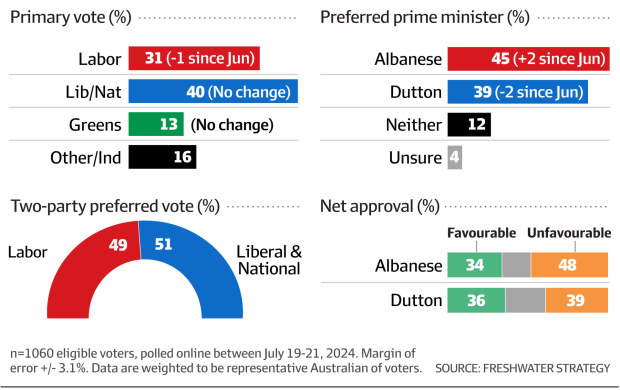

Australias Election Contrasting Visions From Albanese And Dutton

May 15, 2025

Australias Election Contrasting Visions From Albanese And Dutton

May 15, 2025 -

Albanese And Dutton Face Off Analyzing Their Key Policy Proposals

May 15, 2025

Albanese And Dutton Face Off Analyzing Their Key Policy Proposals

May 15, 2025 -

Albanese Vs Dutton A Pivotal Election Pitch

May 15, 2025

Albanese Vs Dutton A Pivotal Election Pitch

May 15, 2025 -

Australias Election A Head To Head Comparison Of Albanese And Duttons Platforms

May 15, 2025

Australias Election A Head To Head Comparison Of Albanese And Duttons Platforms

May 15, 2025 -

Election 2024 Dissecting The Key Policy Pitches Of Albanese And Dutton

May 15, 2025

Election 2024 Dissecting The Key Policy Pitches Of Albanese And Dutton

May 15, 2025