I/O or io: Which Approach Will Define the Future of AI? (Google vs. OpenAI)


1. Introduction: The I/O vs. io Debate in AI's Future

Rapid advances in AI depend on processing massive datasets efficiently. That processing hinges on how effectively data is ingested, transformed, and output, which is fundamentally a matter of I/O (input/output) operations. The "I/O or io" debate, as framed here, contrasts two philosophies. Google emphasizes optimizing I/O across its massive data infrastructure. OpenAI concentrates on refining the AI models themselves, which shapes how data interacts with the system (the "io" of this article's title). This article examines these contrasting strategies and their potential influence on the trajectory of AI development.

2. Google's Approach: A Data-Centric I/O Model

H2: Google's Massive Data Infrastructure and I/O Optimization:

Google's approach to AI is deeply rooted in its data infrastructure, one of the largest in the world. The company holds an enormous repository of information, and its strategy centers on building systems that can efficiently store, process, and retrieve that data. This requires a robust I/O system.

- Large-scale data centers and cloud infrastructure: Google's global network of data centers provides the backbone for its AI operations, handling immense data volumes with minimal latency.

- Advanced algorithms for data ingestion, processing, and retrieval: Sophisticated algorithms are crucial for handling the sheer scale of data, ensuring that information can be accessed and processed quickly and effectively.

- Emphasis on scalability and low-latency I/O: Google prioritizes systems that can scale to handle exponentially growing datasets without compromising speed. Low-latency I/O is paramount for real-time applications.

- Examples of Google's I/O-intensive AI applications: Google Search, Google Translate, and other services rely on efficient I/O to return results quickly. Their responsiveness at global scale depends directly on the underlying I/O architecture.
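Scaling to ever-larger datasets usually means spreading the data across many machines. The sketch below illustrates one generic technique for doing that: assigning records to shards with a stable hash so that placement is deterministic. It is not a description of Google's internal systems, and the names `shard_for` and `partition` are hypothetical.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a record key to a shard index with a stable hash.

    hashlib is used instead of Python's built-in hash(), which is salted
    per process for strings and would place the same key differently
    on different machines or after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Treat the first 8 bytes as an unsigned integer, then reduce modulo the shard count.
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(keys, num_shards: int) -> dict:
    """Group keys by the shard (a hypothetical storage node) that would own them."""
    shards = {i: [] for i in range(num_shards)}
    for key in keys:
        shards[shard_for(key, num_shards)].append(key)
    return shards


if __name__ == "__main__":
    keys = [f"doc-{i}" for i in range(10_000)]
    sizes = {i: len(v) for i, v in partition(keys, num_shards=8).items()}
    # With a well-mixed hash, each shard holds roughly 10_000 / 8 = 1_250 keys.
    print(sizes)
```

This approach has one design caveat. With plain modulo placement, changing `num_shards` moves most keys to different shards. Large systems often use consistent hashing or range partitioning to limit that reshuffling.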

H2: TensorFlow and the I/O Pipeline:

TensorFlow, Google's open-source machine learning framework, is integral to this I/O strategy. Its tf.data API is designed to manage the complexities of moving data through the AI pipeline, from storage to the model.

- Efficient data loading and preprocessing capabilities: TensorFlow provides tools to efficiently load, clean, and prepare data for model training, reducing I/O bottlenecks.

- Integration with distributed computing: TensorFlow supports distributed training (for example, through its tf.distribute strategies), allowing massive datasets to be processed in parallel across multiple devices and machines.

- Support for various data formats and storage systems: It supports a wide range of data formats and can interface with various storage systems, increasing flexibility and efficiency.

- Optimizations for minimizing I/O bottlenecks: Techniques such as prefetching, parallel data transformation, and caching let input processing overlap with model computation, so hardware spends less time idle waiting for data.
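Prefetching is the core idea behind several of these optimizations. While the model works on one batch, the next batches are already being loaded. The sketch below shows that overlap in plain Python with a background thread and a bounded queue. It is not TensorFlow's implementation. In TensorFlow itself, the corresponding tool is `tf.data.Dataset.prefetch`, typically called with `tf.data.AUTOTUNE`. The functions `slow_load` and `train_step` are hypothetical stand-ins for disk reads and model computation.

```python
import queue
import threading
import time


def prefetch(iterable, buffer_size=4):
    """Yield items from `iterable`, producing them on a background thread.

    While the consumer processes one item, the producer thread keeps loading
    up to `buffer_size` items ahead, so loading and computation overlap.
    Minimal sketch: errors raised by the producer are not propagated to the
    consumer, and an abandoned consumer leaves the daemon thread blocked.
    """
    buf = queue.Queue(maxsize=buffer_size)
    sentinel = object()  # marks the end of the stream

    def producer():
        try:
            for item in iterable:
                buf.put(item)  # blocks while the buffer is full
        finally:
            buf.put(sentinel)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is sentinel:
            return
        yield item


def slow_load(n, delay=0.01):
    """Simulated I/O: each record takes `delay` seconds to 'read'."""
    for i in range(n):
        time.sleep(delay)
        yield i


def train_step(x, delay=0.01):
    """Simulated compute: each step takes `delay` seconds."""
    time.sleep(delay)
    return x * 2


if __name__ == "__main__":
    start = time.perf_counter()
    serial = [train_step(x) for x in slow_load(50)]
    t_serial = time.perf_counter() - start

    start = time.perf_counter()
    overlapped = [train_step(x) for x in prefetch(slow_load(50))]
    t_overlap = time.perf_counter() - start

    assert serial == overlapped  # same results, produced with overlapping I/O
    print(f"serial: {t_serial:.2f}s, with prefetch: {t_overlap:.2f}s")
```

Because loading and computing take similar time in this toy setup, overlapping them should roughly halve the total wall-clock time. Real gains depend on which side is the bottleneck.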

H2: Limitations of Google's I/O-centric Strategy:

While Google's data-centric approach has yielded impressive results, it's not without limitations.

- Data bias and fairness concerns: The sheer scale of data can amplify existing biases, leading to unfair or discriminatory outcomes from AI systems.

- Computational costs associated with processing massive datasets: Storing, moving, and processing data at this scale consumes substantial energy, hardware, and money.

- Dependence on infrastructure and scalability challenges: Google's approach relies heavily on its vast infrastructure; maintaining and scaling this infrastructure presents significant challenges.
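Unfair outcomes like those described above can be measured. One common metric is demographic parity difference: the gap in positive-outcome rates between groups. The minimal sketch below computes it. The group labels and predictions are made-up toy data, and this is only one of several fairness metrics, each with its own trade-offs.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Fraction of positive (1) predictions received by each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred  # pred is 0 or 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups.

    0.0 means all groups receive positive outcomes at the same rate;
    larger values indicate a larger disparity.
    """
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Toy, made-up data: model decisions (1 = approved) for two groups.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print(positive_rates(preds, groups))  # {'a': 0.75, 'b': 0.25}
    print(demographic_parity_difference(preds, groups))  # 0.5
```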

3. OpenAI's Approach: The io of Model-Centric AI

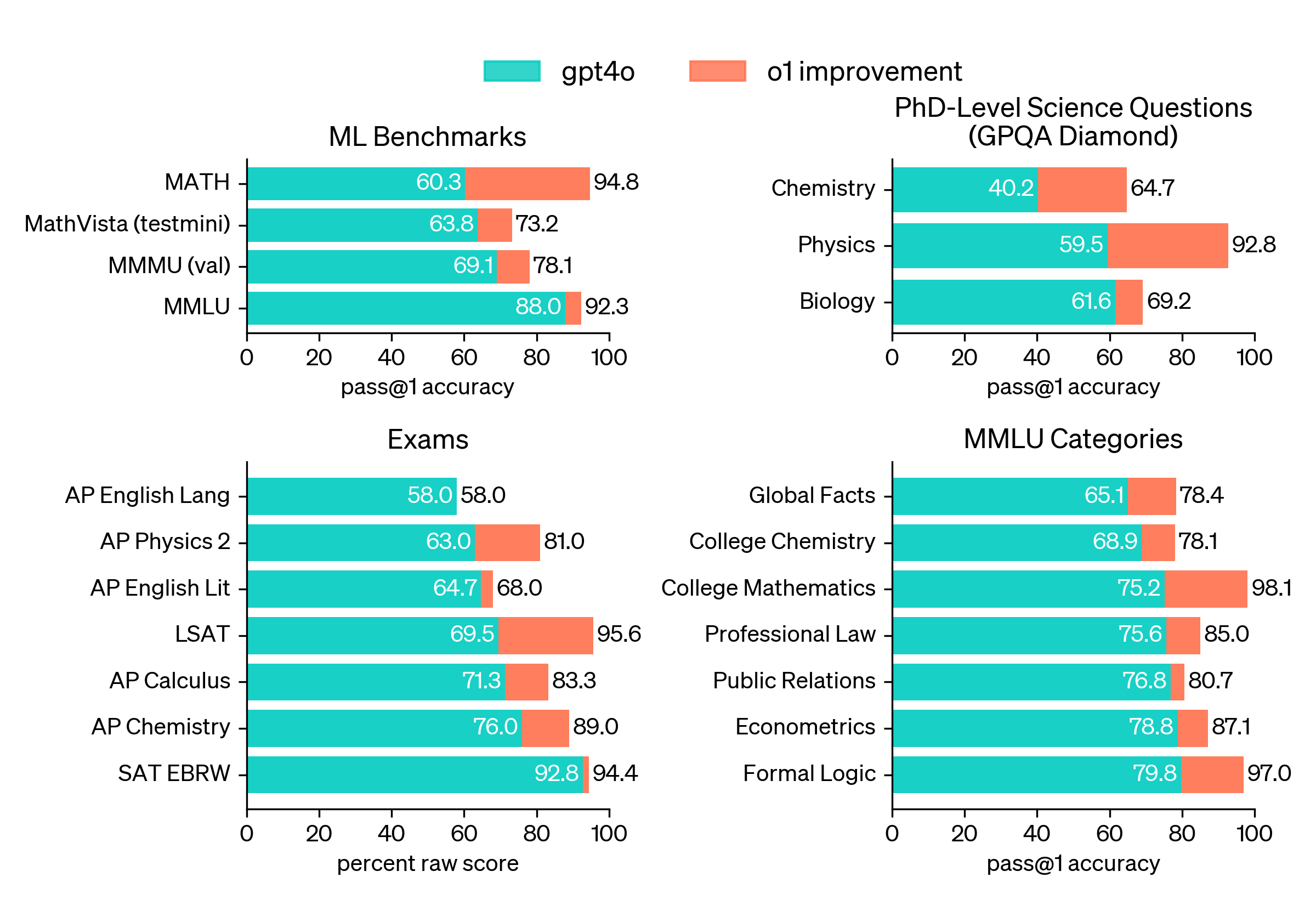

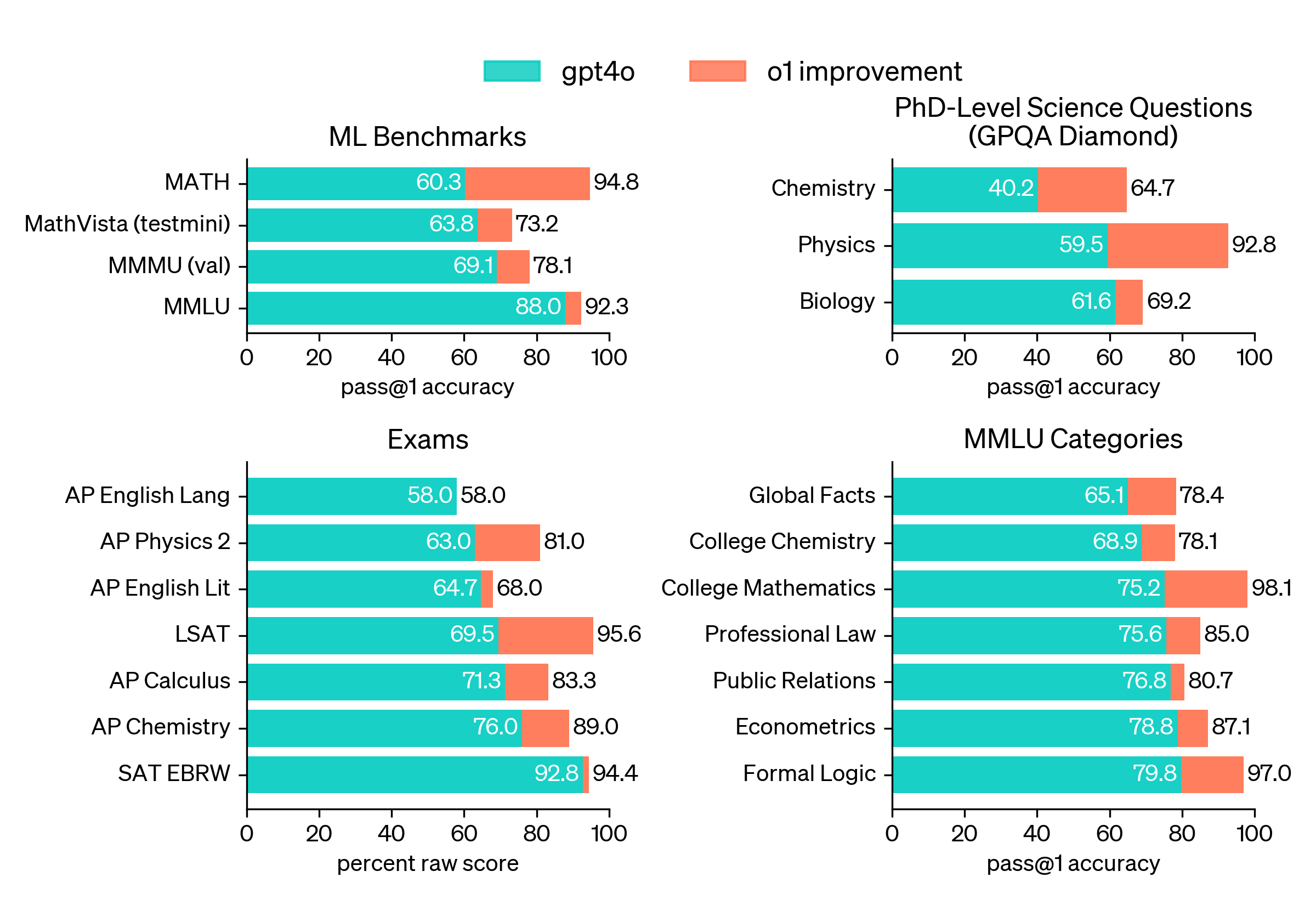

H2: OpenAI's Focus on Model Architecture and Performance:

OpenAI's strategy contrasts sharply with Google's. Rather than centering on data infrastructure, it concentrates on the models themselves: their architecture, efficiency, and performance.

- Focus on improving model efficiency and reducing computational requirements: OpenAI invests heavily in research to create more efficient models that can perform complex tasks with fewer resources.

- Exploration of novel model architectures and training techniques: They continually explore new model architectures and training techniques to improve model performance and reduce the reliance on massive datasets.

- Emphasis on general-purpose AI models adaptable to various tasks: OpenAI aims to create general-purpose models that can be adapted to a wide range of tasks, reducing the need for specialized models for each application.

- Examples of OpenAI's model-centric AI applications: GPT-3, DALL-E, and other models showcase OpenAI's focus on creating powerful, adaptable AI models.
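Computational requirements scale directly with model size. A quick way to see this is to compute how much memory a model's weights alone occupy at different numeric precisions. The back-of-envelope sketch below uses GPT-3's published count of 175 billion parameters. It ignores activations, optimizer state, and other runtime memory. It illustrates lower precision as one generic efficiency lever and is not a description of how OpenAI actually trains or serves its models.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    gpt3_params = 175_000_000_000  # GPT-3's published parameter count
    for dtype in ("fp32", "fp16", "int8"):
        # Prints 700, 350, and 175 GB respectively.
        print(f"{dtype}: {weight_memory_gb(gpt3_params, dtype):,.0f} GB")
```

Even at reduced precision, the weights exceed a single accelerator's memory. This helps explain why efficiency research matters for models of this size.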

H2: The Role of I/O in OpenAI's Ecosystem:

Even with a model-centric focus, I/O remains crucial for OpenAI. However, their approach differs significantly from Google's.

- API-driven access to models: OpenAI exposes its models through a web API. Developers send inputs and receive outputs over HTTP rather than hosting the models themselves, which simplifies I/O for developers and users.

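- Data augmentation and fine-tuning techniques: OpenAI uses fine-tuning, which adapts a pretrained model to a specific task with a comparatively small dataset. Combined with data augmentation, this improves model performance without requiring massive task-specific datasets.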

- Focus on user-friendly interfaces and seamless I/O interactions: They prioritize user experience, making interaction with their models as seamless as possible.
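To illustrate what API-driven access looks like in practice, the sketch below builds a request to OpenAI's Chat Completions endpoint using only the Python standard library. Several details are assumptions: the model name `gpt-4o-mini`, the `OPENAI_API_KEY` environment variable, and the helper names `build_chat_request` and `send`. Available models change over time, so check OpenAI's current API reference.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, model="gpt-4o-mini", api_key=None):
    """Build (but do not send) a POST request for the Chat Completions endpoint.

    The model name is an assumption; the key falls back to the
    OPENAI_API_KEY environment variable when not passed explicitly.
    """
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the reply text (needs network access and a valid key)."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Summarize this article"))` would return the model's reply, given network access and a valid key. In practice, most developers use OpenAI's official client libraries, which wrap this same HTTP exchange.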

H2: Challenges of OpenAI's Model-centric Strategy:

OpenAI's model-centric strategy also presents its own set of challenges.

- Ethical concerns related to AI bias and misuse: Powerful AI models can be misused, and mitigating bias remains a significant concern.

- High computational costs associated with training and deploying large models: Training and deploying large language models still requires substantial computational resources.

- Explainability and transparency of complex AI models: Understanding how complex AI models arrive at their conclusions remains a significant challenge.
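The scale of these training costs can be estimated with a widely used rule of thumb from the scaling-law literature. Training compute is roughly C ≈ 6 · N · D FLOPs, where N is the parameter count and D is the number of training tokens. The sketch below applies it to GPT-3's reported figures of 175 billion parameters and roughly 300 billion tokens. It is an approximation that ignores attention overhead and other implementation details.

```python
SECONDS_PER_DAY = 86_400
PETAFLOP = 10**15


def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute with the C ≈ 6 * N * D rule of thumb.

    About 2 FLOPs per parameter per token for the forward pass and about 4
    for the backward pass; attention overhead and other details are ignored.
    """
    return 6 * num_params * num_tokens


def petaflop_s_days(flops: float) -> float:
    """Convert total FLOPs into petaflop/s-days (1e15 FLOP/s sustained for one day)."""
    return flops / (PETAFLOP * SECONDS_PER_DAY)


if __name__ == "__main__":
    # GPT-3: 175B parameters, roughly 300B training tokens (figures reported by OpenAI).
    c = training_flops(175e9, 300e9)
    print(f"{c:.2e} FLOPs = {petaflop_s_days(c):,.0f} petaflop/s-days")
```

The estimate comes out near 3.15 × 10^23 FLOPs, in line with the roughly 3.14 × 10^23 FLOPs reported in the GPT-3 paper. That is thousands of petaflop/s-days of sustained computation for a single training run.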

4. Comparing I/O and io: A Look to the Future

H2: The Synergistic Potential of Both Approaches:

The optimal path forward might lie in combining the strengths of both approaches.

- Leveraging large datasets for model training and enhancement: Large datasets can be used to train and improve the performance of OpenAI-style models.

- Improving I/O efficiency for better model deployment and accessibility: Optimizing I/O can enhance the accessibility and usability of AI models.

- Developing more robust and ethical AI systems: Combining careful data management at scale with better model design could yield AI systems that are both more robust and more ethical.

H2: The Long-Term Implications for AI Development:

The "I/O or io" debate is likely to shape the future of AI in several important ways.

- Advancements in AI capabilities and applications: Both approaches will drive advancements in AI capabilities and lead to new applications across various fields.

- Ethical considerations and responsible AI development: Addressing ethical concerns and promoting responsible AI development will be crucial.

- Economic and societal impacts of advanced AI technologies: Advanced AI technologies will significantly impact economies and societies, requiring careful planning and management.

5. Conclusion: The Ongoing I/O or io Evolution

Google's data-centric approach, focused on optimizing I/O within its massive infrastructure, and OpenAI's model-centric strategy, prioritizing efficient model architectures, represent two distinct but not mutually exclusive pathways in AI development. The future likely lies in a synergistic combination of both, leveraging the strengths of each to create more powerful, efficient, and ethical AI systems. Following how these two approaches evolve will offer a useful view of where this rapidly changing field is heading.

Featured Posts

-

Moto Gp 2025 Analisis Klasemen Dan Peluang Marc Marquez

May 26, 2025

Moto Gp 2025 Analisis Klasemen Dan Peluang Marc Marquez

May 26, 2025 -

How Canada And Mexico Can Boost Trade Despite Us Tariffs

May 26, 2025

How Canada And Mexico Can Boost Trade Despite Us Tariffs

May 26, 2025 -

Bourse Payot 2024 Victoire Du Journaliste Belge Hugo De Waha

May 26, 2025

Bourse Payot 2024 Victoire Du Journaliste Belge Hugo De Waha

May 26, 2025 -

A Provocative Architects Take How Virtue Signalling Affects The Profession

May 26, 2025

A Provocative Architects Take How Virtue Signalling Affects The Profession

May 26, 2025 -

How 10 New Orleans Inmates Escaped Jail Undetected

May 26, 2025

How 10 New Orleans Inmates Escaped Jail Undetected

May 26, 2025

Latest Posts

-

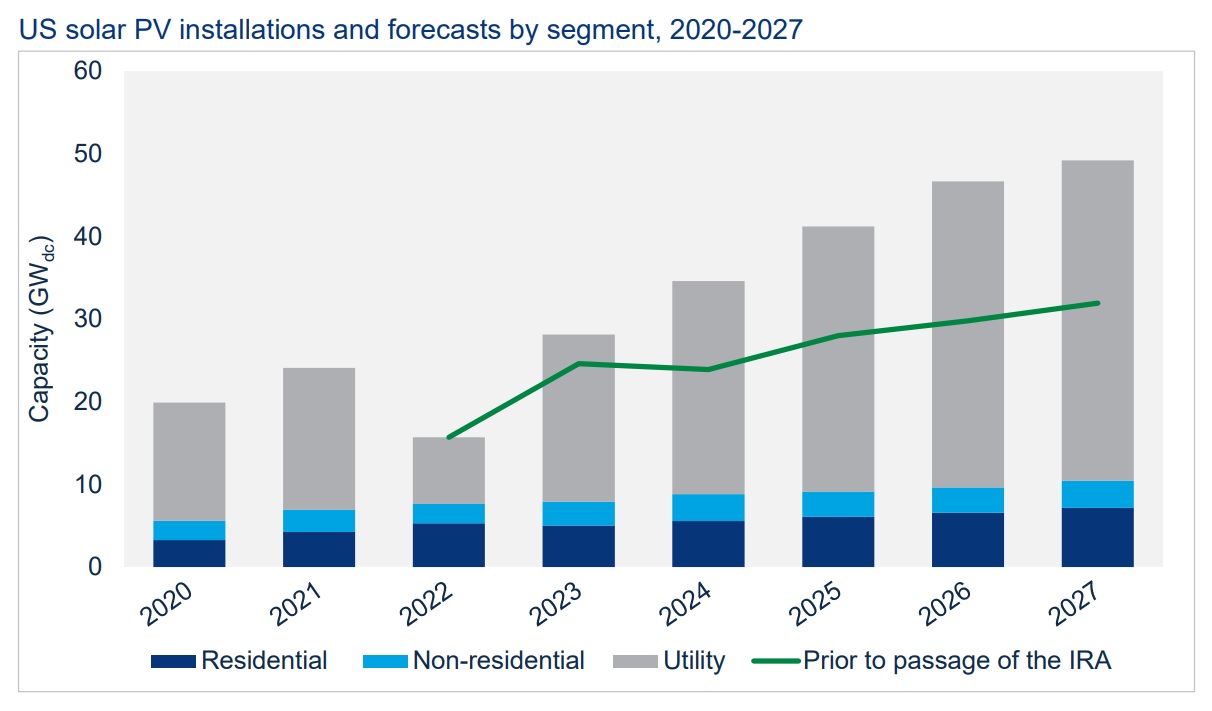

New Us Duties On Solar Imports From Southeast Asia Impact And Analysis

May 30, 2025

New Us Duties On Solar Imports From Southeast Asia Impact And Analysis

May 30, 2025 -

Us Imposes Solar Tariffs Malaysia Among Affected Countries

May 30, 2025

Us Imposes Solar Tariffs Malaysia Among Affected Countries

May 30, 2025 -

Malaysia Faces Us Solar Import Duties Impact On The Industry

May 30, 2025

Malaysia Faces Us Solar Import Duties Impact On The Industry

May 30, 2025 -

Waaree Premier Energies 8

May 30, 2025

Waaree Premier Energies 8

May 30, 2025 -

Increased Market Share Sought By Hanwha And Oci Following Us Solar Tariffs

May 30, 2025

Increased Market Share Sought By Hanwha And Oci Following Us Solar Tariffs

May 30, 2025