OpenAI Facing FTC Investigation: Concerns Over ChatGPT's Data Practices And AI Safety

Data Privacy Concerns Regarding ChatGPT

The OpenAI FTC investigation focuses heavily on how ChatGPT handles user data. The volume of information collected, and the potential for its misuse, raises significant privacy concerns.

Data Collection and Usage Transparency

OpenAI's data collection practices are a central point of the OpenAI FTC investigation. While OpenAI collects user inputs to train its models, the extent of this collection and the precise ways the data is used lack sufficient transparency.

- Data Collected: This includes not only user prompts and responses but also potentially metadata like IP addresses, browsing history (if integrated with a browser), and device information.

- Lack of Transparency: OpenAI's data usage policies may not clearly articulate how user data is employed beyond model training, leaving users uncertain about the potential for secondary uses.

- Potential for Misuse: The risk of personal data being misused, whether intentionally or unintentionally, is a major concern, especially because identifying information can sometimes be extracted from seemingly anonymized datasets. Combined with limited transparency, this could lead to significant legal ramifications under regulations like the GDPR and CCPA, potentially resulting in hefty fines and reputational damage for OpenAI.
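
The re-identification risk mentioned above can be illustrated with a k-anonymity check, a standard way to ask how many records share the same combination of indirect identifiers. The sketch below is only an illustration: the field names (`zip`, `age_band`, `device`) and the sample records are hypothetical, and nothing here describes how OpenAI actually stores or audits data.

```python
from collections import Counter


def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def smallest_group_size(records, quasi_identifiers):
    """Return k, the size of the smallest group; a dataset is k-anonymous for this k."""
    sizes = group_sizes(records, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def risky_records(records, quasi_identifiers, k=2):
    """Return records whose quasi-identifier combination appears fewer than k times."""
    sizes = group_sizes(records, quasi_identifiers)
    return [
        r for r in records
        if sizes[tuple(r[q] for q in quasi_identifiers)] < k
    ]


# Hypothetical records with names removed but indirect identifiers kept.
records = [
    {"zip": "94110", "age_band": "30-39", "device": "ios"},
    {"zip": "94110", "age_band": "30-39", "device": "ios"},
    {"zip": "94110", "age_band": "30-39", "device": "android"},
    {"zip": "10001", "age_band": "60-69", "device": "ios"},
]

k = smallest_group_size(records, ["zip", "age_band"])
unique = risky_records(records, ["zip", "age_band"], k=2)
```

In this sample, one record is the only one with its ZIP code and age band, so removing names alone would not prevent it from being singled out.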

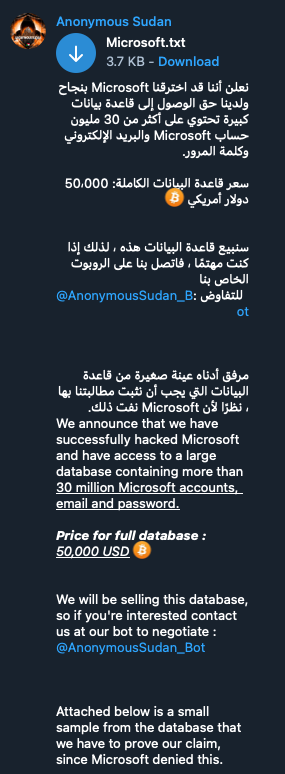

Data Security and Breach Risks

The security of the massive datasets used to train and operate ChatGPT is paramount. A breach could expose sensitive personal information, leading to significant harm for users.

- Existing Security Protocols: While OpenAI likely employs various security measures, the sheer scale and complexity of its systems create a large attack surface.

- Potential Vulnerabilities: Weaknesses in these systems, whether exploited through external hacking or insider threats, remain a significant risk. High-profile data breaches at other technology companies show how severe the consequences can be.

- Consequences of a Breach: A data breach involving ChatGPT could expose millions of users' personal information, leading to identity theft, financial losses, and reputational damage for OpenAI. This underscores the urgency of strengthening data security measures.

Children's Data Protection

The collection and use of children's data by ChatGPT present specific legal concerns under the Children's Online Privacy Protection Act (COPPA).

- Underage User Data: The potential for ChatGPT to collect data from underage users without proper parental consent raises serious compliance issues.

- Potential Misuse of Children's Data: The vulnerability of children's data to exploitation and misuse demands stringent protection measures.

- COPPA Penalties: Non-compliance with COPPA can lead to severe penalties, including substantial fines and legal action.

AI Safety and Ethical Concerns Surrounding ChatGPT

Beyond data privacy, the OpenAI FTC investigation also examines the ethical and safety implications of ChatGPT's capabilities.

Bias and Discrimination in AI Outputs

ChatGPT, like many AI models, is trained on massive datasets that may reflect existing societal biases. This can lead to discriminatory or unfair outputs.

- Examples of Biased Outputs: ChatGPT may exhibit bias in its responses based on gender, race, religion, or other sensitive attributes, perpetuating harmful stereotypes.

- Ethical Implications: The deployment of biased AI systems can have significant societal consequences, exacerbating existing inequalities.

- Mitigating Bias: Addressing bias in AI requires careful data curation, algorithmic adjustments, and ongoing monitoring and evaluation of AI outputs.
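
As a rough illustration of what "ongoing monitoring and evaluation" might involve, the sketch below computes a demographic parity gap: the difference between the highest and lowest rate of favorable outcomes across groups. The group labels and observations are hypothetical, it assumes each output has already been labeled as favorable or not, and it is one simple metric among many rather than a method attributed to OpenAI.

```python
from collections import defaultdict


def favorable_rates(observations):
    """Map each group to the share of its observations marked favorable.

    `observations` is an iterable of (group, is_favorable) pairs.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in observations:
        totals[group] += 1
        favorable[group] += int(is_favorable)
    return {group: favorable[group] / totals[group] for group in totals}


def parity_gap(observations):
    """Return the gap between the best- and worst-treated groups (0.0 if no data)."""
    rates = favorable_rates(observations)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation results: (group label, was the output favorable?)
observations = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = favorable_rates(observations)
gap = parity_gap(observations)
```

A gap near zero suggests similar treatment across groups on this metric; a large gap is a signal to investigate further, not proof of discrimination on its own.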

Misinformation and Malicious Use of ChatGPT

The ability of ChatGPT to generate human-quality text raises concerns about its potential for misuse in spreading misinformation or for malicious purposes.

- Real-World Examples: ChatGPT could be used to fabricate convincing fake articles or impersonate real people in writing, generate sophisticated phishing emails, or spread propaganda on a massive scale.

- Challenges of Detection and Mitigation: Identifying and mitigating the malicious use of ChatGPT presents significant challenges.

- OpenAI's Role: OpenAI bears a significant responsibility in developing mechanisms to detect and prevent such misuse.

Lack of Accountability and Transparency in AI Decision-Making

The "black box" nature of many AI systems, including ChatGPT, makes it difficult to understand how they arrive at their outputs. This lack of transparency raises concerns about accountability.

- Explainable AI (XAI): The development of explainable AI is crucial for increasing trust and enabling effective oversight of AI systems.

- Implications for User Trust: The inability to understand how ChatGPT makes decisions erodes user trust and hinders the development of responsible AI practices.

- Need for Auditing: Mechanisms for auditing AI systems and their decision-making processes are necessary to ensure fairness and accountability.

Conclusion

The OpenAI FTC investigation highlights serious concerns about ChatGPT's data practices and AI safety, with potentially significant consequences for OpenAI and the broader AI industry. It underscores the need for robust regulations and ethical guidelines in artificial intelligence to ensure responsible development and protect user rights. The case could shape the future of AI regulation, so stay informed about further developments and what they mean for responsible AI development.
